Schedule Publications for your Stories and Analyti...

former_member19

2020 Feb 20

8:13 AM

- SAP Managed Tags:

- SAP Analytics Cloud

With Wave 2020.03 for fast-track tenants and the 2020 Q2 QRC for quarterly-release tenants, SAP Analytics Cloud introduces one of its most requested features: scheduling of stories and analytical applications. We call it Schedule Publications. With it, you can schedule a story or an analytical application, with recurrence, and distribute it as a PDF over email to a number of SAC and non-SAC recipients. You can also include a customised message in the email for each schedule and attach a link to the online view of the story, which recipients can use to check the latest online copy of the story or analytical application.

Note :

- Schedule Publications is available only for SAP Analytics Cloud tenants hosted in AWS data centers (Cloud Foundry based) and in Microsoft Azure based data centers.

- In China, it is supported in Alicloud-based SAC data centers.

- A minimum of 25 SAC licenses is required to use this feature. The total can include all types of SAC licenses.

You can create a single schedule or a recurring one with a defined frequency: hourly, daily, or weekly.

Before we dive into the details of what you need and how to create your first schedule, let's go over some of the terminology used in this document:

- What is the Schedule Publications engine?

The Schedule Publications engine is the part of SAP Analytics Cloud, hosted in the cloud, that is responsible for creating schedules, generating publications, and sending them to recipients over email.

- What is a Publication?

A publication is the end output of a story or analytical application's content, generated by the Schedule Publications engine. With the first cut of this release, the only publication format supported is PDF.

- What is the Destination?

The destination is where a publication generated by the Schedule Publications engine is sent so that it reaches the recipients. With the first cut of the release in Wave 2020.03 and 2020 Q2 QRC, email is the only destination supported.

- What is Recurrence?

You can define a recurrence pattern for your schedule. The Schedule Publications engine then runs the schedule in the background according to that pattern and distributes the publications to the recipients. With the first cut of the release in Wave 2020.03 and 2020 Q2 QRC, the recurrence patterns supported are hourly, daily, and weekly.

- What are SAC Users and Non-SAC Users?

Users who are registered in the same SAC tenant are called SAC users. Users who are not part of the tenant's SAC user list are non-SAC users; these are essentially external users.

- How about the authorisation of the publications?

The schedule owner's authorisations apply to every recipient of the publication. This means the content sent to each user is exactly what the schedule owner would see in the same story and its views (using bookmarks).
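To make the recurrence idea concrete, here is a minimal Python sketch that expands a start time and a pattern into individual run times. It is purely illustrative: the function name `expand_occurrences` and its parameters are assumptions made for this example and are not part of any SAP Analytics Cloud API. Only the three patterns named above (hourly, daily, weekly) are modelled.

```python
from datetime import datetime, timedelta

# Step sizes for the three recurrence patterns named in the post.
RECURRENCE_STEPS = {
    "hourly": timedelta(hours=1),
    "daily": timedelta(days=1),
    "weekly": timedelta(weeks=1),
}


def expand_occurrences(start, pattern, repeat_count):
    """Return `repeat_count` run times, the first one being `start`."""
    if pattern not in RECURRENCE_STEPS:
        raise ValueError(f"unsupported recurrence pattern: {pattern!r}")
    if repeat_count < 1:
        raise ValueError("repeat_count must be at least 1")
    step = RECURRENCE_STEPS[pattern]
    return [start + i * step for i in range(repeat_count)]


# Hypothetical example: a weekly schedule that runs three times.
runs = expand_occurrences(datetime(2020, 3, 2, 9, 0), "weekly", 3)
for run in runs:
    print(run.isoformat())
```

Each run time in the list corresponds to one occurrence that would show up on its own date in a calendar.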

SAC Data Centers where Scheduling Publications is supported:

Schedule Publications is available in tenants provisioned on the SAP Cloud Platform Cloud Foundry (CF) environment. The supported data centers are listed below:

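| Data center | Environment |
|---|---|
| AP11-sac-sacap11 | CF |
| AP12-sac-sacap12 | CF |
| BR10-sac-sacbr10 | CF |
| CA10-sac-sacca10 | CF |
| CN40-sac-saccn40 | CF |
| EU10-sac-saceu10 | CF |
| EU20-sac-saceu20 | CF |
| JP10-sac-sacjp10 | CF |
| US10-sac-sacus10 | CF |
| US20-sac-sacus20 | CF |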

The latest addition to the above list is the Ali Cloud data center, enabled through a patch update starting with version 2020.8.11 for QRC-based tenants and with versions 2020.11.2 and 2020.12.0 for fast-track tenants. Depending on which group your tenant belongs to and its version, you will receive this update on your tenant.

What you need to get started?

First, Schedule Publications must be enabled at the tenant level by your organisation's SAP Analytics Cloud admin. To do so, log in as an admin, go to System->Administration, and enable the toggle

"Allow Schedule Publications"

If you want your schedules to be sent to non-SAC users as well as SAC users, also enable the toggle

"Allow Schedule Publication to non-SAC users"

Schedule Publications is not enabled by default for all users in your organisation. Your admin needs to assign the permission to a role so that its users have the right to create schedules. To do so, in the SAC tenant application menu, go to Security->Roles and click any existing role to which you would like to add the Schedule Publications right.

How to create Schedule Publications?

You can create a schedule if:

- You are a BI Admin or an Admin. By default, these roles come with the Schedule Publication permission.

- The Schedule Publication permission has been assigned to a custom role that you hold.

- You have Save or Save As permission on the story, once the Schedule Publication permission has been given.
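The conditions above can be read as a simple check. The following Python sketch shows one possible reading, in which a user needs the Schedule Publication permission (through a default admin role or a custom role) and, in addition, Save or Save As permission on the story. The function names, the role-name strings, and this particular combination of the conditions are assumptions made for illustration; the sketch does not call any SAP Analytics Cloud API.

```python
# Roles that, per the post, include the Schedule Publication permission by default.
DEFAULT_SCHEDULING_ROLES = frozenset({"Admin", "BI Admin"})


def has_schedule_permission(roles, custom_roles_with_permission=frozenset()):
    """True if any of the user's roles carries the Schedule Publication permission."""
    return any(
        role in DEFAULT_SCHEDULING_ROLES or role in custom_roles_with_permission
        for role in roles
    )


def can_schedule_story(roles, can_save_story, custom_roles_with_permission=frozenset()):
    """Assumed rule: scheduling permission AND Save / Save As permission on the story."""
    return has_schedule_permission(roles, custom_roles_with_permission) and can_save_story


# Hypothetical users and role names.
print(can_schedule_story({"BI Admin"}, can_save_story=True))
print(can_schedule_story({"Viewer"}, can_save_story=True))
print(can_schedule_story({"Sales Planner"}, True, frozenset({"Sales Planner"})))
```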

Once a user has been granted access to create schedules, they can open the Schedule Publications dialog in either of two ways:

- Select the story or analytical application under Browse Files (using the checkbox), then choose Share ->Schedule Publications.

- Alternatively, open the story, go to the Share option, and select Schedule Publication.

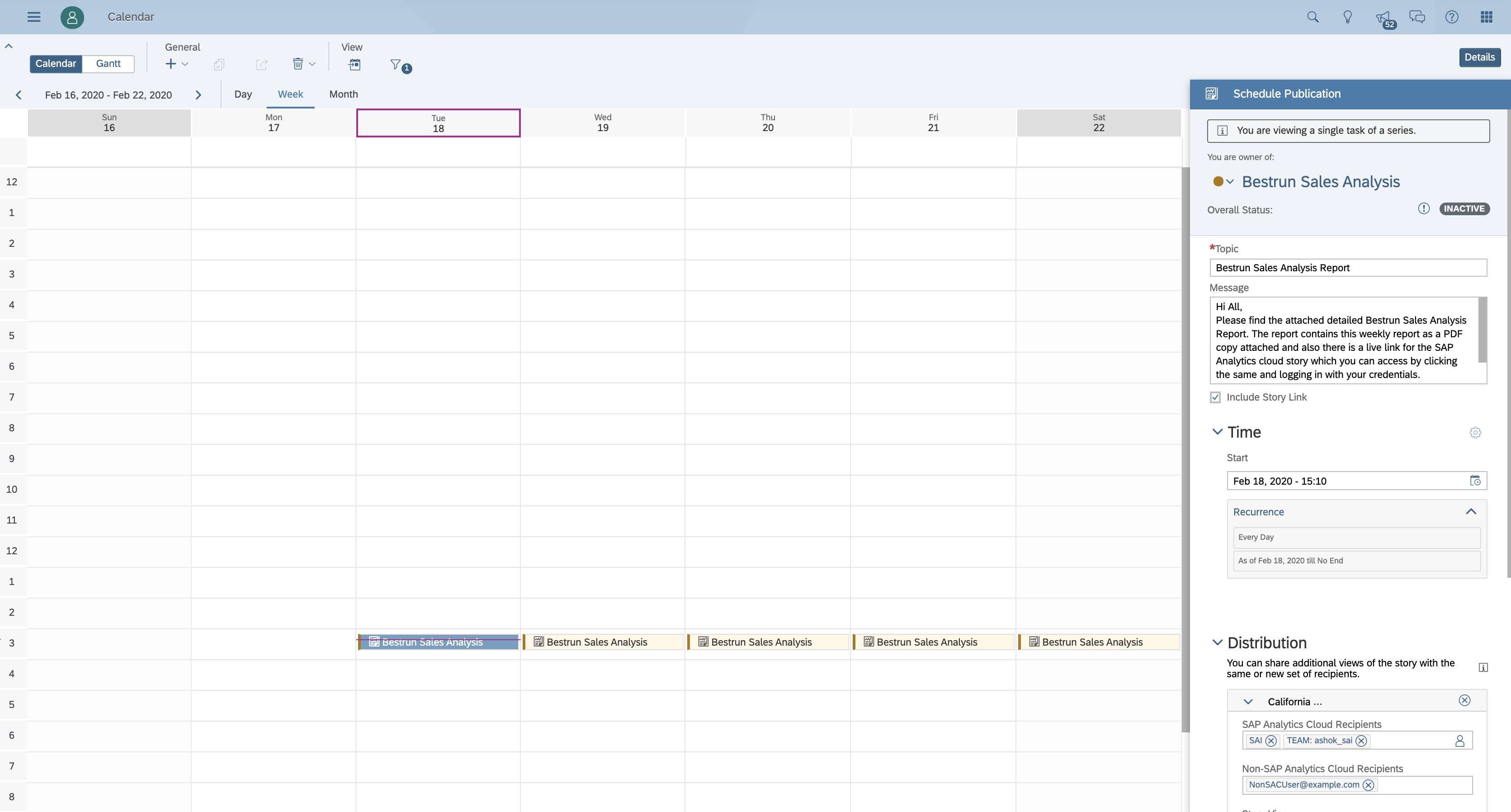

- Once the Schedule Publications dialog box opens, enter the details as required. The different options are explained here.

Name : Provide a name for your schedule.

Start : Provide a start date and time for your schedule. You can also add recurrence details by selecting the option "Add Recurrence". Under Recurrence, you can set the pattern to hourly, daily, or weekly, and define how many times the schedule repeats, including when the recurrence ends.

Topic : This is the subject of the email delivered to the recipients.

Message : This is the body of the email sent to the recipients.

Include Story Link : If you select this checkbox, a link to the story or analytical application is sent along with the email. If you personalise the publication by selecting a bookmark to be delivered (see below), the link to the personalised bookmark view is embedded.

Distribution : Here you define the view of the story that is delivered to the recipients. You can deliver different views of the same story to different users or teams with the help of story bookmarks. If your story has multiple bookmarks, each relevant to different users or teams, you can use them; otherwise, create one. The advantage of bookmarks is that you can create a unique personalised view by applying different filter options. For example, for a story that covers multiple states, you can create different bookmarks with combinations of states as well as combinations of other dimensions.

- Distribution (continued) : You can create one or more views (the story's default view or different bookmarks) to be delivered to different SAC users or teams. Let's focus on one view and go through all its options. Next to the down arrow, double-click "View1" and provide a name for your view, for example "California Sales Managers".

- SAP Analytics Cloud Recipients : Click the person icon and select the SAC users or teams who should receive the publication.

- Non-SAP Analytics Cloud Recipients : These are users who are not on the SAC user list and are not part of the SAC tenant. You can include them by typing their email addresses manually. Under the default SAP Analytics Cloud licensing, you can enter a maximum of 3 non-SAC recipients per view.

- Story View : Choose the story or bookmark view that you want to deliver to the above recipients. You can choose between Original Story, Global Bookmarks, and My Bookmarks. The authorisation on the publication is the same as the schedule owner's, and that exact view is delivered to all recipients.

- File Name : The name of the publication delivered to the recipients.

- PDF Settings : Select this option to define PDF settings such as which pages to deliver, the grid settings for column and row selection, and whether to insert an appendix containing metadata about the story.
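The dialog fields described above can be pictured as a small data model. The Python sketch below is only an illustration of how the pieces relate (one schedule, one or more views, recipients per view) and of the limit of 3 non-SAC recipients per view under default licensing. All class names, field names, and the `validate` helper are hypothetical and do not correspond to an SAP Analytics Cloud API; the rule that a schedule needs at least one view with at least one recipient is likewise an assumption.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional

MAX_NON_SAC_RECIPIENTS_PER_VIEW = 3  # default-licensing limit quoted in the post
SUPPORTED_RECURRENCES = (None, "hourly", "daily", "weekly")


@dataclass
class View:
    name: str                 # e.g. "California Sales Managers"
    story_view: str           # "Original Story" or the name of a bookmark
    file_name: str            # name of the delivered PDF
    sac_recipients: List[str] = field(default_factory=list)
    non_sac_recipients: List[str] = field(default_factory=list)


@dataclass
class Schedule:
    name: str
    start: datetime
    topic: str                # email subject
    message: str              # email body
    include_story_link: bool = False
    recurrence: Optional[str] = None
    views: List[View] = field(default_factory=list)


def validate(schedule):
    """Return a list of problems; an empty list means no problem was found."""
    problems = []
    if not schedule.name.strip():
        problems.append("schedule name is empty")
    if schedule.recurrence not in SUPPORTED_RECURRENCES:
        problems.append(f"unsupported recurrence: {schedule.recurrence}")
    if not schedule.views:
        problems.append("at least one view is required")
    for view in schedule.views:
        if not view.sac_recipients and not view.non_sac_recipients:
            problems.append(f"view '{view.name}' has no recipients")
        if len(view.non_sac_recipients) > MAX_NON_SAC_RECIPIENTS_PER_VIEW:
            problems.append(f"view '{view.name}' exceeds the non-SAC recipient limit")
    return problems


# Hypothetical example values.
schedule = Schedule(
    name="Weekly sales report",
    start=datetime(2020, 3, 2, 9, 0),
    topic="Weekly sales figures",
    message="Please find the latest report attached.",
    include_story_link=True,
    recurrence="weekly",
    views=[View("California Sales Managers", "CA bookmark", "sales_ca",
                sac_recipients=["SALES_TEAM_CA"],
                non_sac_recipients=["partner@example.com"])],
)
print(validate(schedule))
```

Collecting all problems in a list, rather than raising on the first one, mirrors how a dialog would typically flag every invalid field at once.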

Once you have entered all the details, that's it! Click OK to create your schedule.

How do I view the schedules I created, and how can I modify them?

You can view the schedules you created in the Calendar view. Go to the SAC application menu and select Calendar. You can see the schedule right there. If it is a recurring schedule, you will see it on each of the dates and times defined by the schedule owner.

You can also modify a single occurrence or the entire series. Select the occurrence in the calendar view; a new panel opens on the right side where you can modify it.

When the scheduled time arrives, the Schedule Publications engine picks up the job, creates the publications, and sends them to the defined recipients as email attachments. The maximum delivery size allowed per email, including attachments, is 12 MB.

Schedule Publications is a resource-intensive task: the Schedule Publications engine hosted in SAP Analytics Cloud performs a variety of jobs in the background to create the publications and deliver the emails. With standard licensing, you get a limited number of schedules out of the box.
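As a rough illustration of the 12 MB limit, the Python sketch below checks whether an email body plus its attachments stays within that size. The function name is hypothetical, and the interpretation of 12 MB as 12 × 1024 × 1024 bytes is an assumption; the post does not say how the size is measured, and email encoding can increase the transmitted size of attachments.

```python
# Assumption: "12 MB" is read as binary megabytes.
MAX_EMAIL_BYTES = 12 * 1024 * 1024


def fits_in_one_email(body_bytes, attachment_sizes):
    """True if the body plus all attachments stays within the per-email limit."""
    return body_bytes + sum(attachment_sizes) <= MAX_EMAIL_BYTES


# Hypothetical sizes in bytes: a small message body and one PDF.
print(fits_in_one_email(2_000, [5 * 1024 * 1024]))
print(fits_in_one_email(2_000, [13 * 1024 * 1024]))
```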

Scheduling Story based on Live connection

When scheduling a story based on a live connection, you need to ensure that certain settings are enabled and configured correctly. If they are not, the schedule will fail.

- Make sure that the “Allow live data to leave my network” switch is enabled under System-> Administration->Datasource Configuration.

- Go to the connection on which the story is created and configure its advanced settings.

- How to configure the advanced settings is described in Live Data Connections Advanced Features Using the SAPCP Cloud Connector.

- Once the advanced settings have been configured successfully, you can schedule a story created on this connection.

Please refer to the other related blogs for more information (this space will be updated as and when more blogs appear):

- Number of Publications available under your current SAC licenses

- How to schedule an Analytics application Publications

- Defining Prompt values while creating or editing Schedule Publications in SAP Analytics Cloud

- FAQ’s on Scheduling Publications in SAP Analytics Cloud

- Schedule publications in sap analytics cloud

- Manage all schedules created by different users under one view

- Video
