- SAP Datasphere connection with Confluent Cloud

To write this blog, I worked together with Perry Krol from Confluent, who is very experienced. Big thanks to him for his help!

The target connection is already available and that's the topic of this Blog.

Before I can connect to Confluent Cloud, I have to complete a few preparatory steps. Customers usually already have a cluster up and running in Confluent Cloud with all connection details available, but I want to show what it looks like when nothing is available yet.

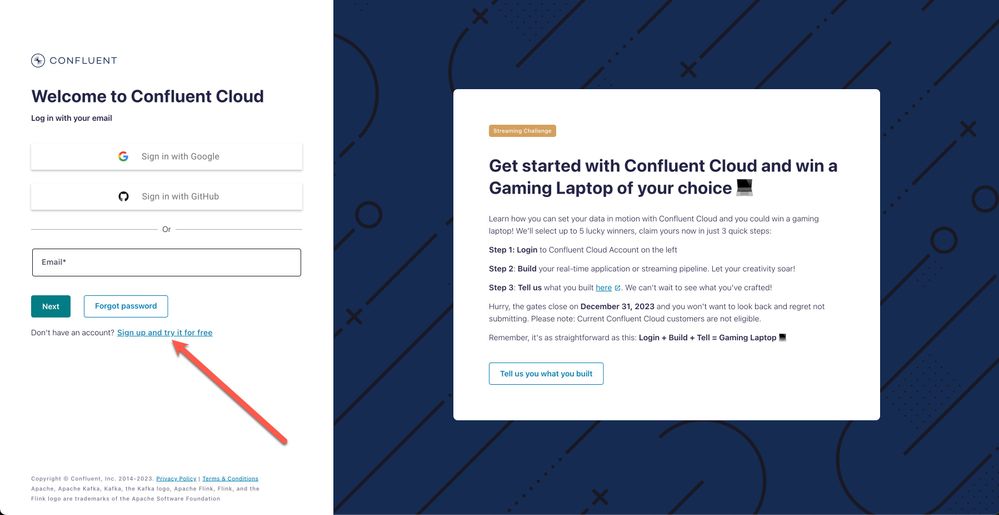

First, I have to create a free account on https://www.confluent.cloud

These steps can be followed as well on the documentation website of Confluent, where all details are explained: https://docs.confluent.io/cloud/current/get-started/index.html

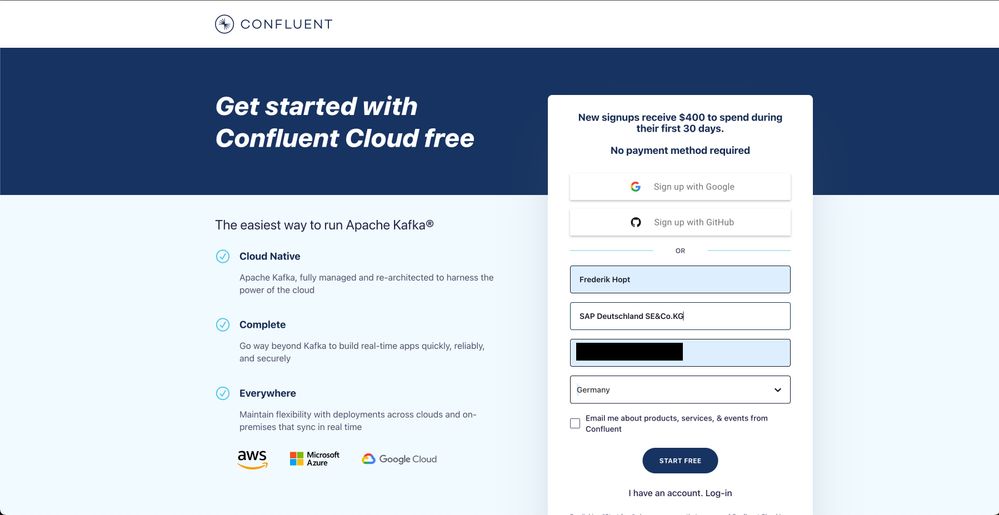

Now click on "sign up", or log in if you already have an account. I then entered the necessary information for a free trial version.

A cool tip I got from Perry is to append a "+" suffix to your email address (this only works for Gmail accounts, as far as I know...). If you add "+xyz" to your email address, you get a "new" email address alias, but mail sent to it is still delivered to your original address! So, for example, if you have company@gmail.com, mail sent to company+abcd@gmail.com is delivered to company@gmail.com.

You can read about it in this blog: https://gmail.googleblog.com/2008/03/2-hidden-ways-to-get-more-from-your.html
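As a small illustration of the alias tip, here is a minimal Python sketch. The function names `plus_alias` and `base_address` are made up for this example, and the behavior is only what the tip above describes for Gmail; other mail providers may treat the "+" differently.

```python
def plus_alias(address: str, tag: str) -> str:
    """Return the address with '+tag' appended to the part before the '@'."""
    local, sep, domain = address.rpartition("@")
    if not sep or not local or not domain:
        raise ValueError(f"not an email address: {address!r}")
    return f"{local}+{tag}@{domain}"


def base_address(alias: str) -> str:
    """Strip a '+tag' suffix again, giving the inbox the mail is delivered to."""
    local, sep, domain = alias.rpartition("@")
    if not sep or not local or not domain:
        raise ValueError(f"not an email address: {alias!r}")
    # Everything after the first '+' in the local part is the alias tag.
    return f"{local.split('+', 1)[0]}@{domain}"


if __name__ == "__main__":
    alias = plus_alias("company@gmail.com", "abcd")
    print(alias, "is delivered to", base_address(alias))
```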

After clicking on "Start free", you will get an email asking you to verify your email address to activate your account:

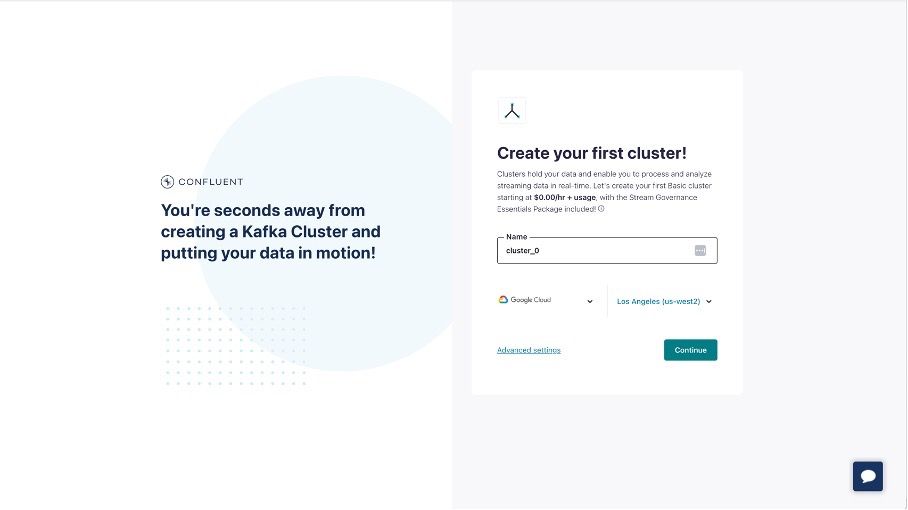

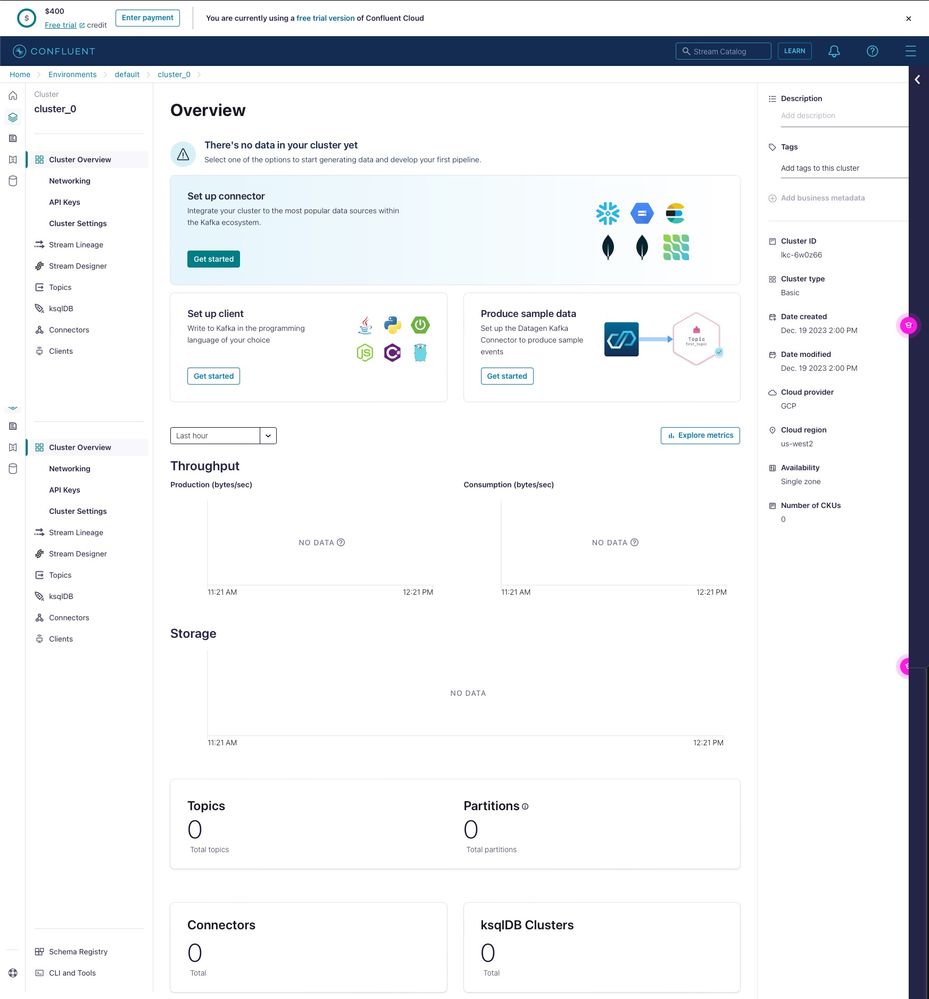

After you have verified your email address with Confluent Cloud, you’re asked to set a password for your new Confluent Cloud user account, which will be used to authenticate via the Confluent Cloud Console. After you’ve set your password, another dialog shows up to help you launch your first Kafka cluster on Confluent Cloud. I’ve used the default settings, which means a Basic cluster will be created on the Google Cloud Platform in the us-west2 region.

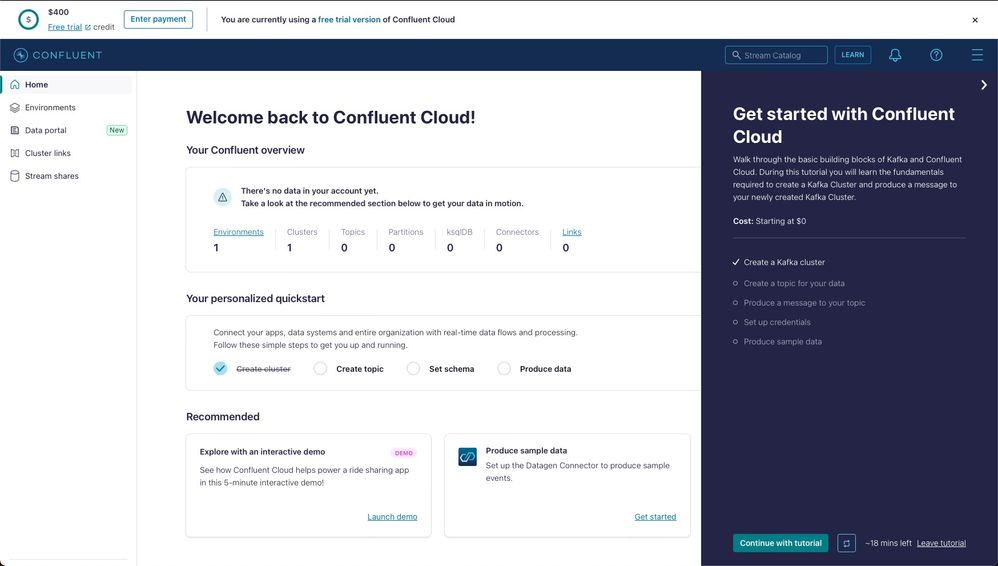

The next screen in the dialog asks you to optionally add a payment method, but as part of our new Confluent Cloud signup, we have received $400 in Confluent Cloud credits to spend during our first 30 days. As such, we skip this step.

In the next screen of the dialog, we can see that our Confluent Cloud Cluster has been launched and is ready to be used.

Be aware that as soon as you have created an account, you will get some emails from Confluent that try to help you get started...

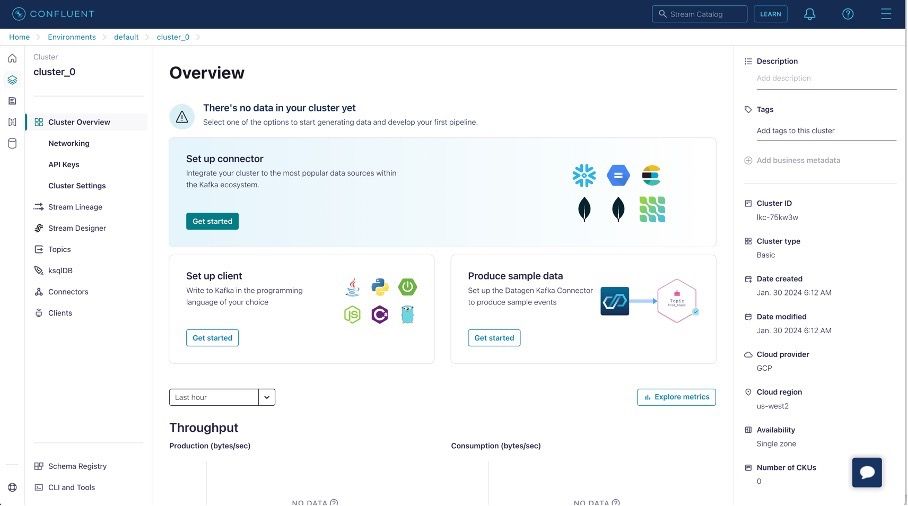

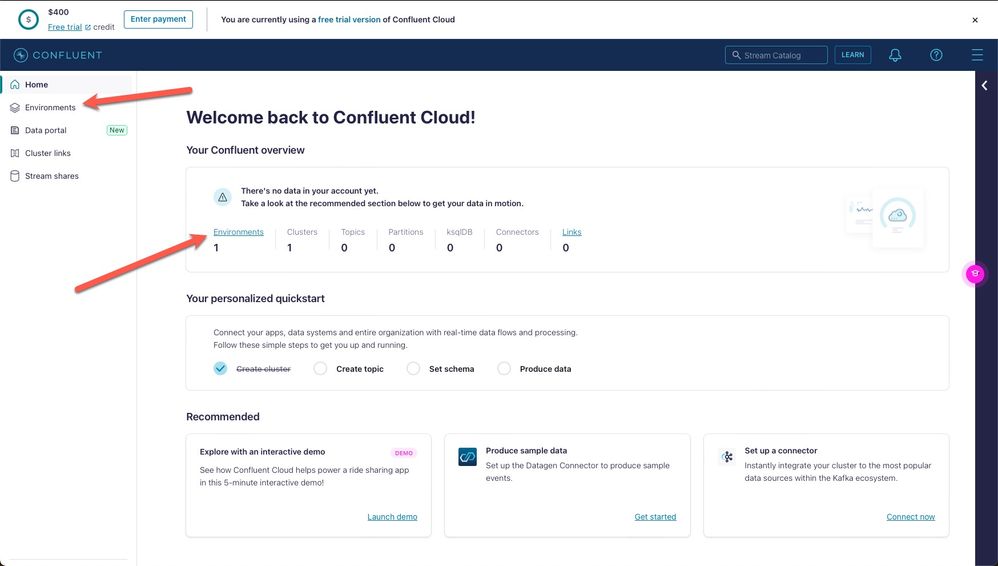

When you log in to your new Confluent Cloud account again later, you can see the start screen:

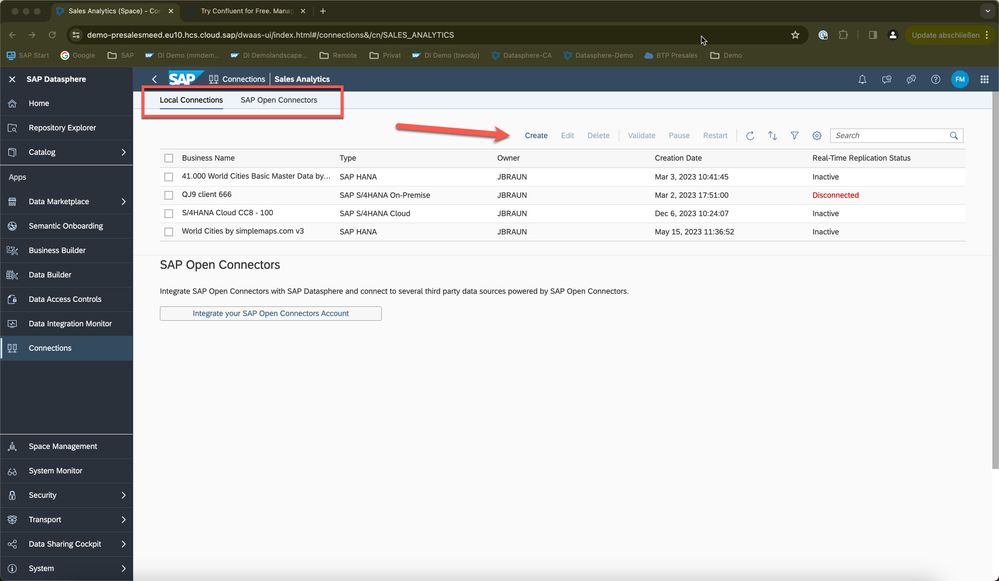

Now we switch to SAP Datasphere to create a connection to S/4 HANA as source. I'm using our Demo environment where I have the user rights to create a connection...

After my login to the system, I can see the Home screen:

Then I have to go to "connections" on the left menu:

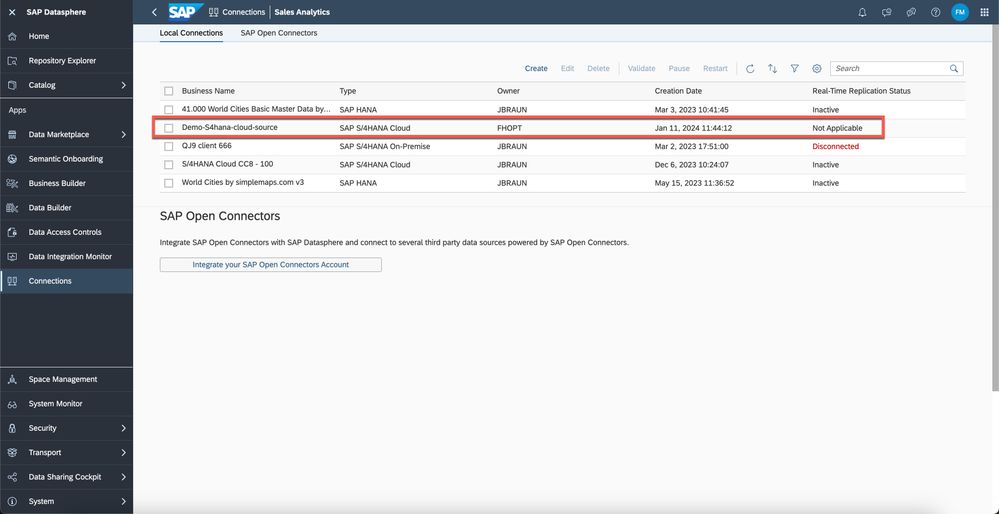

Now I need a space where the connection will be stored and the data is written to. In my case, I select "Sales Analytics". This name differs from customer to customer because every customer defines their own spaces. In the space itself I can select either a local connection or an "SAP Open Connector". In my case I will create a new connection in the local connections:

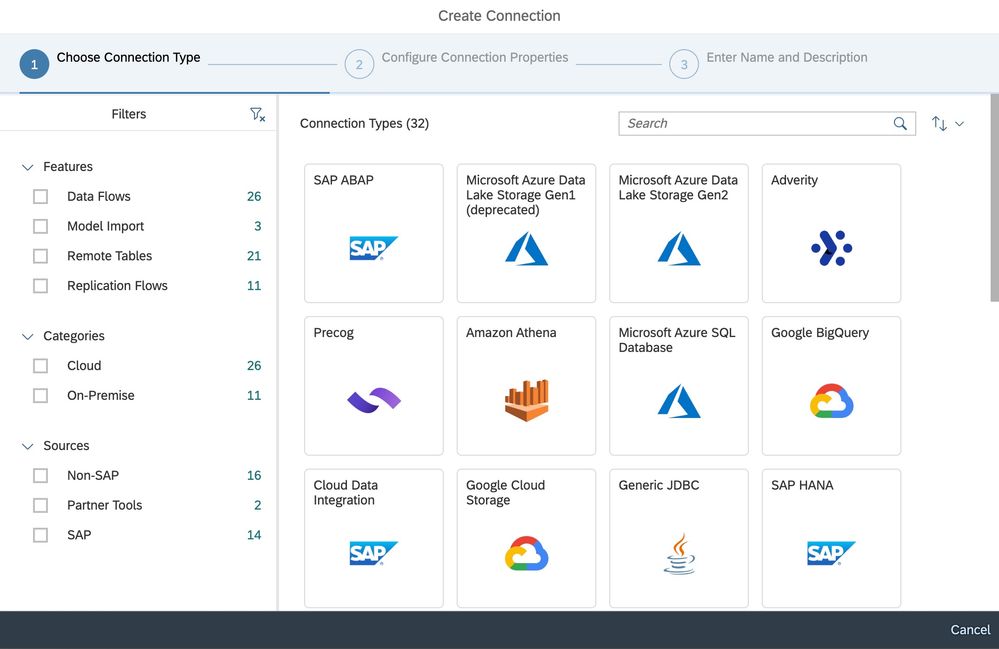

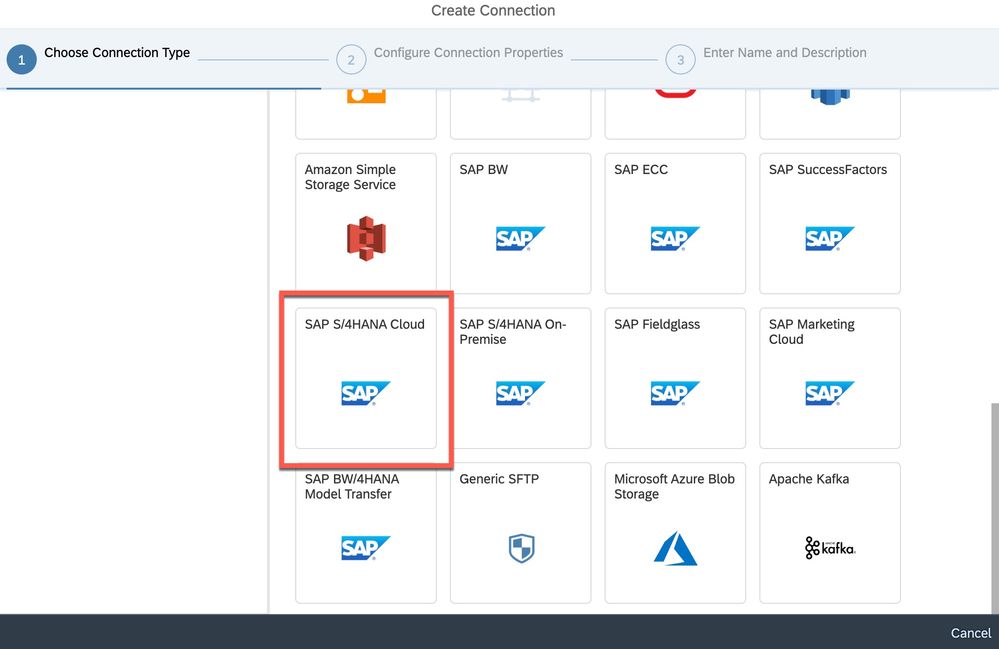

A popup window opens, and I have to select the connector I want to use. I scroll down to S/4 HANA Cloud because I want to get data out of this system:

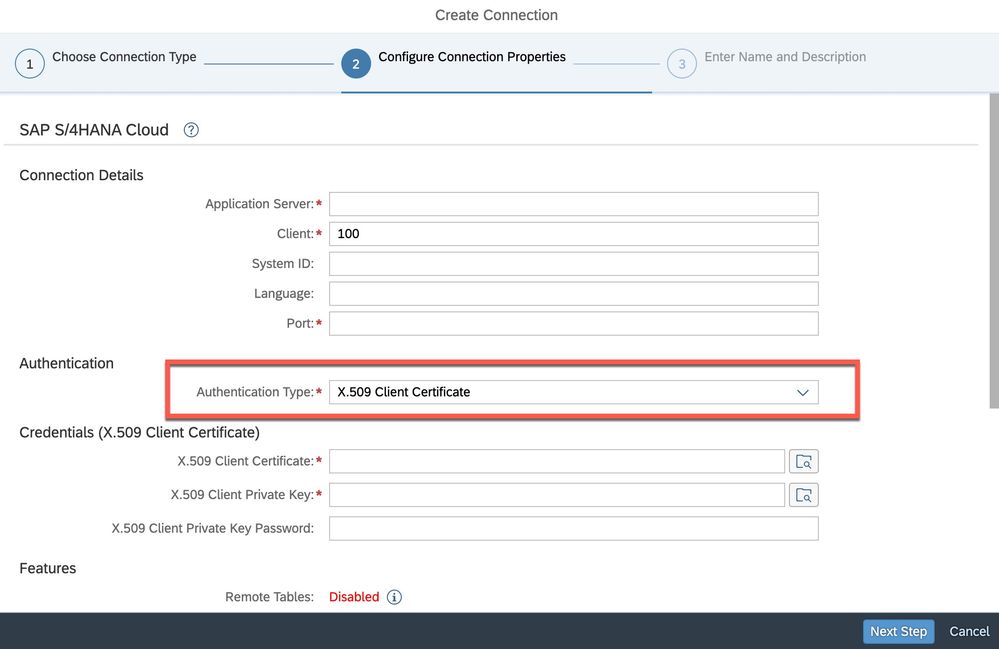

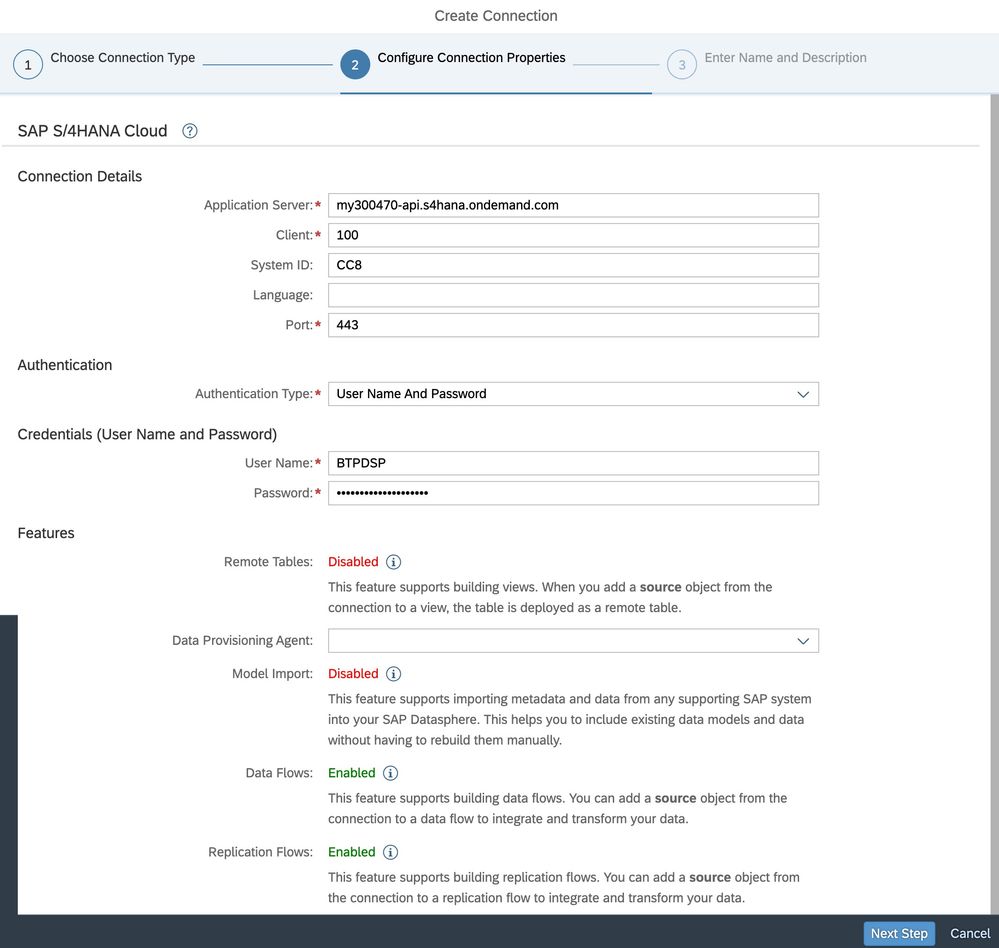

Now I can enter the connection details, but I have to select another authentication method. At customers and in production systems you typically use certificates. For this demo I'm selecting username and password, because it's easier to set up...

At the bottom of the popup window, you can see the features this connection supports.

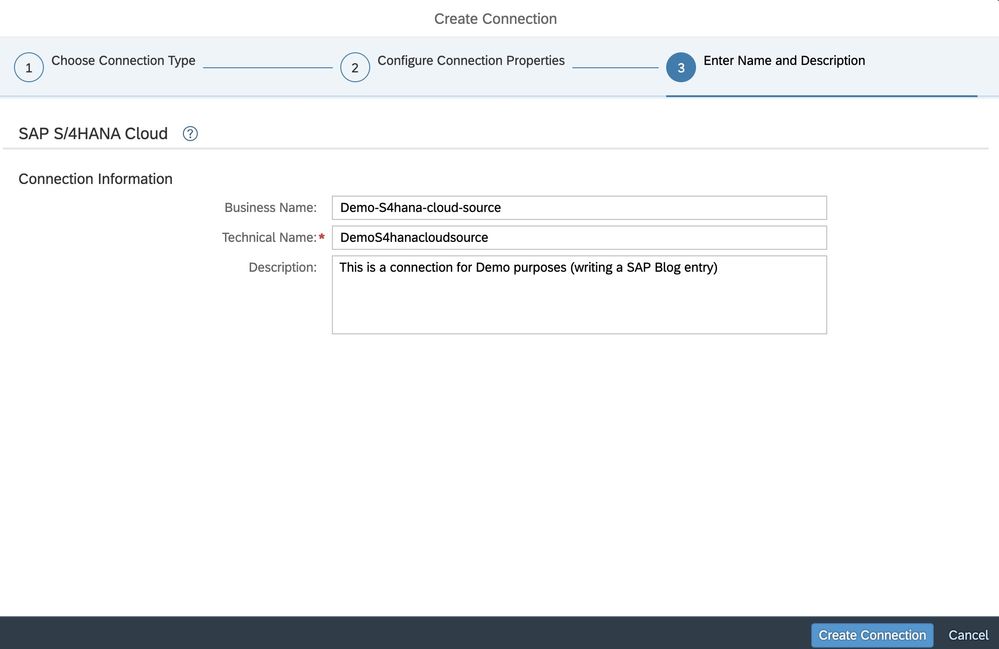

Now I need to click on "Next Step" and I have to enter a Business Name to better understand the purpose of this connection:

And after clicking on "Create connection" I can see the connection created and I can start to use it:

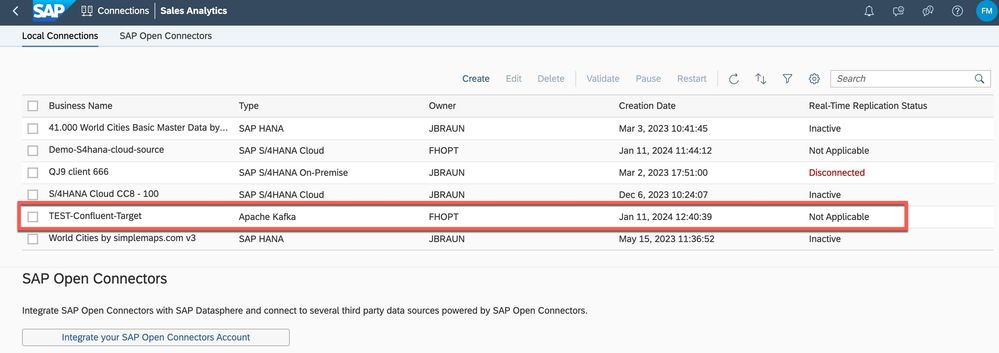

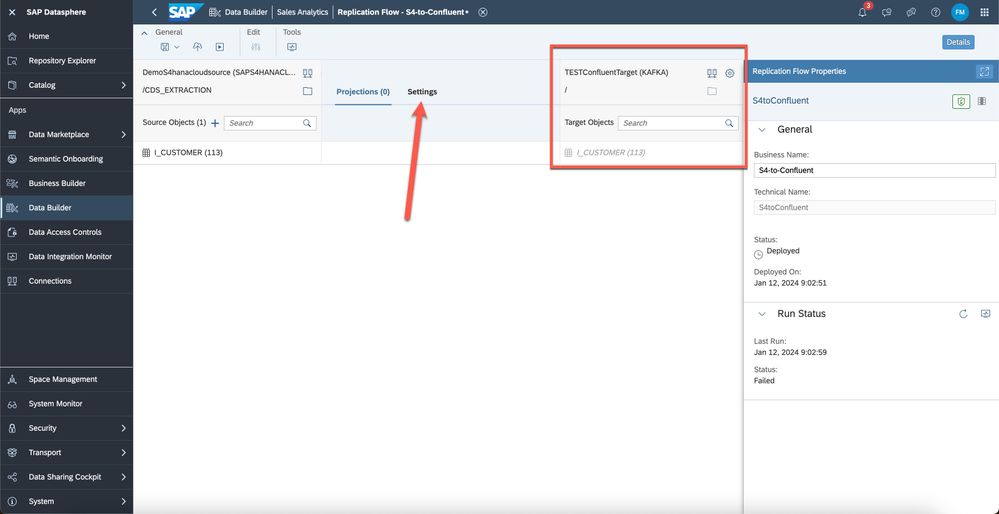

We have now created the source we want to use in our replication service, but we need to create a target connection as well. This will be our Confluent Cloud cluster, so I have to follow the same procedure for Confluent:

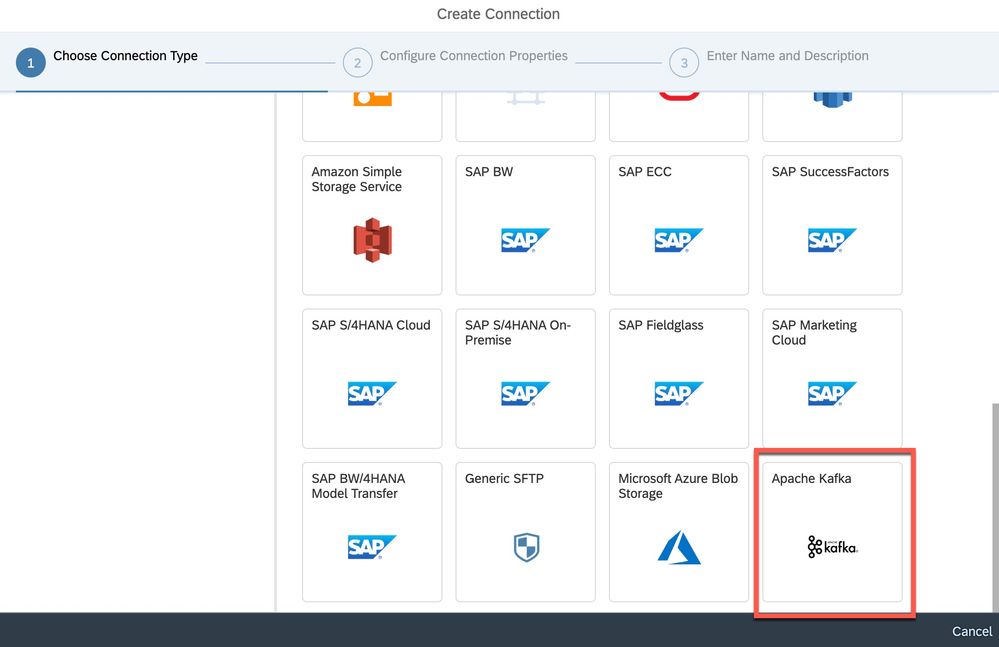

I'm using the "Apache Kafka" Connection and then I have to enter the Details, but I need to go back to Confluent first to see all connection details...

So, I'm switching to Confluent Cloud, where I have to create credentials to authenticate against the newly created Confluent Cloud cluster. For application credentials we will generate an API key, and while in the Confluent Cloud Console I can also see the other connection details:

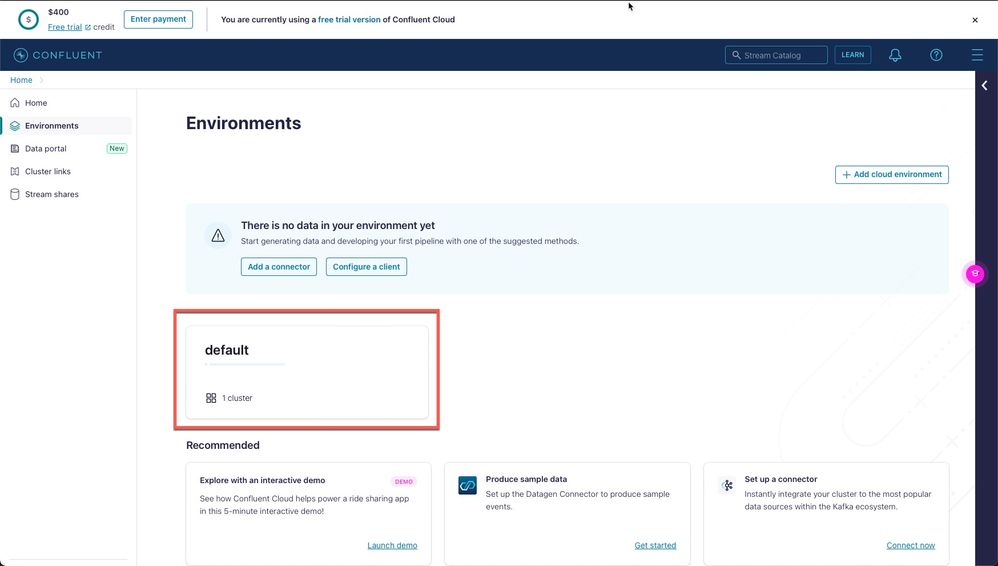

There is a default environment already created which we will use:

Now I can click on the Cluster itself to see more details:

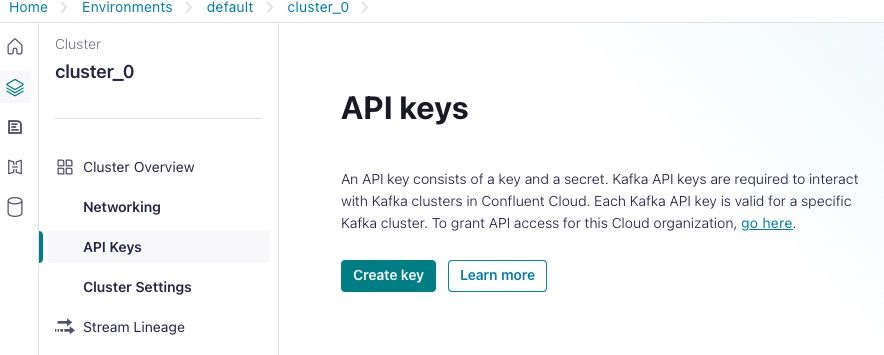

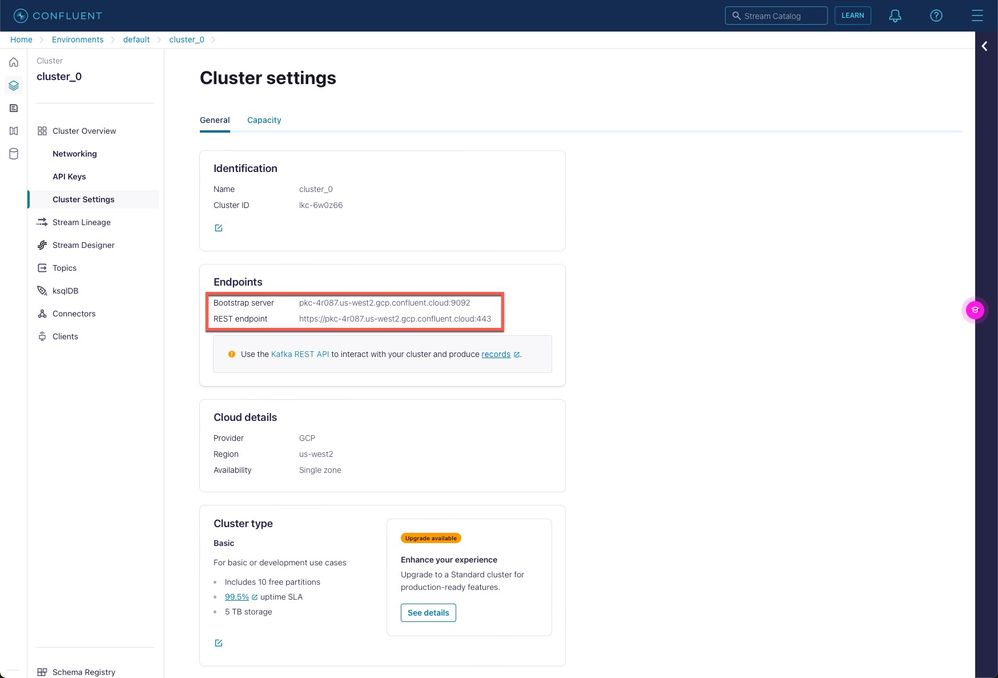

For the connection details I need to create credentials, and to keep things simple, we will generate an API key, which I can do by clicking on the left menu:

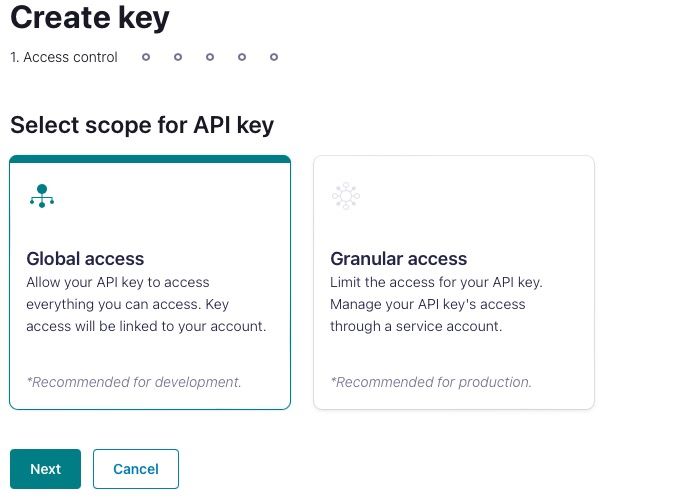

To manage fine-grained access control to the resources in the Confluent Cloud cluster, we need to decide which authorization scope to assign to the API key. There are 2 possible selections. I will use the first one, "Global access", which inherits the full administrative access rights of the Confluent Cloud user account that we use in the Confluent Cloud Console. This avoids the complexity of granting specific authorization rights to the API key during this initial walkthrough:

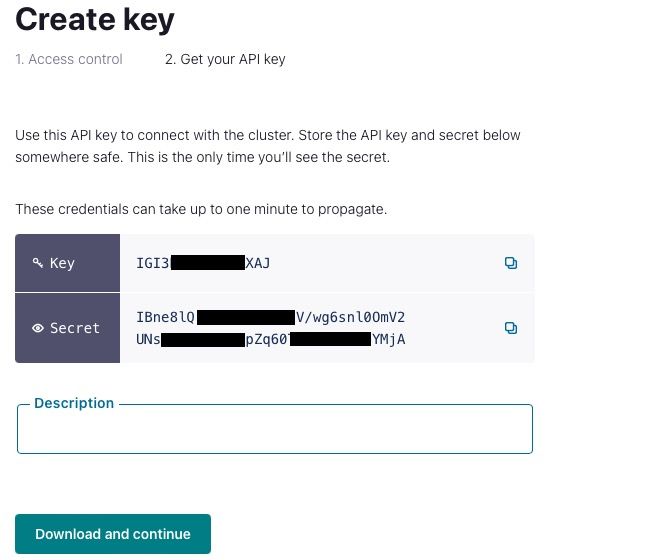

And as soon as I click on the "next" button, the key is created!

Please take care of the credentials and store them somewhere safe! Additionally, you can enter a description if you wish and then select "Download and continue". The key and the secret will be downloaded into a text file on your computer.

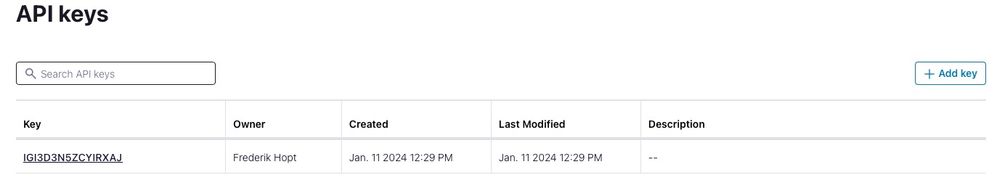

Now you can see the list of all keys you have created:

When you click again on the created key, you can see some information but not the secret anymore!

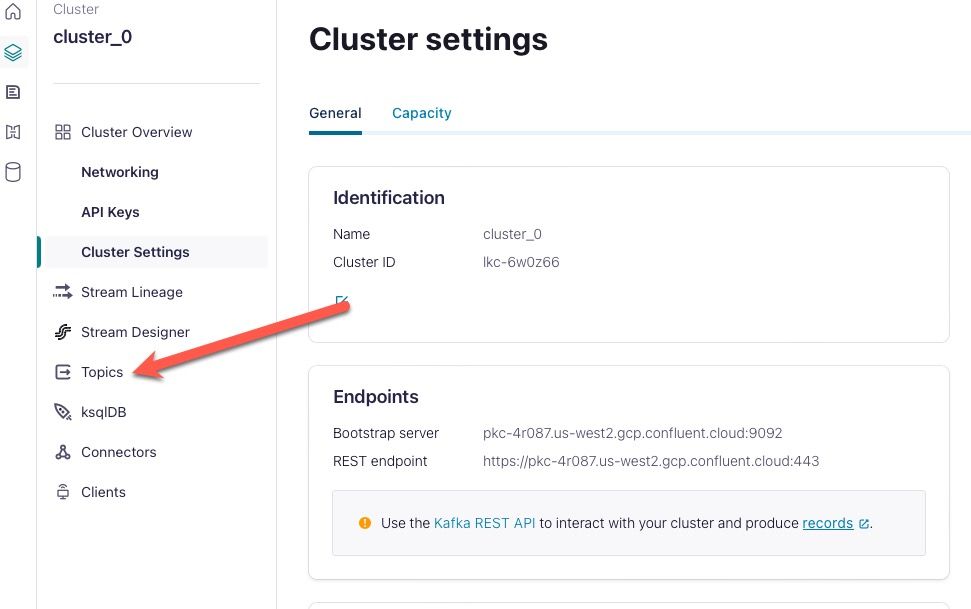

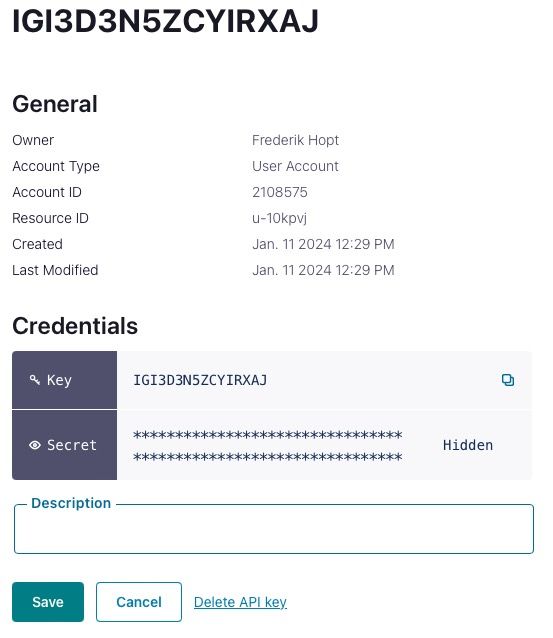

Now I need the server names and ports to enter in the connection details in Datasphere. Therefore, I select the menu option "Cluster settings" on the left side, where I can see all necessary details:

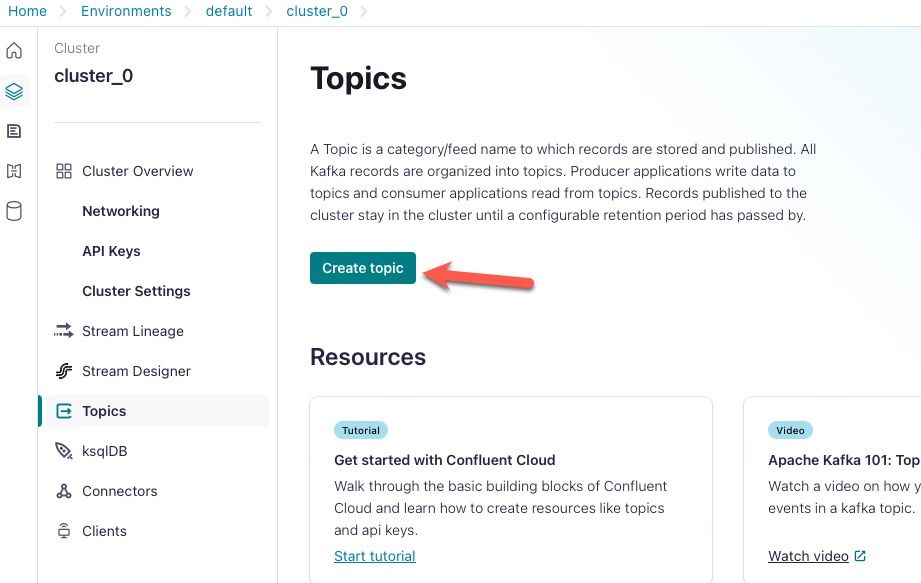

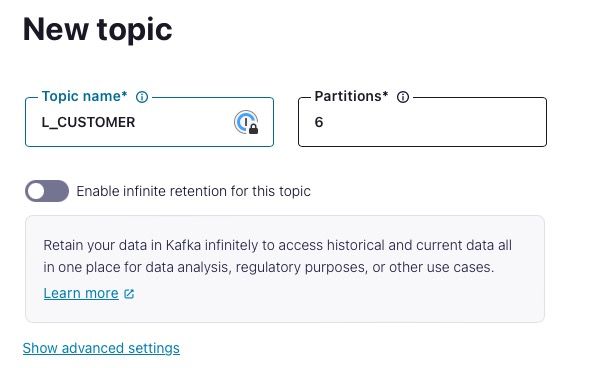
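The bootstrap server is typically shown as one `host:port` string. The following Python sketch splits such a value into host and port so both can be checked before typing them into the connection form. The function name `split_bootstrap` and the host name in the example are placeholders I made up, not values from my cluster.

```python
def split_bootstrap(bootstrap: str) -> list[tuple[str, int]]:
    """Split 'host1:port1,host2:port2' into a list of (host, port) pairs."""
    brokers = []
    for entry in bootstrap.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate trailing commas and extra whitespace
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {entry!r}")
        brokers.append((host, int(port)))
    return brokers


if __name__ == "__main__":
    # Placeholder value; copy the real one from "Cluster settings".
    example = "pkc-example.us-west2.gcp.confluent.cloud:9092"
    for host, port in split_bootstrap(example):
        print(f"host={host} port={port}")
```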

The next step is to create a so-called topic by clicking on the "Topic" menu on the left side:

Now click on "Create topic":

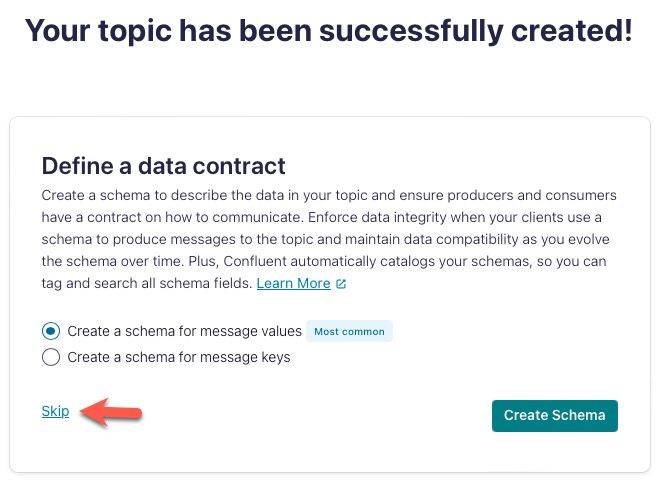

Now enter the name for the topic. Take care to use the same name as the CDS_View, otherwise it will not work! Leave the rest as it is and click on "create with defaults":
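Because the topic name has to match exactly, a quick check can save a failed run. This is a minimal sketch under two assumptions: the comparison is case-sensitive, and the naming rules are the general Apache Kafka ones (ASCII letters, digits, ".", "_" and "-", at most 249 characters). The function names and the view name `Z_CUSTOMER_VIEW` are hypothetical.

```python
import re

# General Kafka topic naming rules: letters, digits, '.', '_' and '-'.
_LEGAL_CHARS = re.compile(r"[A-Za-z0-9._-]+")
MAX_TOPIC_LENGTH = 249


def is_legal_topic_name(name: str) -> bool:
    """Check a name against the Kafka topic naming rules."""
    return (
        0 < len(name) <= MAX_TOPIC_LENGTH
        and name not in {".", ".."}
        and _LEGAL_CHARS.fullmatch(name) is not None
    )


def topic_matches_view(topic: str, cds_view: str) -> bool:
    """True if the topic name is legal and identical to the CDS view name."""
    return is_legal_topic_name(topic) and topic == cds_view


if __name__ == "__main__":
    # Hypothetical view name, only for illustration.
    print(topic_matches_view("Z_CUSTOMER_VIEW", "Z_CUSTOMER_VIEW"))
    print(topic_matches_view("z_customer_view", "Z_CUSTOMER_VIEW"))
```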

Again, I didn't enter anything in the next window and clicked on "skip":

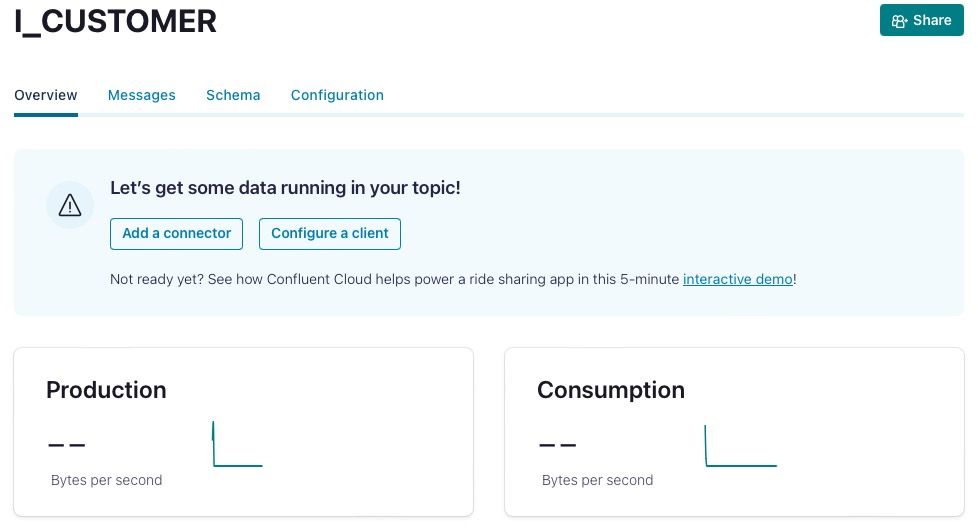

Now the Topic is created, and I can start the replication flow creation in SAP Datasphere:

In SAP Datasphere:

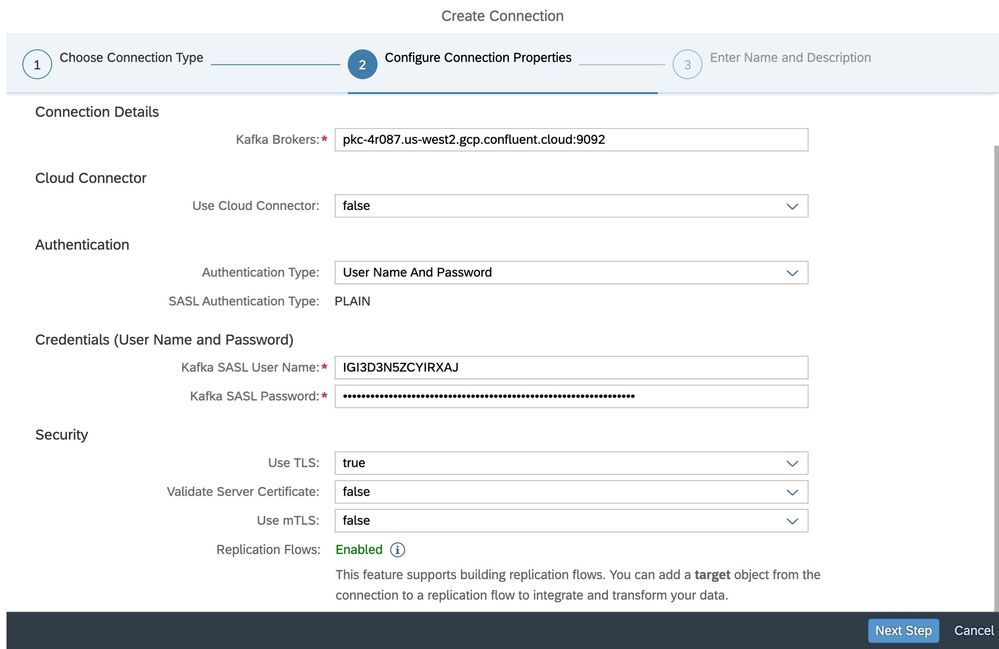

With all the settings I create the connection to Confluent in the connection menu:

Now comes an important point! When you use Confluent Cloud with API keys, it effectively uses the SASL/PLAIN authentication method from Kafka. SASL/PLAIN uses a simple username and password for authentication. Therefore, in the connection setup, the Kafka SASL User Name is the API key and the password is the API secret!

Additionally, you have to set the "USE TLS" option to "true", as Confluent Cloud uses TLS encryption for all application connections!
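For comparison, this is roughly how the same settings look for a standard Kafka client. The sketch builds a configuration dictionary with the property names used by librdkafka-based clients such as the confluent-kafka Python package. It is not what Datasphere does internally, and the function name and all values in the example are placeholders.

```python
def confluent_cloud_config(bootstrap_servers: str, api_key: str, api_secret: str) -> dict:
    """Build Kafka client settings for SASL/PLAIN over TLS."""
    return {
        "bootstrap.servers": bootstrap_servers,
        # SASL_SSL = SASL authentication over a TLS-encrypted connection.
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "PLAIN",
        # With Confluent Cloud API keys, the key is the user name
        # and the secret is the password.
        "sasl.username": api_key,
        "sasl.password": api_secret,
    }


if __name__ == "__main__":
    # Placeholder values; never hard-code a real secret.
    config = confluent_cloud_config(
        "pkc-example.us-west2.gcp.confluent.cloud:9092",
        "MY_API_KEY",
        "MY_API_SECRET",
    )
    print({key: value for key, value in config.items() if key != "sasl.password"})
```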

At the end just click on "Next Step" to create a Business Name for the connection to better identify it:

And the result looks like that:

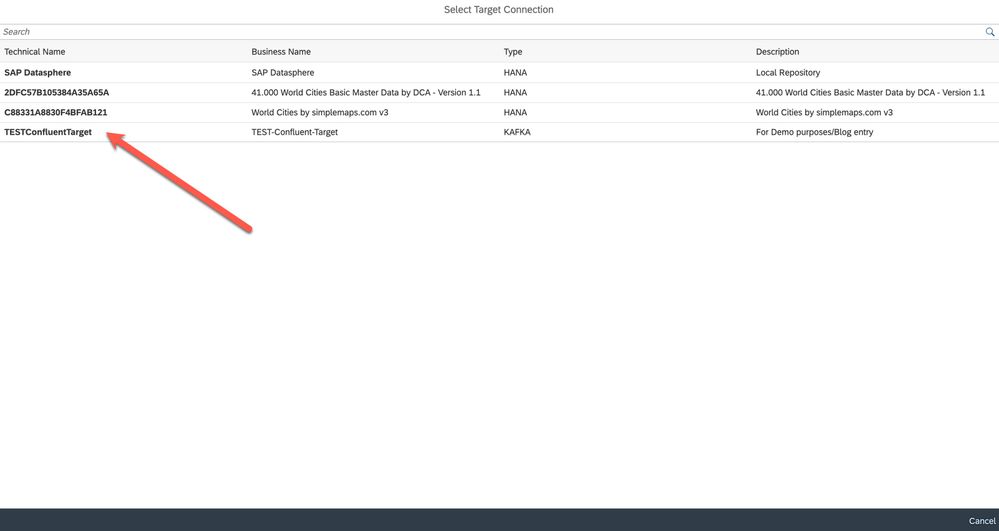

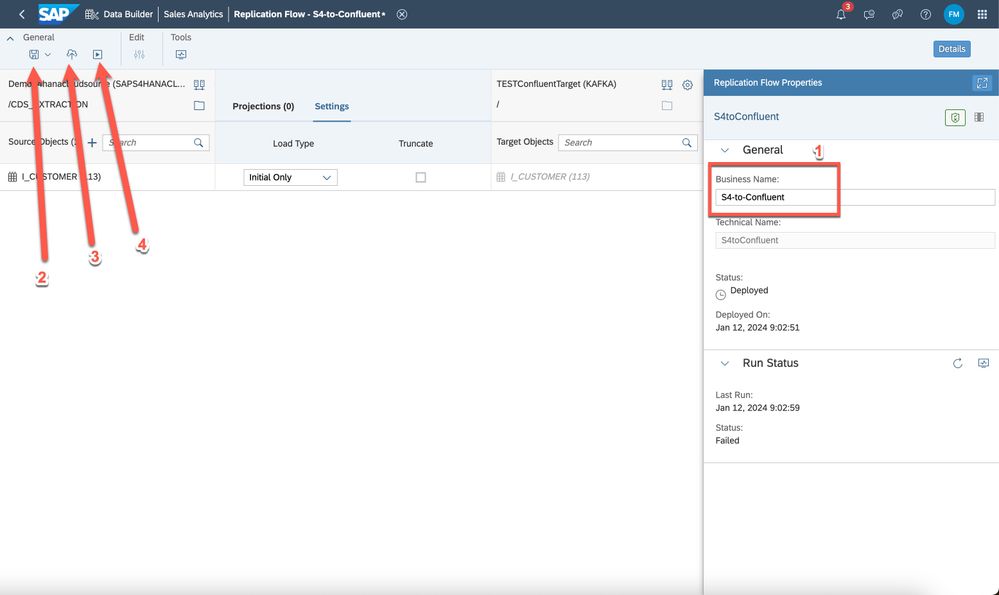

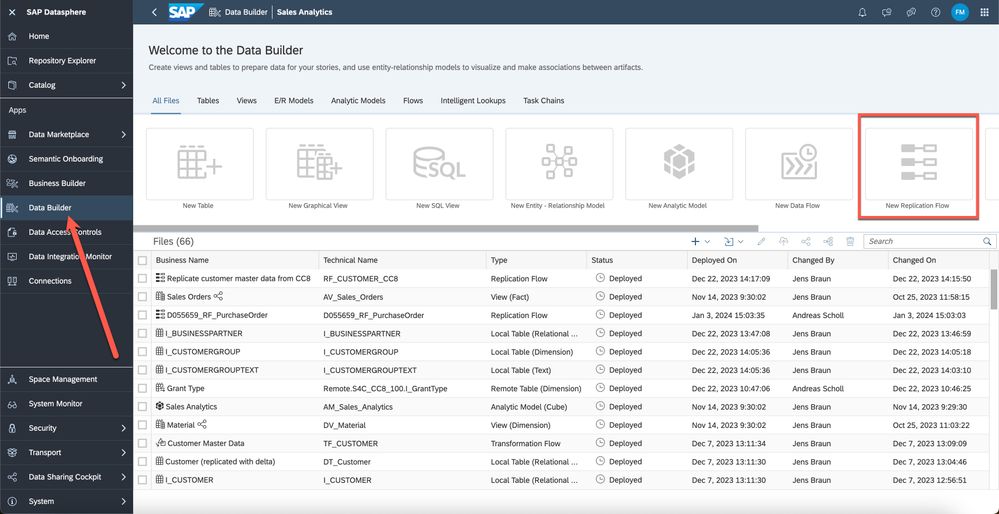

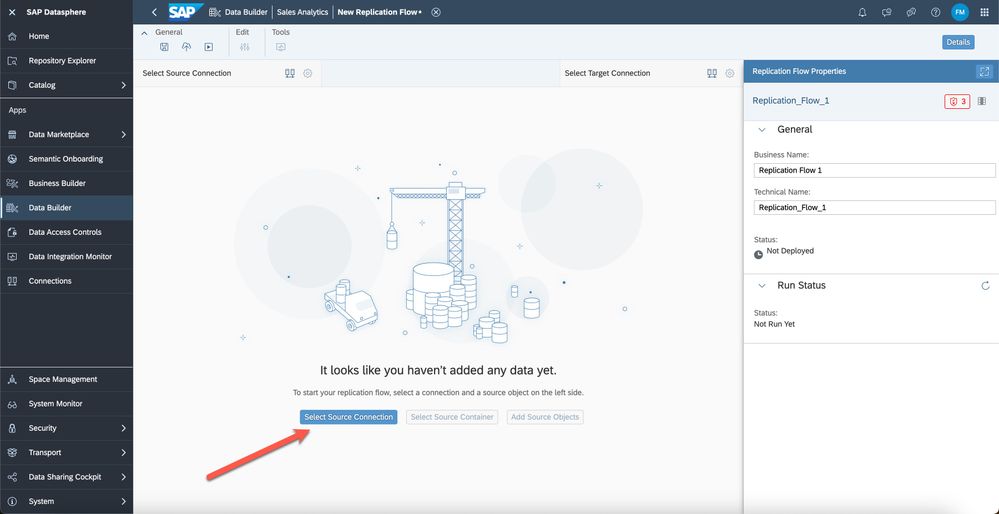

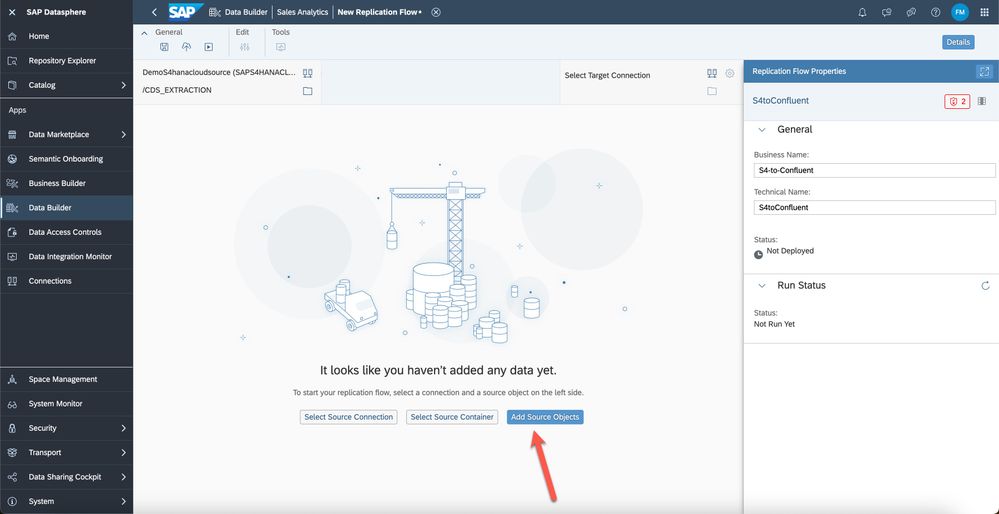

So now we can create a Replication Flow to get data out of S/4 HANA Cloud and replicate it to Confluent via a Kafka stream. To do that, I select the "Data Builder" and there the option "New Replication Flow":

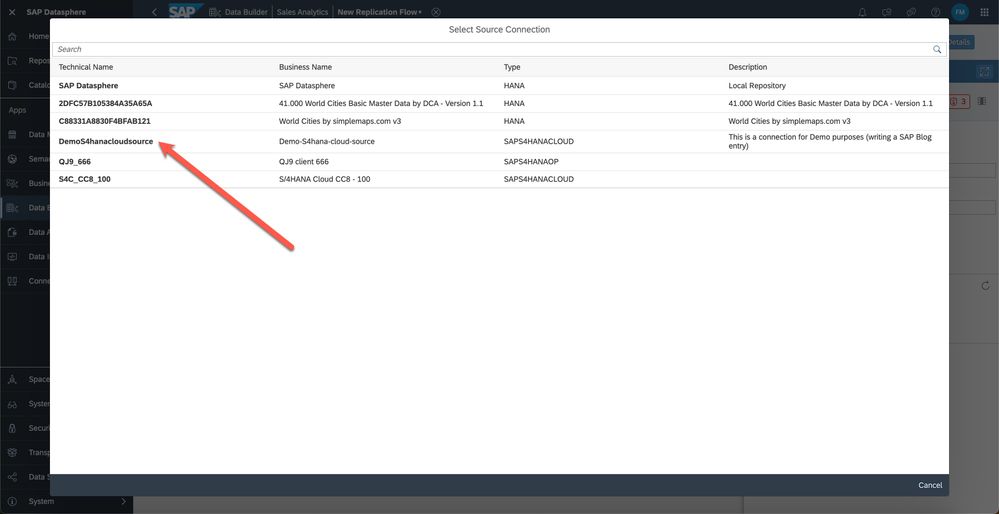

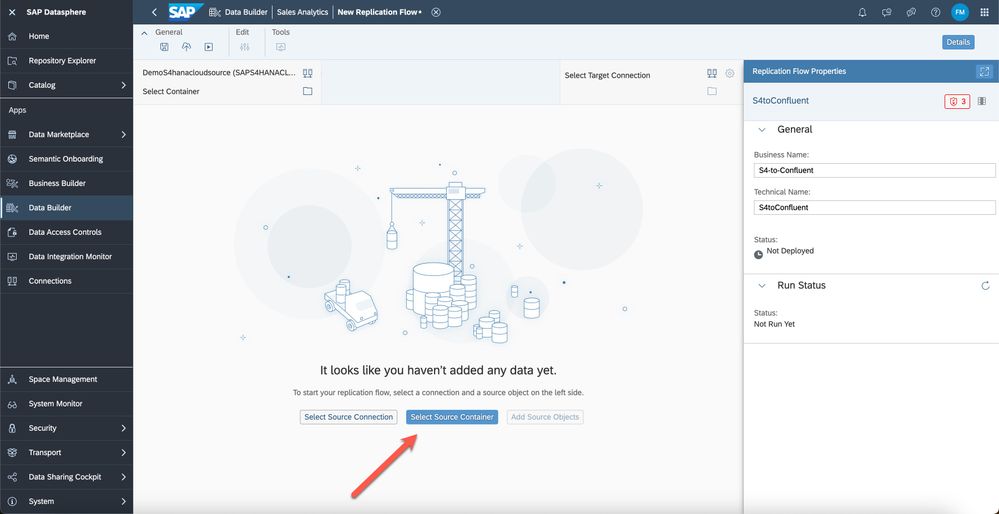

Now I have to select a source (our S/4 HANA connection) and a source container:

In the next step I have to select the source container:

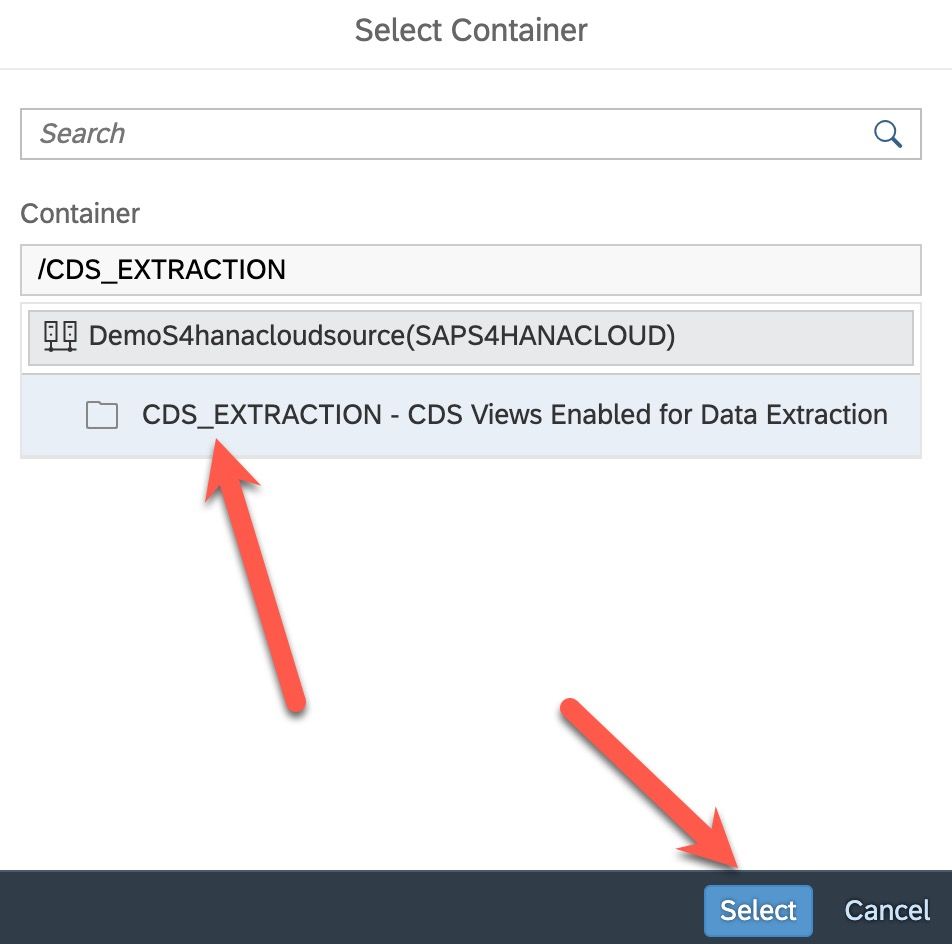

I only have one option, so I'm selecting "CDS_EXTRACTION" and hit the select button:

As the last step for the source, I need to select the objects I want to replicate:

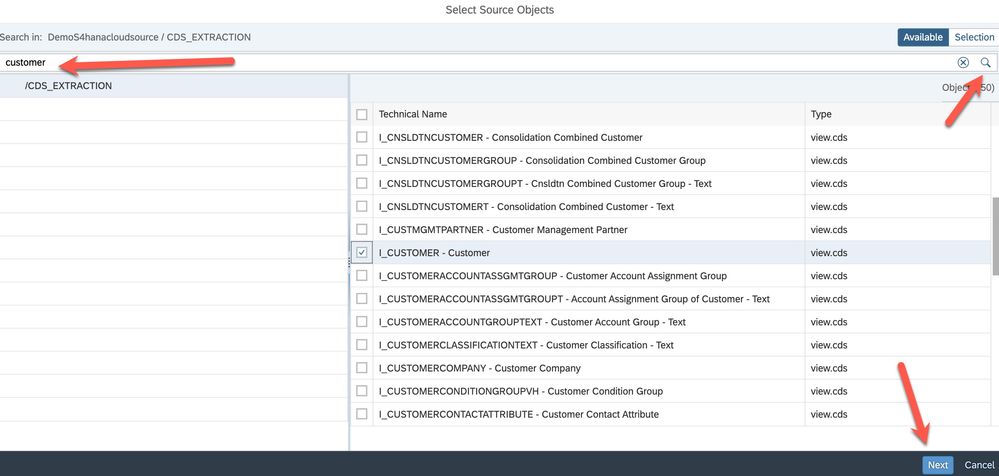

Here I have to search for "customer" because I know that I have data in this CDS_View and that's the data I want to move to Confluent.

If I had several tables in this CDS_view, I could select the ones I would like to replicate, but in my case, I only have one to choose from:

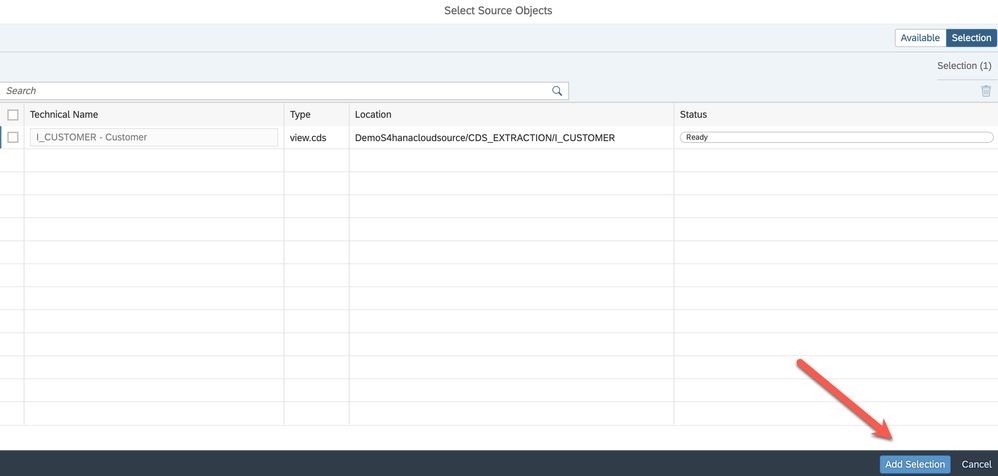

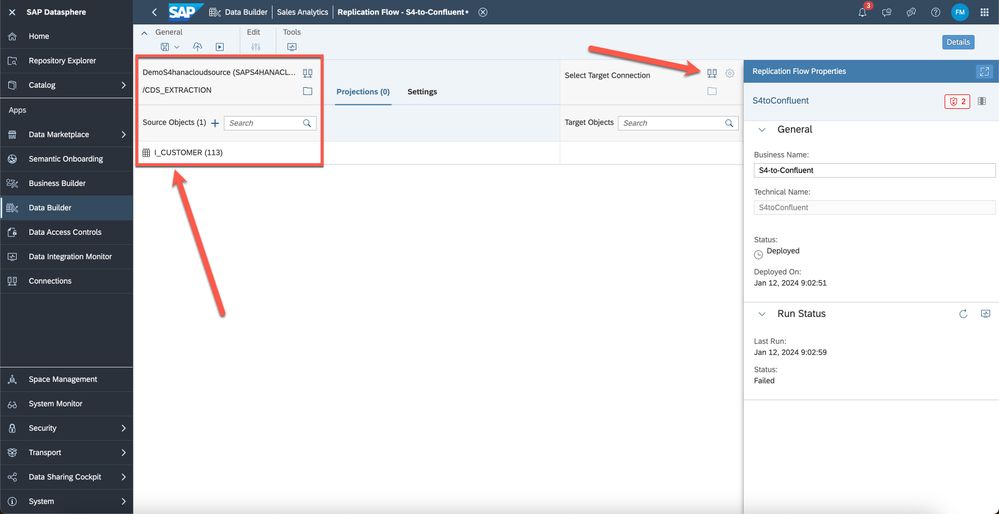

So now I have created the source I want to replicate, and the next step would be to select the target. If you check the screenshot in detail, you will see that the Run Status is "Failed". This is because I have already created the Flow and started it to see whether it is running. It will look different for you when you try it yourself...

Now the target is selected. I didn't select any "topic" in Confluent and I didn't filter the data from the source.

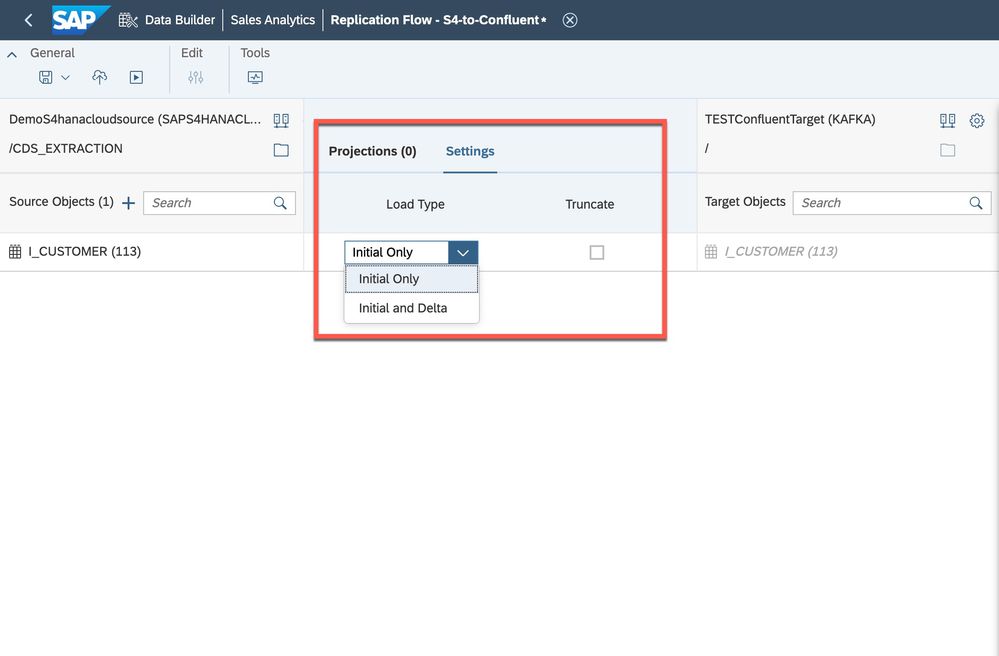

If necessary, I can change some settings:

Here we can select whether the data should be loaded as an initial load only or as initial AND delta:

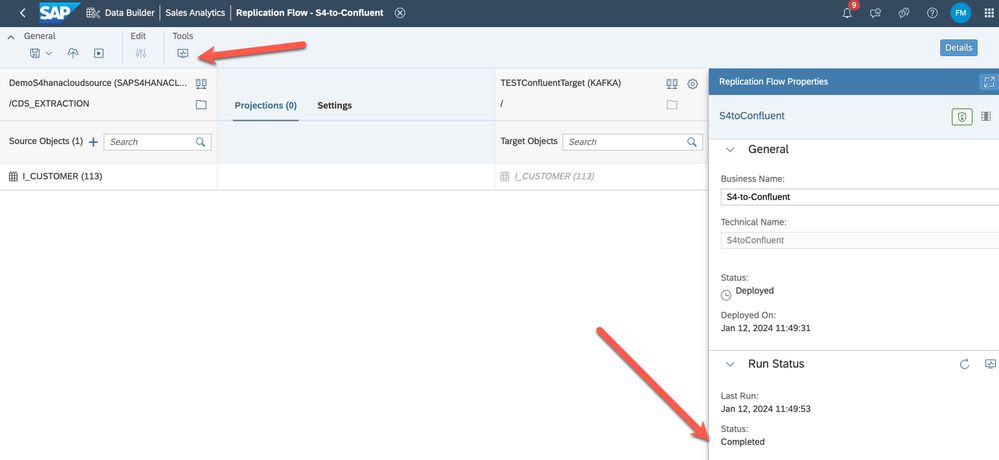

So now we have to do 4 things:

1.) Give a name for the Replication Flow. You can do that at the first step, before you create any source and target...

2.) Save the replication flow

3.) Deploy it and wait for the message that the Deployment is finished

4.) Start and see the Status changing to "Running"

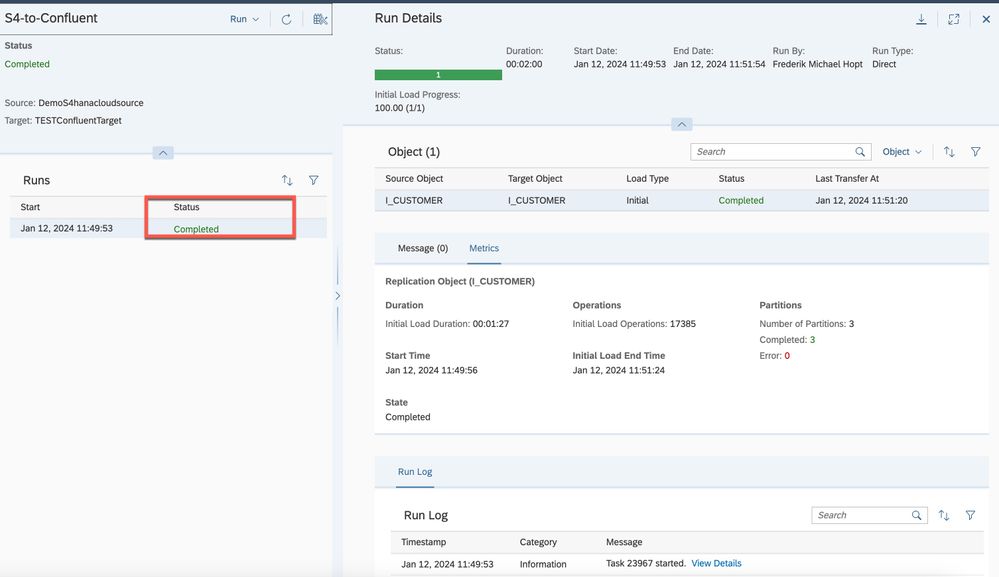

When everything was done correctly, you will see the "Run Status" change to "Completed", and you can click on the Monitoring button:

In the Monitoring itself you are able to see any error messages or the completed status with more information:

Last step is to check the data in Confluent Cloud Console:

By clicking on "Messages" in the Topic, you can see the data which was loaded:

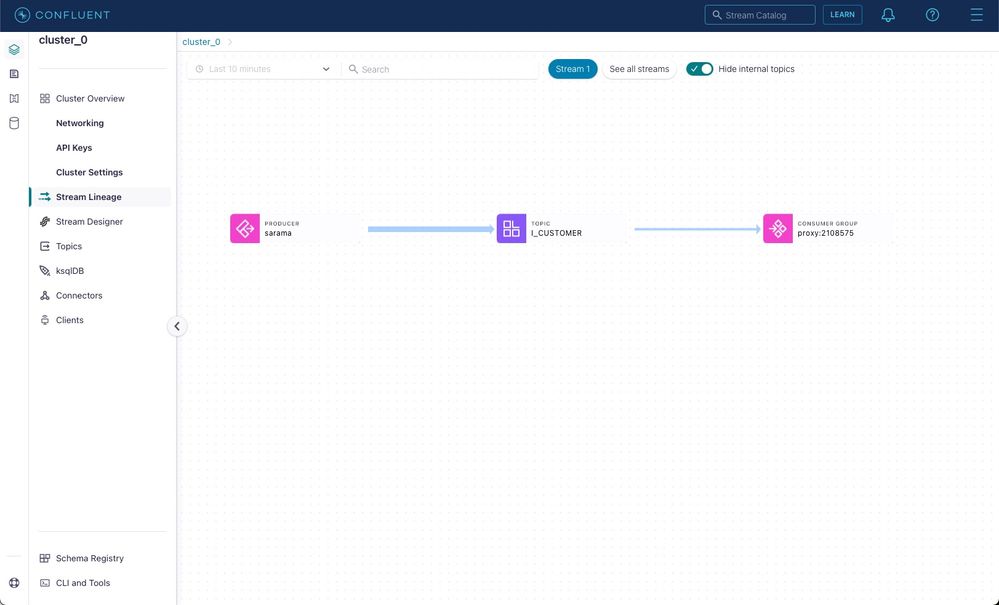
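The same check can be done outside the console with a small consumer. This is only a sketch under several assumptions: the third-party `confluent-kafka` Python package is installed, `config` holds the bootstrap server plus the SASL_SSL/PLAIN settings with the API key and secret, and the message values are JSON (if your replication flow writes another format, the values are returned as plain text). The function names and the group id `datasphere-check` are made up for this example.

```python
import json


def decode_value(raw: bytes):
    """Decode a message value, assuming UTF-8 JSON; fall back to plain text."""
    text = raw.decode("utf-8", errors="replace")
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return text


def read_messages(config: dict, topic: str, limit: int = 5, timeout: float = 10.0) -> list:
    """Read up to `limit` messages from the beginning of the topic."""
    # Imported here so decode_value works without the package installed.
    from confluent_kafka import Consumer  # pip install confluent-kafka

    consumer = Consumer({
        **config,
        "group.id": "datasphere-check",   # made-up consumer group name
        "auto.offset.reset": "earliest",  # start with the oldest messages
    })
    records = []
    try:
        consumer.subscribe([topic])
        while len(records) < limit:
            msg = consumer.poll(timeout)
            if msg is None:
                break  # nothing arrived within the timeout
            if msg.error():
                raise RuntimeError(str(msg.error()))
            value = msg.value()
            if value is not None:
                records.append(decode_value(value))
    finally:
        consumer.close()
    return records
```

Calling `read_messages(config, "<your topic name>")` should then return the first few replicated records, comparable to what the "Messages" tab shows.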

Additionally, you can see the Lineage:

So, everything was done successfully 🙂

OK, to summarize all steps:

- Create an account on Confluent Cloud

- Create a Confluent Cloud Cluster (Basic cluster type will be fine)

- Create an API Key to access the Confluent Cloud Cluster

- Create a Topic in the Confluent Cloud Cluster

- Create a source connection in SAP Datasphere

- Create a target connection in SAP Datasphere

- Create a replication flow in SAP Datasphere

- Save, deploy and start the replication flow in SAP Datasphere

Thanks, Perry Krol, for your help, and thank you for reading this blog!
