Cloud Integration provides customers an environment where they can add, adjust and operate integration scenarios according to their individual business needs. This implies that customers have to be aware of the resource consumption of their integration content and the respective resource limits in order to safeguard smooth and reliable message processing. Integration flows can depend on the availability of a number of resources, such as database storage, database connections, JMS resources, memory, CPU, network and more. A bottleneck in any of these resources can potentially impact message processing.

To address these requirements, we introduced the "Inspect" feature in SAP Cloud Integration. "Inspect" allows you to continuously assess the overall resource situation in your tenant and reveals the resource consumption of individual integration flows. Both the integration flows that act as top consumers and any integration flows that are impacted by a resource shortage can be easily identified. In addition, the linked product documentation provides hands-on guidance on how to adjust integration flow design to optimize resource consumption.

The "Inspect" feature is currently available as part of SAP Cloud Integration in the Cloud Foundry environment. It will also be enabled in the next product increment of the Cloud Integration capability of SAP Integration Suite.

"Inspect" enables customers to

- Quickly assess the overall resource situation

- Reveal trends in resource usage

- Break down resource usage per integration flow

- Inspect hot spots in resource consumption

- Inspect the impact of resource bottlenecks on message processing

- Fix resource bottlenecks based on concrete guidance

There are many factors that can increase resource consumption, be it temporarily, or in a longer-lasting trend:

- Changes in integration flows increasing the resource footprint

- Onboarding of new integration flows with significant resource footprint

- Increasing throughput

- Processing of huge messages

- Receipt of invalid messages (e.g. messages failing in a mapping step)

- Temporary unavailability of external back-ends

- ... and more

"Inspect" helps you identify and analyze these issues and assists you in mitigating them efficiently.

The Web UI provides a consistent user experience across all resource types, based on the following structure:

- A landing page contains one tile per resource, each showing a summary of resource consumption, resource limits, and criticality. The landing page alone already enables an assessment of overall resource consumption and criticality.

- Clicking a tile opens an overview chart that shows the resource usage trends at tenant level

- From this chart, you can drill down into the resource usage per integration flow

The landing page shows one tile per resource, grouped by resource types. In particular, all resources pertaining to databases are grouped under "Database":

Landing page

A resource tile serves two purposes: it enables an assessment of the resource situation, and it enables a drill-down for further inspection. But first let's have a look at the contents of the tile, taking "Data Store" as an example:

Resource tile

Let's now take a tour through the Web UI based on concrete user stories.

First, let's have a look at database connections. We express the usage of database connections as a percentage: 100% usage means that all available connections are in use.
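As a minimal sketch of this definition, the usage percentage can be computed from the number of connections in use and the size of the connection pool. The function name and its parameters are hypothetical and only illustrate the arithmetic; they are not part of any Cloud Integration API.

```python
def connection_usage_percent(in_use: int, pool_size: int) -> float:
    """Return database connection usage as a percentage of the pool.

    Hypothetical helper: 100.0 means every available connection is in use.
    """
    if pool_size <= 0:
        raise ValueError("pool_size must be positive")
    if not 0 <= in_use <= pool_size:
        raise ValueError("in_use must be between 0 and pool_size")
    return 100.0 * in_use / pool_size


# Made-up numbers: 45 of 60 connections are currently in use.
print(connection_usage_percent(45, 60))  # 75.0
```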

Looking at the following example, we immediately understand that resource usage is fine, as we are far from approaching the resource limit:

Database connections usage is uncritical

This, however, does not look fine:

Database connections usage is critical

Let's inspect why we are seeing this situation by clicking the tile. This will take us to an overview chart showing the trends of

- Usage of database connections

- The number of successful attempts in getting a connection, which is an indicator of how intensively the database is used for message processing

- The number of failed attempts, which may ultimately cause failures in message processing

Critical usage of database connections

In this case we see that, even though usage of database connections is usually quite moderate, there are points in time where the usage reaches a critical level. Therefore, we might want to inspect which integration flows are top consumers of database connections. We can find out by clicking on the red bars and choosing "Inspect Usage". This will take us to an overview of

- The integration flows acting as top resource consumers

- Integration flows that are impacted by unavailability of database connections

The first chart on this screen shows the top-consuming integration flows:

Top-consuming integration flows

The vertical axis shows the names of the integration flows that are top consumers of database connections. The time is displayed on the horizontal axis, and the blue shades indicate the usage of database connections. We see that the integration flow at the top clearly stands out in terms of resource usage, which is close to 100%.

The second chart on this screen reveals which integration flows experienced failed attempts to retrieve a database connection:

Impacted integration flows

In this chart, semantic coloring illustrates the severity. We define severity as the count of failed attempts relative to the count of successful attempts to retrieve a database connection.
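The severity definition can be sketched as a simple ratio. This is an illustrative helper under stated assumptions: the color thresholds used by the Web UI are not given here, so none are assumed, and returning infinity when no attempt succeeded is a choice made only for this sketch.

```python
def failure_severity(failed: int, successful: int) -> float:
    """Ratio of failed to successful attempts to get a database connection.

    Hypothetical helper mirroring the definition in the text; it does not
    map the ratio to any particular color or status.
    """
    if failed < 0 or successful < 0:
        raise ValueError("counts must be non-negative")
    if successful == 0:
        # Assumption for this sketch: failures without any success are
        # treated as unbounded severity; no attempts at all means 0.
        return float("inf") if failed > 0 else 0.0
    return failed / successful


# Made-up counts: 30 failed and 120 successful attempts in one time slot.
print(failure_severity(30, 120))  # 0.25
```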

In order to inspect the impact on message processing, you can trigger a contextual navigation to the "Monitor Message Processing" Web UI by clicking the respective cell and choosing "Show Messages":

Failed messages due to unavailability of database connections

Indeed, the failure indicates unavailability of database connections at that time.

Now that we have looked at the impact, let's see how we can fix the root cause. To this end, we return to the chart showing the top-consuming integration flow, click the cell with the dark blue shade, and select "Show Messages":

Show messages processed by top-consuming integration flow

This again takes us to the "Monitor Message Processing" Web UI, but this time for the top-consuming integration flow:

Messages processed by the top-consuming integration flow

First, we notice that although message processing completes successfully, processing a message takes quite a while. Now let's have a look at the integration flow itself by choosing "View deployed artifact". This takes us to the integration flow designer, where we can inspect the integration flow:

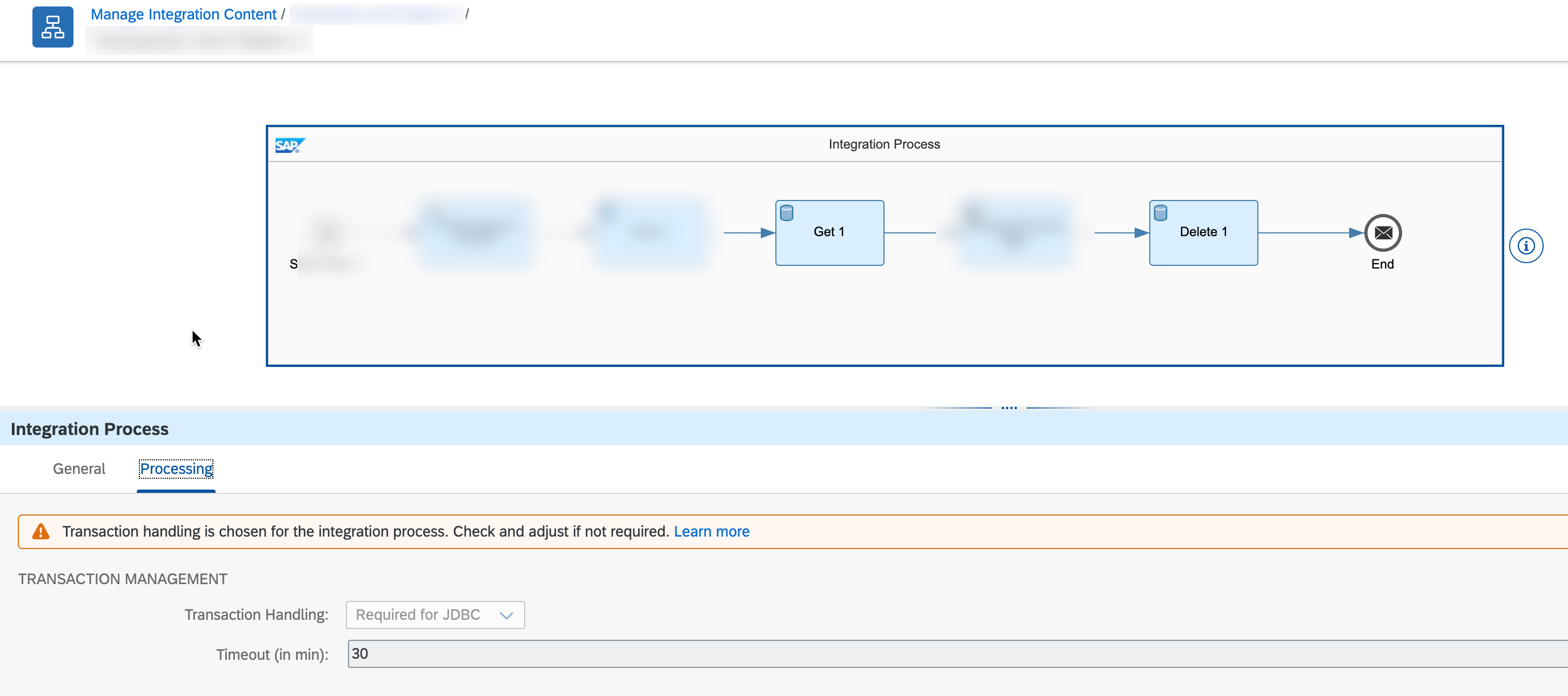

The top-consuming integration flow

We notice that database transactions are being used, as chosen via "Required for JDBC" on the main integration process. As explained in the documentation linked under "Learn more", this means that a database connection is held for the entire processing time of each message, so that all database operations in the flow are transactional; in this case, that is around 30 minutes.

This implies a considerable risk of exhausting all available database connections. A common pitfall is to configure "Required for JDBC" even though transactionality is not required, so it is worth reviewing whether the integration flow actually needs it. Let's suppose that we don't require database transactions in this example, so we change the "Transaction Handling" setting to "Not Required". The Design Guidelines can help you understand when transaction support is required.
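Why a long hold time exhausts the pool can be estimated with Little's law (average number in the system = arrival rate x time in the system). The sketch below assumes that each message holds exactly one connection for its whole processing time; the message rate and the one-second alternative are invented numbers, not measurements from the example. The result is an average, so peaks can be considerably higher.

```python
def avg_connections_held(messages_per_minute: float, hold_minutes: float) -> float:
    """Estimate the average number of database connections held concurrently.

    Applies Little's law, assuming each message holds one connection for
    `hold_minutes` (hypothetical model, not product internals).
    """
    return messages_per_minute * hold_minutes


# Hypothetical load: one message per minute, each holding a connection for
# about 30 minutes, as with "Required for JDBC" in the example above.
with_tx = avg_connections_held(1.0, 30.0)
# Same load if a connection were only needed for about one second per message.
without_tx = avg_connections_held(1.0, 1.0 / 60.0)
print(with_tx, round(without_tx, 3))  # 30.0 0.017
```

Even a modest message rate therefore ties up dozens of connections on average when each one is held for half an hour.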

After re-deploying the integration flow, we can verify that the problem is gone, i.e. the usage of database connections is back at an uncritical level.

This improved our integration flow: it can now process more messages in parallel without impacting other messages.

Let's now take a look at another case:

Data Store usage is critical

This is also something we should urgently look into to avoid running out of storage space for our data stores. Clicking the tile reveals the usage trends:

Data Store overview

The bars represent the storage consumed by data stores, and the line chart explicitly indicates the share consumed by global data stores. As we are close to the limit of 35000 MB, this limit (or "entitlement") is displayed as well, to indicate criticality. Again, we can drill down by clicking the red bar and choosing "Inspect Usage".
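The quantities in this chart relate through simple arithmetic: the local share is the total (bar) minus the global share (line), and criticality follows from comparing the total with the 35000 MB entitlement. The helper and the sample figures below are hypothetical and only illustrate that arithmetic.

```python
def storage_breakdown(total_mb: float, global_mb: float, entitlement_mb: float) -> dict:
    """Split data store storage into local and global shares and compare with the limit.

    Hypothetical helper; the inputs used below are invented for illustration.
    """
    if not 0 <= global_mb <= total_mb:
        raise ValueError("global_mb must be between 0 and total_mb")
    if entitlement_mb <= 0:
        raise ValueError("entitlement_mb must be positive")
    return {
        "local_mb": total_mb - global_mb,
        "global_mb": global_mb,
        "percent_of_entitlement": 100.0 * total_mb / entitlement_mb,
        "headroom_mb": entitlement_mb - total_mb,
    }


# Invented example: 33250 MB used in total, 5000 MB of it by global data stores.
print(storage_breakdown(33250, 5000, 35000))
```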

This again reveals charts that show the top consumers of database storage:

Top-consuming Data Stores

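There is one chart showing storage consumption for local data stores, and another for global data stores. The difference between local and global data stores lies in their visibility: a local data store is visible only to the integration flow that uses it, while a global data store can be accessed by all integration flows on the tenant.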

The data store names are displayed on the vertical axis, and the shades represent the amount of storage consumed. To inspect why we see this huge amount of storage consumption, we can navigate via "Show Data Stores" to inspect details for the selected data store:

Details about the selected Data Store

This reveals that a huge number of entries have accumulated in the data store. We can now inspect the integration flow, either by clicking one of the Message ID links, or based on the integration flow name that is displayed next to the data store name. This reveals the following integration flow design:

Integration flow containing the Data Store

We can easily identify that the data store is used in two flow steps: the first writes the message to the data store, while the second retrieves entries for further processing. However, in the second flow step, the option "Delete on Completion" has not been selected (refer to the product documentation for an explanation):

Configuration option Delete on completion

This means that entries are removed from that data store only when they expire. Looking again at the first integration flow step, we can see that the expiration period is 90 days:

Large value for Expiration Period

Therefore, it will take a long time until entries are cleaned up, and we can indeed expect a large number of messages to accumulate in the data store meanwhile, unnecessarily consuming storage space.
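The effect of the expiration period can be estimated with the same steady-state reasoning as above: stored entries = write rate x time each entry is retained. The sketch uses a simplified, hypothetical model in which every entry is written once and read successfully some time later; with "Delete on Completion" it disappears when read, otherwise it stays until it expires. The write rate and the one-hour consumption delay are invented values.

```python
def steady_state_entries(entries_per_day: float,
                         expiration_days: float,
                         delete_on_completion: bool,
                         days_until_consumed: float = 0.0) -> float:
    """Estimate how many entries sit in a data store once the flow runs steadily.

    Simplified model (an assumption, not product behavior): each entry is
    retained until it is consumed (with delete on completion) or until it
    expires (without it), whichever applies first.
    """
    if delete_on_completion:
        retained_days = min(days_until_consumed, expiration_days)
    else:
        retained_days = expiration_days
    return entries_per_day * retained_days


# Hypothetical flow writing 10,000 entries per day, consumed within about one hour.
print(steady_state_entries(10_000, 90, delete_on_completion=False))
print(steady_state_entries(10_000, 90, delete_on_completion=True,
                           days_until_consumed=1 / 24))
```

With a 90-day expiration and no deletion on completion, the store holds roughly 90 days' worth of entries, compared with about one hour's worth when entries are deleted as soon as they are processed.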

To reduce resource consumption, messages should always be consumed from data stores in a timely manner, meaning they should be processed as soon as possible and deleted thereafter. In the above example, the "Delete on Completion" option should be used.

The "Expiration Period" should also be reduced; otherwise, messages might accumulate over a long time interval (90 days in the example above). To free up the consumed storage immediately, consider deleting the entries if they are no longer required (refer to the product documentation for instructions).

Let's conclude our tour by looking at the usage of database transactions:

Database transactions

Transactions consume resources in the database, in particular if they are kept open for minutes or even hours. Ideally, a database transaction should complete within milliseconds.

While it is hardly possible to impose a hard resource limit on database transactions, we can still assess criticality based on transaction duration. We assign a warning status when the maximum transaction duration is between 5 and 60 minutes, and a critical status when it exceeds 60 minutes.
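The duration-based rule can be sketched as a small classification function. The 5- and 60-minute thresholds come from the text; whether the bounds are inclusive is not stated, so treating exactly 5 and exactly 60 minutes as "warning" is an assumption, as is the label "ok" for the remaining case.

```python
from datetime import timedelta

WARNING_THRESHOLD = timedelta(minutes=5)
CRITICAL_THRESHOLD = timedelta(minutes=60)


def transaction_status(max_duration: timedelta) -> str:
    """Map the maximum transaction duration to a status.

    Assumption: both bounds of the 5 to 60 minute range count as "warning";
    only durations above 60 minutes are "critical".
    """
    if max_duration > CRITICAL_THRESHOLD:
        return "critical"
    if max_duration >= WARNING_THRESHOLD:
        return "warning"
    return "ok"


print(transaction_status(timedelta(milliseconds=200)))  # ok
print(transaction_status(timedelta(minutes=30)))        # warning
print(transaction_status(timedelta(hours=2)))           # critical
```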

Clicking the tile again reveals the usage trend:

Transactions overview

We see that this situation has persisted for a while. Let's choose "Inspect Usage" to see the usage per integration flow:

Top-consuming integration flows

By clicking a cell, we can navigate via "Show Max Duration Message" to the message processing log that exhibits the maximum transaction duration:

Message processed in the transaction with maximum duration

Now we may inspect the integration flow via "View deployed artifact":

Top-consuming integration flow using transactions

This integration flow retrieves a message from the data store, applies some processing steps, and finally deletes it from the data store once the message has been processed successfully. If message processing fails, the message is to be retained in the data store. To ensure this, the Integration Process shape has been configured to use JDBC transactions.

Here we can use the same configuration option as in the data store example above, this time in the "Get" data store operation:

Delete on completion option in Data Store Operation Get

We can therefore select the configuration option "Delete On Completion" and, for Transaction Handling, replace the option "Required for JDBC" chosen above with "Not Required".
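To see why "Delete On Completion" can replace the transaction here, consider a toy model of the behavior described above: the entry is removed only after processing finishes successfully, so a failure leaves it in place without keeping anything open during processing. The class and method names are hypothetical, and this is a simplified illustration, not the Cloud Integration implementation.

```python
from typing import Callable, Dict


class InMemoryDataStore:
    """Toy stand-in for a data store (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self._entries: Dict[str, str] = {}

    def write(self, entry_id: str, payload: str) -> None:
        self._entries[entry_id] = payload

    def get_and_process(self, entry_id: str,
                        process: Callable[[str], None],
                        delete_on_completion: bool) -> None:
        """Read an entry and run the remaining flow steps on it.

        The delete is deferred until `process` returns without raising.
        If processing fails, the exception propagates and the entry stays
        in the store for a later retry.
        """
        payload = self._entries[entry_id]
        process(payload)  # raises on failure, so the delete below is skipped
        if delete_on_completion:
            del self._entries[entry_id]

    def __contains__(self, entry_id: str) -> bool:
        return entry_id in self._entries


def failing_step(payload: str) -> None:
    raise RuntimeError("simulated mapping failure")


store = InMemoryDataStore()
store.write("a", "order-1")
store.get_and_process("a", lambda payload: None, delete_on_completion=True)
print("a" in store)  # False: processed successfully, entry deleted

store.write("b", "order-2")
try:
    store.get_and_process("b", failing_step, delete_on_completion=True)
except RuntimeError:
    pass
print("b" in store)  # True: processing failed, entry retained
```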

After re-deploying the integration flow, we can verify in "Inspect" that the maximum transaction duration has indeed dropped to a minimal value.

Conclusion

We introduced the "Inspect" feature to help customers assess the resource consumption in their tenants and troubleshoot resource-related issues in their integration content. If you find this helpful, stay tuned for further resource types that we will add in upcoming increments.

Please also see our contribution to the webinar "What's New in SAP BTP" (starting at 20:32).

