Hey ABAP Cloud please let me save my data export t...


👉🏿 Back to blog series or jump to GitHub repos 🧑🏽‍💻 | << Part 3

Hello and welcome back to your ABAP Cloud with Microsoft integration journey. Part 3 of this series covered defining a modern GraphQL API on top of your ABAP Cloud RAP APIs to expose a single API endpoint that can consume many different OData, OpenAPI, or REST endpoints at the same time.

Today will be different. Sparked by an SAP Community conversation with dpanzer and lars.hvam, including a community question by rammel on working with files in ABAP Cloud, I was inspired to propose a solution for the question below:

Before we dive into my proposal, here is a list of alternative options I came across, as food for thought for your own research.

| Option | Integration approach from ABAP Cloud |
|---|---|
| Mount a file system to a Cloud Foundry app | Create a custom API hosted by your CF app and call it via the HTTP client from ABAP Cloud |
| Connect to an SFTP server via SAP Cloud Integration | Design an iFlow and call it via the HTTP client from ABAP Cloud |
| Integrate with SAP Document Management Service | Call the SAP BTP REST APIs from ABAP Cloud directly |
| Integrate with SAP BTP Object Store, exposing hyperscaler storage services via SDKs | Create a custom API hosted by your CF or Kyma app and call it via the HTTP client from ABAP Cloud |
| Serve directly from ABAP code via XCO | Base64-encode your file content, wrap it into ABAP code, and serve it as an XCO class. Lars likes it at least 😜. There were sarcastic smiles and a few more “oh please” involved, so don't take it too seriously. |
| Raise an influencing request at SAP to release something like the former NetWeaver MIME repository | Live the dream |

A common theme among most of these options is the need to interact with them from ABAP Cloud via the built-in HTTP client. On the downside, some options require an additional app on CF or Kyma to orchestrate the storage interactions.

Ideally, ABAP Cloud integrates directly with the storage account to reduce complexity and maintenance.

As you may have guessed, my own proposal focuses on direct integration with Azure Blob Storage.

To get started with this sample, I ran through the SAP developer tutorial “Create Your First ABAP Cloud Console Application” and steps 1-6 of “Call an External API and Parse the Response in SAP BTP ABAP Environment”. This way, you can easily reproduce the setup from an official reference.

Got your hello world in Eclipse? Great, onwards and upwards in the stack we go then 🪜. Or down to the engine room, depending on your perspective.

All the blob storage providers offer various options to authenticate with the service. See the current coverage for Azure here.

Fig.1 Screenshot of supported authentication methods for Azure Storage

The Microsoft Entra ID option offers superior security capabilities compared to access keys – which can be leaked or lost for example – and is therefore recommended by Microsoft.

For developer convenience, I left the code for the simpler-to-configure “Shared Access Signature (SAS) tokens” commented out in the shared GitHub repository. SAS tokens can be created from the Azure portal with two clicks.
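For orientation, here is a minimal sketch of the SAS variant in ABAP Cloud. It assumes a SAS token copied from the Azure portal and a blob name that needs no URL encoding. The class `zcl_blob_sas_sketch` and its methods are hypothetical and not taken from the GitHub repository. Because the SAS token travels in the query string, no Authorization header is needed, and `cl_http_destination_provider=>create_by_url` can build an ad hoc destination from the full URL.

```abap
CLASS zcl_blob_sas_sketch DEFINITION PUBLIC FINAL CREATE PUBLIC.
  PUBLIC SECTION.
    " Builds https://ACCOUNT.blob.core.windows.net/CONTAINER/BLOB?SAS
    CLASS-METHODS build_blob_url
      IMPORTING iv_account    TYPE string
                iv_container  TYPE string
                iv_blob       TYPE string
                iv_sas_token  TYPE string
      RETURNING VALUE(rv_url) TYPE string.

    " Reads a blob as text; the SAS query string is the only authorization
    CLASS-METHODS read_blob
      IMPORTING iv_url            TYPE string
      RETURNING VALUE(rv_content) TYPE string
      RAISING   cx_http_dest_provider_error
                cx_web_http_client_error
                cx_web_message_error.
ENDCLASS.

CLASS zcl_blob_sas_sketch IMPLEMENTATION.
  METHOD build_blob_url.
    " A token copied from the portal may start with '?'; strip it so it is not doubled
    DATA(lv_sas) = shift_left( val = iv_sas_token sub = `?` ).
    rv_url = |https://{ iv_account }.blob.core.windows.net/{ iv_container }/{ iv_blob }?{ lv_sas }|.
  ENDMETHOD.

  METHOD read_blob.
    " Ad hoc destination built from the full URL, no stored credentials involved
    DATA(lo_client) = cl_web_http_client_manager=>create_by_http_destination(
                        cl_http_destination_provider=>create_by_url( i_url = iv_url ) ).
    DATA(lo_response) = lo_client->execute( if_web_http_client=>get ).
    rv_content = lo_response->get_text( ).
    lo_client->close( ).
  ENDMETHOD.
ENDCLASS.
```

In a console class, you would combine both methods inside a TRY block and print the result with `out->write( )`. Keep in mind that the SAS token itself is a credential, so it should not end up hard-coded in production code.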

The shared key approach requires a bit of hashing and marshaling in ABAP. Use the ABAP SDK for Azure to accelerate that part of your implementation. Check the “get_sas_token” method for reference.

Anonymous read access is only acceptable for less sensitive content, such as static image files, because anyone who has the URL can access it.

For an enterprise-grade solution, however, you will need a more secure protocol like OAuth2 with Microsoft Entra ID.

Technically, you could fetch the OAuth2 token with plain HTTP client requests from ABAP Cloud; see this blog by jacek.wozniczak, for instance. However, it is recommended to use the steampunk “Communication Management” to keep the configuration out of your code. Think “external configuration store”. It also reduces the complexity of your ABAP code, because Communication Management handles the OAuth2 flow for you.
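For comparison, here is a minimal sketch of that plain HTTP client variant: an OAuth2 client credentials request against the Microsoft Entra ID v2.0 token endpoint, asking for the `https://storage.azure.com/.default` scope of Azure Storage. The class and method names are hypothetical, the JSON parsing assumes `/ui2/cl_json` is available in your ABAP Cloud release, and a real solution must not hard-code or log the client secret. This is exactly the plumbing that Communication Management takes off your hands.

```abap
CLASS zcl_entra_token_sketch DEFINITION PUBLIC FINAL CREATE PUBLIC.
  PUBLIC SECTION.
    CONSTANTS c_storage_scope TYPE string VALUE `https://storage.azure.com/.default`.

    " Subset of the token response fields
    TYPES: BEGIN OF ty_token,
             token_type   TYPE string,
             expires_in   TYPE i,
             access_token TYPE string,
           END OF ty_token.

    CLASS-METHODS token_url
      IMPORTING iv_tenant_id  TYPE string
      RETURNING VALUE(rv_url) TYPE string.

    CLASS-METHODS fetch_token
      IMPORTING iv_tenant_id           TYPE string
                iv_client_id           TYPE string
                iv_client_secret       TYPE string
      RETURNING VALUE(rv_access_token) TYPE string
      RAISING   cx_http_dest_provider_error
                cx_web_http_client_error
                cx_web_message_error.
ENDCLASS.

CLASS zcl_entra_token_sketch IMPLEMENTATION.
  METHOD token_url.
    rv_url = |https://login.microsoftonline.com/{ iv_tenant_id }/oauth2/v2.0/token|.
  ENDMETHOD.

  METHOD fetch_token.
    DATA ls_token TYPE ty_token.

    DATA(lo_client) = cl_web_http_client_manager=>create_by_http_destination(
                        cl_http_destination_provider=>create_by_url( i_url = token_url( iv_tenant_id ) ) ).
    DATA(lo_request) = lo_client->get_http_request( ).

    " Client credentials grant sent as URL-encoded form body
    lo_request->set_header_field( i_name  = 'Content-Type'
                                  i_value = 'application/x-www-form-urlencoded' ).
    lo_request->set_text( i_text = |grant_type=client_credentials|
      && |&client_id={ escape( val = iv_client_id format = cl_abap_format=>e_url_full ) }|
      && |&client_secret={ escape( val = iv_client_secret format = cl_abap_format=>e_url_full ) }|
      && |&scope={ escape( val = c_storage_scope format = cl_abap_format=>e_url_full ) }| ).

    DATA(lo_response) = lo_client->execute( if_web_http_client=>post ).
    DATA(ls_status) = lo_response->get_status( ).
    DATA(lv_json) = lo_response->get_text( ).
    lo_client->close( ).

    " Return an empty token on any non-200 answer; inspect lv_json when debugging
    IF ls_status-code <> 200.
      RETURN.
    ENDIF.

    /ui2/cl_json=>deserialize( EXPORTING json = lv_json CHANGING data = ls_token ).
    rv_access_token = ls_token-access_token.
  ENDMETHOD.
ENDCLASS.
```

The returned token would then go into an `Authorization: Bearer` header on each storage request, and you would have to re-fetch it before `expires_in` runs out yourself. That bookkeeping is one more reason to prefer Communication Management or a destination.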

| 📢Note: SAP will release the capability to maintain OAuth2 scopes in communication arrangements for your ABAP Cloud requests with the upcoming SAP BTP, ABAP environment release 2402. |

So, until then, you will need to use the BTP Destination service. Target destinations living on subaccount level by calling them like so (omitting i_service_instance_name; thank you thwiegan for calling that out here):

```abap
" Destination maintained on subaccount level
destination = cl_http_destination_provider=>create_by_cloud_destination(
                i_name       = |azure-blob|
                i_authn_mode = if_a4c_cp_service=>service_specific ).
```

Or call destinations living on Cloud Foundry spaces like so:

```abap
" Destination maintained in a Cloud Foundry space, reached via the
" service instance of the SAP_COM_0276 communication arrangement
destination = cl_http_destination_provider=>create_by_cloud_destination(
                i_name                  = |azure-blob|
                i_service_instance_name = |SAP_BTP_DESTINATION|
                i_authn_mode            = if_a4c_cp_service=>service_specific ).
```

For the Cloud Foundry variant above, you need to create a communication arrangement for the “standard” communication scenario SAP_COM_0276. My generated arrangement ID in this case was “SAP_BTP_DESTINATION”.

Be aware that SAP has marked the BTP destination approach as deprecated for BTP ABAP, and we can now see why: doing it all from the single initial communication arrangement will be much nicer than carrying the overhead of additional services and arrangements. Looking forward to that in February 😎

Not everything is “bad” about using BTP destinations with ABAP Cloud, though. They offer management APIs, which communication arrangements don’t have yet. They also allow re-use of APIs across your BTP estate beyond the boundary of your ABAP Environment tenant.

As of today, a fully automated solution deployment with the BTP and Azure Terraform providers is only possible with the destination service approach.

Watch this TechEd 2023 session and see this new sample repository (still in development) for reference.

The application flow is quite simple once the authentication part is figured out.

Access your Communication Management configuration from your ABAP web UI:

https://your-steampunk-domain.abap-web.eu20.hana.ondemand.com/ui#Shell-home

For outbound communication users over HTTP, steampunk supports the typical set of authentication flows you know from BTP. I chose the OAuth2 Client Credentials grant because it is the most widely referenced one in the BTP world and reasonably secure.

Fig.2 ABAP Cloud API flow including OAuth2 token request from Microsoft Entra ID

Since I am integrating with an Azure Storage account, I will need to authenticate via Microsoft Entra ID (formerly known as Azure Active Directory).

Yes, Microsoft likes renaming stuff from time to time, too 😉.

Using the Azure Storage REST API, I can create, update, delete, and list files (blobs) as I please.
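Here is a minimal sketch of those operations with the ABAP Cloud HTTP client. It assumes a destination such as the subaccount destination `azure-blob` shown earlier, whose URL is the storage account root (`https://<account>.blob.core.windows.net`) and which attaches the Entra ID bearer token for you. The class `zcl_azure_blob_sketch`, the `x-ms-version` value, and the assumption that `set_uri_path` passes the query string of the list call through unchanged are illustrative choices, not taken from the GitHub repository. Per the Azure Storage REST API, a successful Put Blob answers 201, Get Blob and List Blobs answer 200, and Delete Blob answers 202.

```abap
CLASS zcl_azure_blob_sketch DEFINITION PUBLIC FINAL CREATE PUBLIC.
  PUBLIC SECTION.
    " Storage REST API version; bearer tokens need 2017-11-09 or later
    CONSTANTS c_api_version TYPE string VALUE `2021-08-06`.
    CLASS-METHODS blob_path
      IMPORTING iv_container   TYPE string
                iv_blob        TYPE string
      RETURNING VALUE(rv_path) TYPE string.
    METHODS constructor
      IMPORTING io_destination TYPE REF TO if_http_destination.

    " Create or overwrite a block blob; Azure answers 201 on success
    METHODS put_blob
      IMPORTING iv_container     TYPE string
                iv_blob          TYPE string
                iv_content       TYPE string
      RETURNING VALUE(rv_status) TYPE i
      RAISING   cx_web_http_client_error cx_web_message_error.

    " Read a blob as text
    METHODS get_blob
      IMPORTING iv_container      TYPE string
                iv_blob           TYPE string
      RETURNING VALUE(rv_content) TYPE string
      RAISING   cx_web_http_client_error cx_web_message_error.

    " Delete a blob; Azure answers 202 on success
    METHODS delete_blob
      IMPORTING iv_container     TYPE string
                iv_blob          TYPE string
      RETURNING VALUE(rv_status) TYPE i
      RAISING   cx_web_http_client_error.

    " List the blobs of a container; returns the raw XML answer
    METHODS list_blobs
      IMPORTING iv_container  TYPE string
      RETURNING VALUE(rv_xml) TYPE string
      RAISING   cx_web_http_client_error cx_web_message_error.

  PRIVATE SECTION.
    DATA mo_destination TYPE REF TO if_http_destination.
    " New client with path and version header preset
    METHODS new_client
      IMPORTING iv_path          TYPE string
      RETURNING VALUE(ro_client) TYPE REF TO if_web_http_client
      RAISING   cx_web_http_client_error.
ENDCLASS.

CLASS zcl_azure_blob_sketch IMPLEMENTATION.
  METHOD blob_path.
    rv_path = |/{ iv_container }/{ iv_blob }|.
  ENDMETHOD.

  METHOD constructor.
    mo_destination = io_destination.
  ENDMETHOD.

  METHOD new_client.
    ro_client = cl_web_http_client_manager=>create_by_http_destination( mo_destination ).
    DATA(lo_request) = ro_client->get_http_request( ).
    lo_request->set_uri_path( i_uri_path = iv_path ).
    lo_request->set_header_field( i_name = 'x-ms-version' i_value = c_api_version ).
  ENDMETHOD.

  METHOD put_blob.
    DATA(lo_client) = new_client( blob_path( iv_container = iv_container iv_blob = iv_blob ) ).
    DATA(lo_request) = lo_client->get_http_request( ).
    lo_request->set_header_field( i_name = 'x-ms-blob-type' i_value = 'BlockBlob' ).
    lo_request->set_header_field( i_name = 'Content-Type' i_value = 'application/json' ).
    lo_request->set_text( i_text = iv_content ).
    rv_status = lo_client->execute( if_web_http_client=>put )->get_status( )-code.
    lo_client->close( ).
  ENDMETHOD.

  METHOD get_blob.
    DATA(lo_client) = new_client( blob_path( iv_container = iv_container iv_blob = iv_blob ) ).
    rv_content = lo_client->execute( if_web_http_client=>get )->get_text( ).
    lo_client->close( ).
  ENDMETHOD.

  METHOD delete_blob.
    DATA(lo_client) = new_client( blob_path( iv_container = iv_container iv_blob = iv_blob ) ).
    rv_status = lo_client->execute( if_web_http_client=>delete )->get_status( )-code.
    lo_client->close( ).
  ENDMETHOD.

  METHOD list_blobs.
    " The query string selects the List Blobs operation on the container
    DATA(lo_client) = new_client( |/{ iv_container }?restype=container&comp=list| ).
    rv_xml = lo_client->execute( if_web_http_client=>get )->get_text( ).
    lo_client->close( ).
  ENDMETHOD.
ENDCLASS.
```

From the console application, you would instantiate the class with the destination created earlier and call `put_blob` with a container name, a blob name, and the JSON payload, then `get_blob` to read it back. Put Blob overwrites an existing block blob, which is how “update” works in this simple form.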

The Entra ID setup takes a couple of clicks:

Create a new app registration in the Microsoft Entra ID service on your Azure portal and generate a new client secret. Beware of the expiry date!

The preferred option below will start working once SAP adds the scope parameter for the OAuth2 Client Credentials grant, as described before.

Fig.3 Screenshot of attribute and secret mapping for ABAP Cloud Outbound user

For now, let’s have a look at a destination on subaccount level instead. Be aware that the scope parameter needs to be “https://storage.azure.com/.default” (see Fig.4 below, additional property “scope” at the bottom right). That is also the setting we are missing for the preferred approach mentioned above.

The standard login URLs for OAuth token endpoints on Microsoft Entra ID are the following:

https://login.microsoftonline.com/your-tenantId/oauth2/v2.0/token

https://login.microsoftonline.com/your-tenantId/oauth2/v2.0/authorize

Fig.4 Screenshot of attribute mapping from Entra ID to SAP BTP Destination

So far so good. Let’s run the integration test from our ABAP console application in Eclipse (ADT).

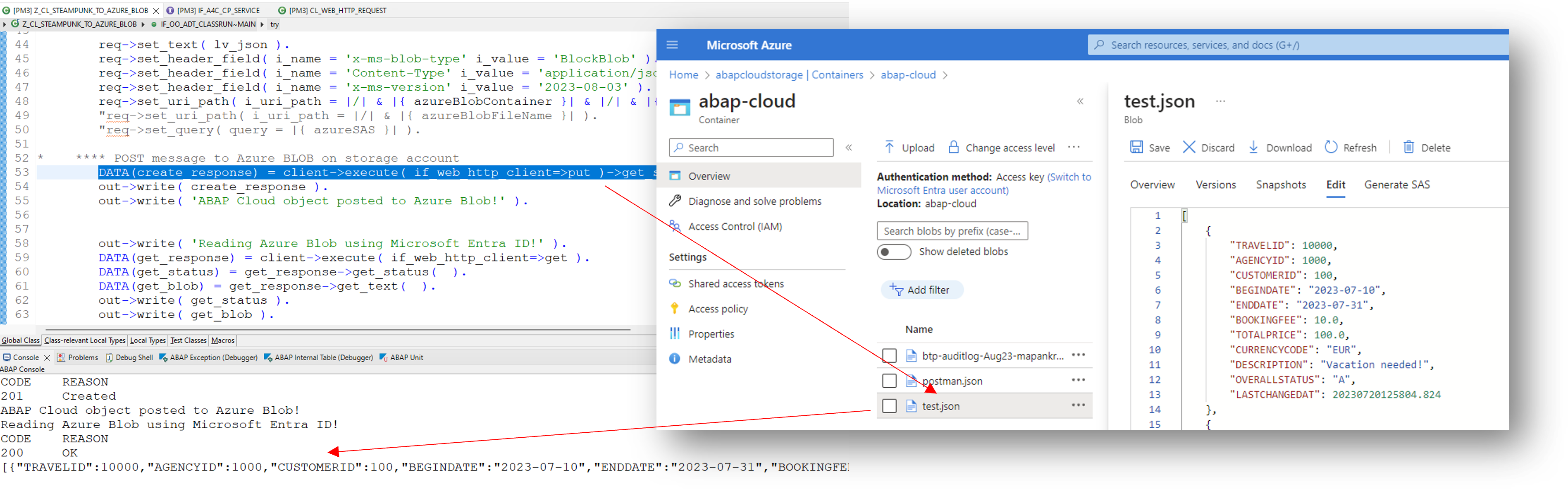

Fig.5 Screenshot of file interaction from ABAP Cloud and data container view on Azure

Excellent, there is our booking request: posted from ABAP, stored safe and sound as an Azure blob, and read back seamlessly.

See the shared Postman collection to help with your integration testing.

Thoughts on production readiness

The biggest caveat is the regularly required rotation of the OAuth2 client credential secret. Unfortunately, credential-free options such as Azure managed identities are not possible, because BTP is hyperscaler-agnostic and does not expose the underlying Azure components to you.

Some of you might say next: let’s use client certificates with “veeery long validity time frames like 2038” to push the problem so far out that someone else will have to deal with it. Well, certificate lifetimes keep getting shorter (TLS certificates at DigiCert, for instance, have had a maximum validity of 13 months since 2020), and you have to rotate them eventually, too 😉. Shorter certificate lifetimes also mean that more secure hashing algorithms come into effect much sooner.

I will dedicate a separate post to client certificates (mTLS) with steampunk for consuming Azure services.

What about federated identities? You could configure trust between your SAP Cloud Identity Service (or the steampunk auth service) and Microsoft Entra ID, so that requests from ABAP Cloud are authorized against Azure services. However, that is a more complex configuration with implications for your overall setup and a larger integration testing effort. And we embarked on this journey to discover a simple solution not too far away from AL11 and the likes, right? 😅

See a working implementation of federated identities with SAP Cloud Identity Service consuming Microsoft Graph, published by my colleague mraepple in his blog series here.

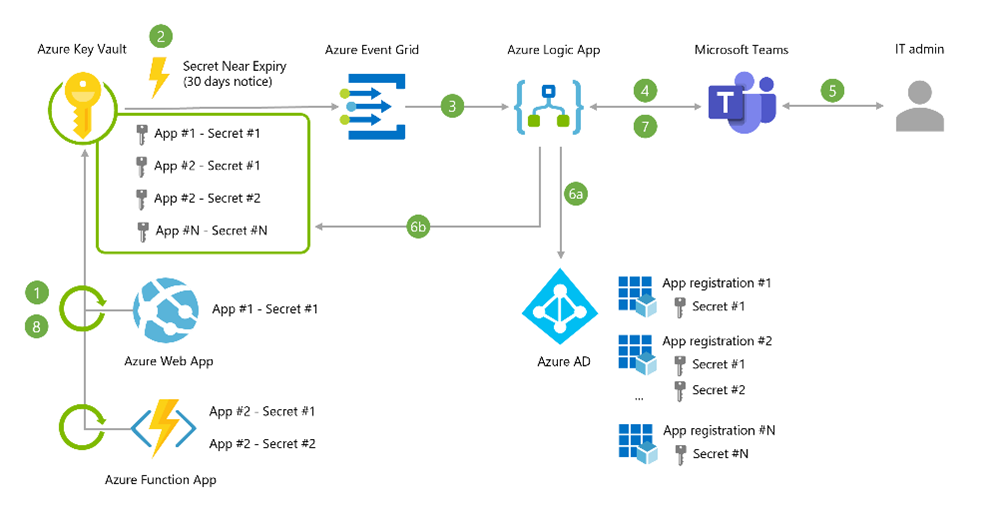

Ok, then let’s compromise and see how we can rotate secrets automatically. Azure Key Vault emits events for secrets, keys, and certificates to inform downstream services about upcoming expiry. Based on those events, a small low-code app can perform the secret update. The sample below goes the extra mile and asks the admins via Microsoft Teams whether they want to perform the change:

Fig.6 Architecture of secret rotation with Azure Key Vault and secret refresh approval

A new secret for the app registration in Entra ID can be generated with the Microsoft Graph API. See this post for details on the Azure Key Vault aspects of the mix.

To complete that flow and propagate the new secret to steampunk, we need to call the BTP APIs that store it. See the BTP REST API for destinations here to learn about the update method for the secret.

Have a look at my earlier blog post for specifics on how to do the same with certificates.

The estimated cost of such a secret rotation solution for 1,000 rotations per month is around $2 per month. With simpler configurations and fewer rotations, it can even be covered by free tiers.

Once you have applied the automation discussed above, you can incorporate it into your DevOps process and live happily ever after with no manual secret handling 😊.

Final Words

That’s a wrap 🌯! Today you saw how, in the absence of an application server file system and the NetWeaver MIME repository (good old days), you can use an Azure Storage account as your external data store from the BTP ABAP Environment (steampunk) using ABAP Cloud. In addition, you gained insights into the proper authentication setup and which flavors steampunk supports today. You also got a glimpse of automated deployment of the solution with the BTP and Azure Terraform providers.

To top it off, you learned what else is needed to operationalize the approach at scale with regular secret and certificate rotation.

Check SAP’s docs for external APIs with steampunk for further official materials.

What do you think dpanzer and lars.hvam? Not too bad, is it? 😉

Find all the resources to replicate this setup in this GitHub repository. Stay tuned for the remaining parts of the steampunk series on the Microsoft integration scenarios from my overview post.

Cheers

Martin
