

Product and Topic Expert


01-18-2023 12:10 PM

SAP Analytics Cloud administrators are often tasked with downloading the activities log for auditing or compliance reasons. To date, this has been a manual task, which is problematic for a variety of reasons.



Whilst there has been an API to download these logs for a while, it hasn’t been possible to fully automate the task because the API lacked ‘OAuth client’ support. ‘OAuth client’ support is now available with the latest release, Q1 2023.

Rather than have each customer reinvent the same thing over and over, it made sense for me to create a sample script to download these logs. So that’s what I’ve done, and this sample solution is available now.

My solution uses nodejs and Postman. If you’re happy to use nodejs (which is also available on SAP BTP) and the Postman library ‘newman’, then this sample solution is ideal.

The benefits of this sample are:

Command line interface

- enabling automation

- removing human effort and error

Files created

- by time period, rather than by size

- which are truly complete without duplicates in different files

- with a consistent time zone of your choice eliminating a missing or duplicate hour

- in a way that enables 3rd party tools to easily read them

Design and error management

- enabling a known result

Adopt and consume

I’ve done my very best to remove as many barriers as I can, so you can immediately adopt and consume the Activities Log API:

- All the thinking and best practices have been done for you

- No need to develop or write any code

- No need to understand how the API works

- No need to fathom various complexities

- daylight saving time zones, error handling, very rare API errors etc.

- Detailed step-by-step user guide to get it all working within about 2 hours

- Shared best practices and known issues to ensure best possible success

My meticulous attention to detail should help you avoid a number of rare errors, and you may even resolve some exceptionally rare issues with the manual download too! I hadn’t realised how complicated downloading a bunch of logs really was until I dived into the detail and thought about it for weeks on end!

Below you’ll find an article on this solution, along with a load of other related topics. I’ve compiled best practices covering many areas, including the sample script itself, the activities log in general, a set of FAQs and, for developers, best practices when using the API.

Feedback is important

Administrators just like you would very much value feedback from other customers on this solution. Please find the time to post a comment on this blog if you adopt it, and hit the like button! As a suggestion, please use this template:

We have this number of activity logs: xx

We use this sample with this number of SAP Analytics Cloud Services (tenants): xx

We use the option(s): ‘all’, ‘lastfullmonth’, etc.

We use time zone hour/mins: xx hours / 0 mins

We use a scheduler to run this sample script: yes/no

We saved this much time using this sample, rather than developing it ourselves: xx weeks/days

We rate this sample out of 10 (1=low, 10=high): xx

Our feedback for improvement is: xx

Your feedback (and likes) will also help me determine if I should carry on investing my time to create such content.

Feel free to follow this blog for updates.

Simple demo

A simple demo of the command-line interface is shown here. There are 12 other command-line argument options available.

Demo showing option ‘lastfullday’ (week and month periods are also available)

Resources

| Resource | Version | Links |
|---|---|---|
| Latest article | Version 1.0.1 – May 2023 | Preview Slides (Microsoft PowerPoint), Download Slides (Microsoft PowerPoint) |
| Installation and Configuration user guide | Version 0.8 – January 2023 | Download (.pdf), Preview (.pdf) |
| Sample Solution (code) | Version 0.8.1 – February 2023 | GitHub (zip download), Change log |

For the same solution for BTP Audit Logs, please refer to this blog.

Content

- Summary of benefits

- Why download activity logs

- Introducing a sample script to download activities log

- Logic of .csv filenames

- Demo

- Command line options overview

- Error management

- Getting a known result

- Time zone support

- All activity logs

- Activity log download performance

- Designed for customisation

- Related recommendations and best practices

- Installation & configuration

- Known issue

- Frequently asked questions

- Q: Can I use SAP Analytics Cloud to query the activities log

- Q: Is there an OData API to the activities log

- Q: Where can I find more details about the activities log

- Q: Is the Activities Log API available for when SAP Analytics Cloud is hosted on the SAP NEO and Clo...

- Q: Is the Activities Log API available for the Enterprise and the BTP Embedded Editions of SAP Analy...

- Q: For the logs written to the .csv files, are they filtered in any way

- Q: Is there a way to specify which activities should be logged

- Q: Can I use this to download the Data Changes log

- Q: Does the activities log API enable additional functionality compared to the manual options

- Q: How does the contents of the files between manual download compare with this command-line downloa...

- Q: How does security and access rights work with the activity logs

- Q: Is the sample solution secure

- Q: Is the ‘event’ of downloading the logs, via the API, a recordable event in the activities log

- Q: The activities log shows the logs where manually deleted, what time zone does the description ref...

- Q: When using the sample script to download the logs, why is the content of files different after I ...

- Q: Is there a way to disable the daily email notifications, when the activities log becomes full

- For the developer

Summary of benefits

- Command line interface

- enabling automation

- removing human effort and error

- Files created

- by time period, rather than by size

- which are truly complete without duplicates in different files

- with a consistent time zone of your choice eliminating a missing or duplicate hour

- in a way that enables 3rd party tools to easily read them

- Design and error management

- enabling a known result

- Immediately adopt and consume the Activities Log API

- All the thinking and best practices have been done for you

- No need to develop or write any code

- No need to understand how the API works

- No need to fathom various complexities

- daylight saving time zones, error handling, very rare API errors etc.

- Detailed step-by-step user guide to get it all working within about 2 hours

- Shared best practices and known issues to ensure best possible success

Why download activity logs?

- Activity logs are typically required for compliance and auditing needs

- Thus, there’s often a need to download these logs as old logs get automatically purged by SAP

- Managing the logs can be problematic

- Manual effort required to download them

- Download often spans multiple files

- The time zone is the local time zone, which changes with daylight saving. This makes it harder to ensure you have a complete set of logs, without duplicates across multiple files and without a missing ‘daylight saving hour’


- Leads to the following requirements

- Automated download

- Easy way to determine if you have a complete set of logs

- Known result is critical

- Time zone support

- Different organisations have slightly different needs

- Some require daily logs, others weekly or monthly

Introducing a sample script to download activities log

- Sample script to download the Activities Log

- Command line that downloads the logs and generates dynamically named files, based on time (day, week, month)

- Uses the SAP Analytics Cloud REST API and an OAuth client (available from the 2023 Q1 release)

- The sample comprises:

- JavaScript (.js) file

- Postman collection (for downloading the activities log)

- Postman environment (to define your SAP Analytics Cloud Services and OAuth client details)

- the .js calls Postman ‘as a library’

- this, in turn, requires nodejs

- Solution is provided ‘as is’ without any official support from SAP

- It is thus a community driven solution
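The sample’s .js file calls Postman ‘as a library’ through newman. The sketch below shows that general pattern, assuming newman’s `newman.run(options, callback)` entry point and its run summary (`summary.run.failures`). It is not the sample’s actual code, and the newman module is passed in as a parameter so the wrapper can be exercised without a tenant.

```javascript
// Minimal sketch: run a Postman collection via newman and turn the outcome
// into a promise. Pass require("newman") as the first argument.
function runDownload(newman, { collectionFile, environmentFile }) {
  return new Promise((resolve, reject) => {
    newman.run({ collection: collectionFile, environment: environmentFile }, (err, summary) => {
      if (err) {
        // newman itself could not run, e.g. the collection could not be loaded
        reject(err);
        return;
      }
      // Failed requests or failed Postman tests are collected in summary.run.failures
      const failures = summary.run.failures;
      if (failures.length > 0) {
        reject(new Error(`${failures.length} failure(s) during the collection run`));
        return;
      }
      resolve(summary);
    });
  });
}
```

A caller might use `runDownload(require("newman"), { collectionFile: "activities.postman_collection.json", environmentFile: "environment.json" }).catch(() => { process.exitCode = 1; })`, where both file names are placeholders, so that any failure surfaces as a non-zero exit code.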

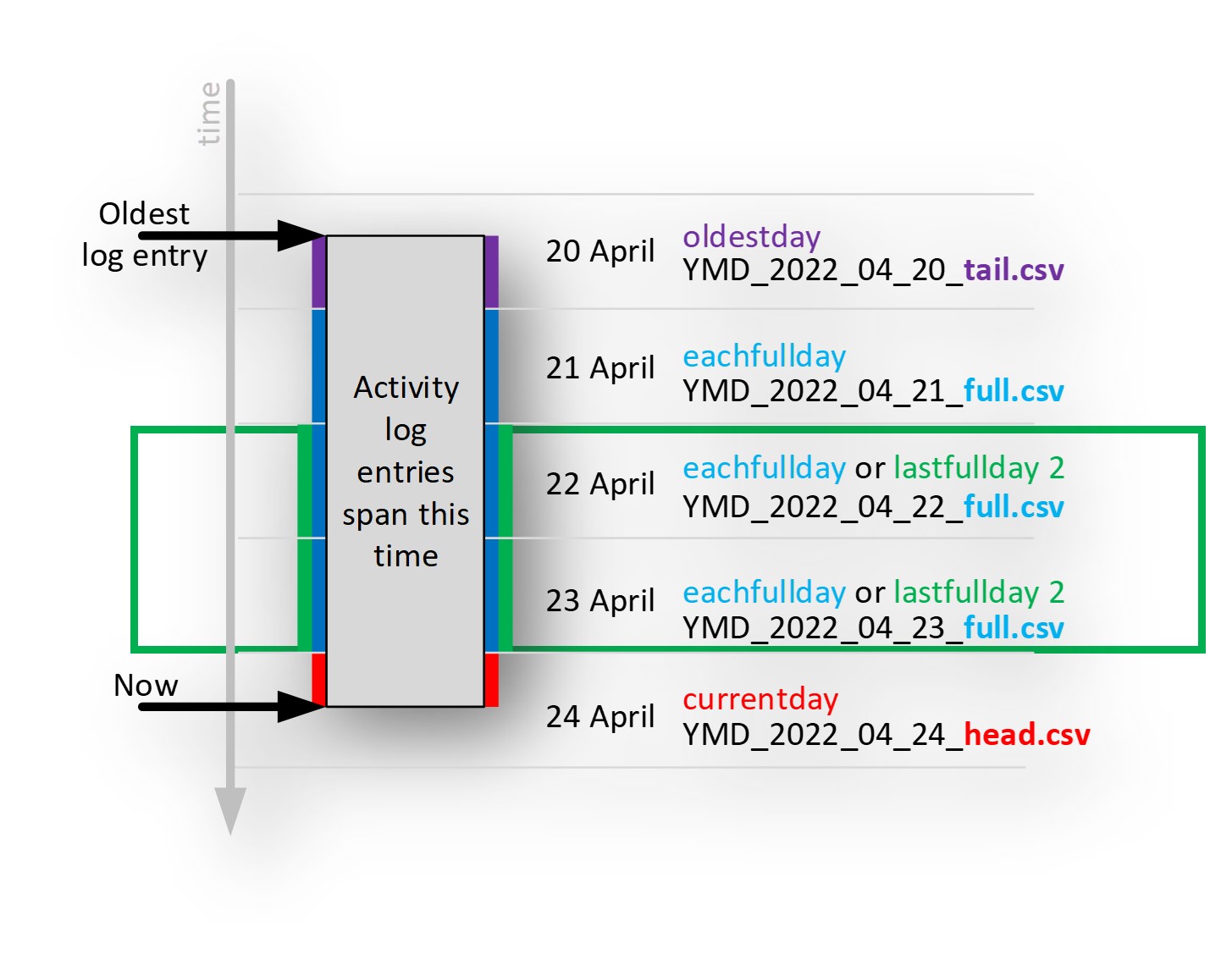

Logic of .csv filenames

- oldestday logs are for the day containing the oldest log entry

- The logs for this day will be incomplete

- Logs earlier in that day are not present because they have been deleted, either manually or automatically by SAP

- Filenames will have _tail.csv

- fullday logs are periods where:

- 1) there is a log entry in the preceding day

- 2) today is not the same day as the log entry itself

- Filenames will have _full.csv

- currentday logs must also be incomplete because the day is not yet over

- Filenames will have _head.csv

- This logic applies to periods ‘weeks’ and ‘months’ in a similar way as for the ‘day’

- Only fullday, fullweek and fullmonth logs are ‘complete’ and contain no duplicates

- Files will not be created if there aren’t any activities for that period

- This means you could have ‘missing’ files, but this is because there are no logs for that period
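To make the rules above concrete, here is a hypothetical sketch for the ‘day’ period. It assumes each entry’s day has already been computed as a "YYYY-MM-DD" key in the chosen fixed time zone, reads condition 1 as ‘at least one entry exists on an earlier day’, and uses made-up file names; the real script’s naming differs.

```javascript
// Hypothetical sketch of the tail/full/head rules. Day keys are "YYYY-MM-DD"
// strings in the chosen fixed time zone, so string comparison is chronological.
function classifyDay(dayKey, oldestDayKey, todayKey) {
  if (dayKey === todayKey) return "head"; // day not over yet (checked first: an assumption)
  if (dayKey === oldestDayKey) return "tail"; // earlier entries that day may have been deleted
  if (dayKey > oldestDayKey && dayKey < todayKey) return "full"; // earlier entries exist, day is over
  throw new RangeError(`${dayKey} is outside ${oldestDayKey}..${todayKey}`);
}

// Counts entries per output file; days without entries produce no file at all.
function planFiles(entryDayKeys, todayKey) {
  const sorted = [...entryDayKeys].sort();
  const oldest = sorted[0];
  const files = new Map();
  for (const key of sorted) {
    const name = `${key}_${classifyDay(key, oldest, todayKey)}.csv`;
    files.set(name, (files.get(name) ?? 0) + 1);
  }
  return files;
}
```

For entries on 16, 17 and 18 January with ‘today’ being 18 January, this yields one `_tail`, one `_full` and one `_head` file, and only the middle one is complete.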

Demo

Command line options overview

4 main options, each option provides day, week and month variants

- Oldest: oldestday oldestweek oldestmonth

- Creates a single _tail.csv for the oldest (incomplete) period

- Eachfull: eachfullday eachfullweek eachfullmonth

- Creates a file _full.csv for each and every ‘full’ period

- Lastfull: lastfullday lastfullweek lastfullmonth

- Creates a single file _full.csv for the last ‘full’ period

- Ideal to run daily, weekly or monthly

- Current: currentday currentweek currentmonth

- Creates a single file _head.csv for the current (incomplete) period
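The option names above follow a regular pattern (prefix × period), plus ‘all’. A hypothetical parser for them is sketched below; the defaults (‘lastfullday’, 1 period) and the rule that only the ‘lastfull’ options accept [periods] are assumptions based on this article, not the script’s actual argument handling.

```javascript
const PREFIXES = ["oldest", "eachfull", "lastfull", "current"];
const UNITS = ["day", "week", "month"];
// 4 prefixes x 3 units = 12 options, plus "all"
const OPTIONS = new Set(["all", ...PREFIXES.flatMap((p) => UNITS.map((u) => p + u))]);

// Hypothetical parser for: <option> [periods]
function parseCommandLine(argv, defaults = { option: "lastfullday", periods: 1 }) {
  const [option = defaults.option, periodsArg] = argv;
  if (!OPTIONS.has(option)) {
    throw new Error(`Unknown option '${option}'. Valid options: ${[...OPTIONS].join(", ")}`);
  }
  let periods = defaults.periods;
  if (periodsArg !== undefined) {
    if (!option.startsWith("lastfull")) {
      throw new Error("[periods] is only supported by lastfullday, lastfullweek and lastfullmonth");
    }
    periods = Number(periodsArg);
    if (!Number.isInteger(periods) || periods < 1) {
      throw new Error(`[periods] must be a positive integer, got '${periodsArg}'`);
    }
  }
  const unit = UNITS.find((u) => option.endsWith(u)) ?? null; // null for "all"
  return { option, unit, periods };
}
```

In a script this would be called as `parseCommandLine(process.argv.slice(2))`.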


Error management

- The sample script handles a good number of errors, including but not limited to:

- session access token timeouts (HTTP 401 status code)

- x-csrf-token timeouts (HTTP 403 status code)

- server too busy (HTTP 429 status code), in which case it waits and automatically retries until successful

- Script is designed to handle these errors at any point in the workflow providing maximum stability

- Unhandled errors could be the result of

- an error on the part of SAP Analytics Cloud

- OAuth authentication issues

- API availability

- network or connection issues

- Anything unhandled prevents all logs from being written out. Failures therefore won’t corrupt existing files, making it safe to repeatedly re-run the script to write out log files previously downloaded

- Errors returned from the API are written to the console helping you to understand the root cause

- The sample script will return an:

- exit code of 0, if there were no unhandled errors. Everything went as expected

- exit code of 1, if there was any unhandled error that occurred during the download of the activity logs

- No .csv log files will be created, which means no files will be ‘half written’

- If checking for this exit code, wait 5 to 10 minutes before running the script again. This gives SAP Analytics Cloud time to automatically rectify and recover from any issues on its part
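The retry behaviour described above can be sketched as a small wrapper. This is a minimal sketch, assuming `doRequest` is any function returning a promise of an object with a `status` field (for example a `fetch` call); the `refreshAccessToken` and `refreshCsrfToken` hooks are hypothetical names. Unlike the sample, which retries HTTP 429 until successful, it caps the number of attempts so it cannot loop forever.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retries on 401 (new access token), 403 (new x-csrf-token) and 429 (wait);
// any other non-2xx status is treated as unhandled.
async function requestWithRetry(doRequest, hooks = {}, { maxAttempts = 10, waitMs = 5000 } = {}) {
  let response;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    response = await doRequest();
    if (response.status >= 200 && response.status < 300) return response;
    if (response.status === 401 && hooks.refreshAccessToken) {
      await hooks.refreshAccessToken(); // access token expired: get a new one, then retry
    } else if (response.status === 403 && hooks.refreshCsrfToken) {
      await hooks.refreshCsrfToken(); // x-csrf-token expired: get a new one, then retry
    } else if (response.status === 429) {
      await sleep(waitMs); // server too busy: wait, then retry
    } else {
      break; // unhandled error: stop and report it
    }
  }
  throw new Error(`Request failed, last HTTP status ${response.status}`);
}
```

Throwing instead of returning a partial result mirrors the ‘all or nothing’ approach above: the caller writes no files and exits with code 1.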

Getting a known result

- Extension to the options: lastfullday | lastfullweek | lastfullmonth

- Has an additional option [periods], which creates a file for each of the last [periods] full periods

- lastfullday 5

- will create _full.csv files for each of the last 5 full days

- lastfullweek 3

- will create _full.csv files for each of the last 3 full weeks


- This feature solves the problem where a download failed for any reason, for example:

- SAP Analytics Cloud Services in maintenance mode

- SAP Analytics Cloud Services technical issue, API unavailable

- On-premise issue running the sample script

- You have 3 options

- 1) Use this [periods] option

- 2) Or check the exit code and repeat the download until the exit code is 0

- 3) Or combine the above two

- If not checking the exit code, use these [periods]:

- Run daily: lastfullday 4

- Run weekly: lastfullweek 3

- Run monthly: lastfullmonth 1

- This way, any failure will be recovered by the next, hopefully successful run

- It would require a significant and repeated failure to miss any logs
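Option 3 above (use [periods] and also check the exit code) could be wrapped as below. This is a minimal sketch using Node’s `child_process.spawnSync`; the command-line form `node nodejs_4101_download_activities.js lastfullday 4` is an assumption based on the ‘lastfullday 5’ examples, and the retry count and 10-minute pause are illustrative.

```javascript
const { spawnSync } = require("child_process");

const wait = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Runs a command until it exits with code 0, pausing between attempts so that
// SAP Analytics Cloud has time to recover. Returns the number of runs used.
async function runUntilSuccess(command, args, { maxRuns = 3, waitMs = 10 * 60 * 1000 } = {}) {
  for (let run = 1; run <= maxRuns; run++) {
    const result = spawnSync(command, args, { stdio: "inherit" });
    if (result.status === 0) return run; // exit code 0: everything went as expected
    if (run < maxRuns) {
      console.error(`Run ${run} failed (exit code ${result.status}); retrying in ${waitMs / 60000} min`);
      await wait(waitMs);
    }
  }
  throw new Error(`Still failing after ${maxRuns} runs`);
}
```

A scheduler could then call `runUntilSuccess("node", ["nodejs_4101_download_activities.js", "lastfullday", "4"])`; with [periods] set to 4, even a run that exhausts its retries is covered by the next day’s run.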


Time zone support

- The time zone of the browser used to download the logs determines the time zone of the logs’ timestamps

- However, the browser’s time zone typically changes during the year due to daylight saving

- This dynamic change causes complications easily resulting in

- A missing hour of logs

- An hour of duplicate logs

- Confusing timestamps for logs before the time zone change, but downloaded after the time zone changed

- This sample script resolves these problems by using a fixed time zone of your choice

- It means

- you get a known result, although you need to decide which time zone you want for the entire year

- daylight saving changes have no impact

- all the dynamically created filenames are based off the time zone of your choice

- It doesn’t matter where in the world you run the script, if you maintain the same time zone setting, the script will always create files with the same content

- Like the manual download method, the sample script can use the local (often dynamically changing) time zone, but this isn’t recommended for the reasons mentioned
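Because the API returns timestamps in UTC (see the FAQ below), a fixed time zone amounts to adding a constant offset. A minimal sketch, assuming ISO 8601 UTC input strings and an offset given as hours plus minutes with the same sign (e.g. -3 and -30 for UTC-03:30); the function name is hypothetical. Since the offset never changes, there is no missing or duplicated hour.

```javascript
// Converts a UTC ISO 8601 timestamp into a fixed offset (no daylight saving).
// Assumption: offsetMins carries the same sign as offsetHours (e.g. -3, -30).
function toFixedOffset(isoUtc, offsetHours, offsetMins = 0) {
  const offsetMs = (offsetHours * 60 + offsetMins) * 60 * 1000;
  // Shift the instant, then read it back with the UTC getters so the
  // machine's local time zone never influences the result.
  const shifted = new Date(Date.parse(isoUtc) + offsetMs);
  const pad = (n) => String(n).padStart(2, "0");
  const day = `${shifted.getUTCFullYear()}-${pad(shifted.getUTCMonth() + 1)}-${pad(shifted.getUTCDate())}`;
  const time = `${pad(shifted.getUTCHours())}:${pad(shifted.getUTCMinutes())}:${pad(shifted.getUTCSeconds())}`;
  return { day, timestamp: `${day} ${time}` };
}
```

The `day` value is what decides which daily file an entry lands in, which is why changing the offset moves entries between files.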

All activity logs

all

- If you don’t want a file per period, then use the ‘all’ option

- This downloads all the logs available and creates a single file

- There is no restriction on the logs downloaded or written out, it is everything

- Filename contains the start and end datetime stamp

- Option (see later) to use a fixed filename

Activity log download performance

- Each .csv file

- Has a header

- Is comma separated

- Unlike the manually downloaded logs, has no empty rows between header and the data

- The API request to download the logs is optimised by filtering on the ‘from’ date, thus limiting the download to a certain time period and improving overall performance

- This isn’t applied when using the ‘all’, or ‘oldest’ (day/week/month) options

- However, since the script needs logs in earlier periods to know whether another period is ‘full’, logs earlier than the requested period are also downloaded. This means a few more days’ worth of logs are downloaded than you might expect; these extra logs are simply discarded

- Overall performance (download and writing files) typically takes ~1 second per 3,300 entries

- So 330,000 log entries take about 100 seconds (1 min 40 sec)

- And 660,000 log entries take about 200 seconds (3 min 20 sec)

- 500,000 entries is the default maximum before SAP starts to automatically delete them

- See documentation for more details

- The performance is almost identical to the manual download method and >95% of the time is spent waiting for the download of the logs to complete
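The rule of thumb above is simple arithmetic. As a quick helper (hypothetical, using the article’s figure of roughly 3,300 entries per second):

```javascript
// Rule of thumb from the article: roughly 3,300 entries downloaded and written per second.
const ENTRIES_PER_SECOND = 3300;

// Returns a rough duration estimate for downloading and writing `entries` log entries.
function estimateDuration(entries) {
  const seconds = Math.round(entries / ENTRIES_PER_SECOND);
  return `${seconds} s (${Math.floor(seconds / 60)} min ${seconds % 60} sec)`;
}
```

For the default maximum of 500,000 entries, `estimateDuration(500000)` gives "152 s (2 min 32 sec)"; actual timings depend mostly on how long the download itself takes.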

Designed for customisation

Easy to customise

- Change the default option and default [periods] option

- Saving you the need to provide command line arguments

- Change the folder & base filename to your preference

- If you’d prefer a non-dynamic filename (handy for scheduling jobs that read the file) then enable that here, with the filename of your choice

- Change the friendly name of the SAP Analytics Cloud Service

- This friendly name is used to form the filename

- Duplicate these for each SAP Analytics Cloud Service you have

- Update the ‘environment’ setting within the JavaScript so that it points to the exported environment.json

- Specify the time zone hours and minutes in the environment too
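A hypothetical sketch of how these settings could combine into an output path. Only `use_fixed_filename` is named in this article (see the FAQ on OData); the other keys and the filename pattern are made up for illustration.

```javascript
const path = require("path");

// Hypothetical per-tenant settings; duplicate the object for each SAP Analytics Cloud Service.
const settings = {
  folder: "logs",
  base_filename: "activities",
  friendly_name: "PROD", // friendly name of the SAP Analytics Cloud Service
  use_fixed_filename: false, // true: same filename every run (handy for scheduled readers)
  fixed_filename: "activities.csv",
};

// Builds the output path for one period, e.g. periodLabel "2023-01-17", suffix "full".
function buildFilename(s, periodLabel, suffix) {
  if (s.use_fixed_filename) return path.join(s.folder, s.fixed_filename);
  return path.join(s.folder, `${s.base_filename}_${s.friendly_name}_${periodLabel}_${suffix}.csv`);
}
```

Putting the friendly name into the filename keeps files from different tenants apart when they share a folder.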

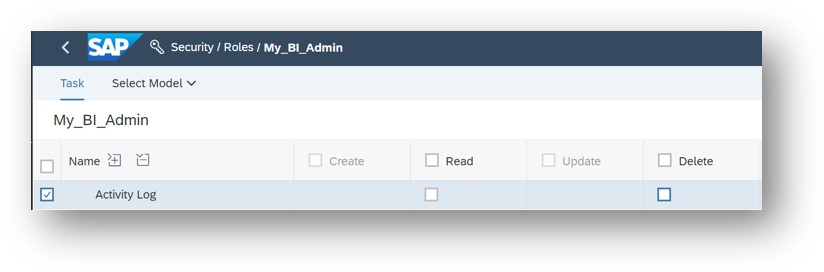

Related recommendations and best practices

- Use the sample script to download logs on a regular basis

- Validate the sample script is working as expected

- For others to benefit, share your experience and ideas via comments & likes to the blog

- Manage access rights

- An authorized user could delete just a single day’s worth of logs

- For compliance reasons it's not good to allow logs to be deleted in this manner

- To prevent logs being manually deleted revoke the ‘Delete’ right for ‘Activity Log’ for all users, including admins

- This means the ‘_full.csv’ logs are then truly complete

- Let SAP automatically delete oldest entries

- Older logs have additional use-cases that may be used by other sample scripts! 😉

- For example, delete or disable dormant users (users without a logon event in the last x days)

- If your logs are automatically deleted too early (in less than a year), request SAP to extend the retention time to a year (see documentation)


- OAuth Client management

- Keep the OAuth password top secret and change it on a regular basis (like all other OAuth passwords)

- The OAuth password is stored in the environment file thus restricting access to this file is necessary

- The OAuth access should be restricted to ‘Activities’ only so that, should the OAuth client credentials become compromised, you can still be sure no changes can be made to other areas
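The OAuth client id and secret are exchanged for an access token using the standard OAuth 2.0 client credentials grant (RFC 6749, section 4.4). A minimal sketch, assuming Node 18+ with its global `fetch` and the token URL shown for your OAuth client in SAP Analytics Cloud; the sample presumably performs this step inside its Postman collection, so this only illustrates the protocol and why the secret must be guarded.

```javascript
// Minimal sketch of the OAuth 2.0 client credentials grant (RFC 6749, section 4.4).
// fetchImpl defaults to the global fetch available in Node 18+.
async function getAccessToken(tokenUrl, clientId, clientSecret, fetchImpl = globalThis.fetch) {
  const credentials = Buffer.from(`${clientId}:${clientSecret}`).toString("base64");
  const response = await fetchImpl(tokenUrl, {
    method: "POST",
    headers: {
      Authorization: `Basic ${credentials}`, // client id and secret as HTTP Basic auth
      "Content-Type": "application/x-www-form-urlencoded",
    },
    body: "grant_type=client_credentials",
  });
  if (!response.ok) throw new Error(`Token request failed with HTTP ${response.status}`);
  const json = await response.json();
  return json.access_token; // bearer token for subsequent API calls
}
```

Anyone holding the id and secret can obtain such a token, which is why limiting the client to ‘Activities’ limits the damage if they leak.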

Installation & configuration

Follow the detailed step-by-step user guide but at a high level:

- Download & install (30 mins)

- Install nodejs

- Install Postman app

- https://www.postman.com/downloads/

- Postman app is used just to validate the setup, it’s not actually used to download the logs

- Download the sample script

- from GitHub (direct zip download)

- Import sample postman collection and environment into Postman app


- Configure (30 mins)

- Inside nodejs:

- install newman (Postman libraries) with npm install -g newman

- so the script can create files, link filesystem libraries with npm link newman

- As an admin user, create a new OAuth Client inside SAP Analytics Cloud

- With Postman app:

- configure the Postman environment, including time zone, and validate the OAuth setup is correct

- export the validated Postman environment

- Configure the .js sample script and validate file references

- Inside nodejs:

- Run the sample inside nodejs with node nodejs_4101_download_activities.js (60 mins)

- Investigate and validate various options available

- Check for known issues (see next)
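The ‘validate file references’ step can be partly automated. A hypothetical pre-flight check, assuming the standard Postman environment export format (a `values` array of `{ key, value, enabled }` objects); the variable names to require (such as `tz_hours`) depend on the sample’s environment and are placeholders here.

```javascript
const fs = require("fs");

// Hypothetical pre-flight check; returns a list of problems (empty means OK).
function checkSetup({ collectionFile, environmentFile, requiredKeys = [], checkNewman = true }) {
  const problems = [];
  if (checkNewman) {
    try {
      require.resolve("newman"); // fails unless newman is installed locally or linked (npm link newman)
    } catch {
      problems.push("Cannot resolve 'newman': run npm install -g newman, then npm link newman");
    }
  }
  for (const file of [collectionFile, environmentFile]) {
    if (!fs.existsSync(file)) problems.push(`File not found: ${file}`);
  }
  if (problems.length > 0) return problems;

  let environment;
  try {
    environment = JSON.parse(fs.readFileSync(environmentFile, "utf8"));
  } catch (err) {
    return [`Environment file is not valid JSON: ${err.message}`];
  }
  // A Postman environment export lists its variables in a "values" array.
  const enabledKeys = new Set(
    (environment.values || []).filter((v) => v.enabled !== false).map((v) => v.key)
  );
  for (const key of requiredKeys) {
    if (!enabledKeys.has(key)) problems.push(`Missing or disabled environment variable: ${key}`);
  }
  return problems;
}
```

Running this before the first real download turns typos in file paths or a disabled variable into a readable message rather than an API error.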

Known issue

Not all (older) logs may be downloadable via the API

- Log entries with massive text values:

- Some activity logs store ‘current’ and ‘previous’ values

- Some of these values had very long text entries (over 3 million characters!) and whilst these logs can be downloaded manually, the text is just too long for the API to download them

- Activity logs now store the delta difference, rather than the actual full value of what’s changed. These changes have taken place during 2022 and some changes may continue into 2023

- This problem is limited to:

- a few events (so far identified) with object types: ‘Role’, ‘Lifecycle’, ‘Model’, ‘Content Network’

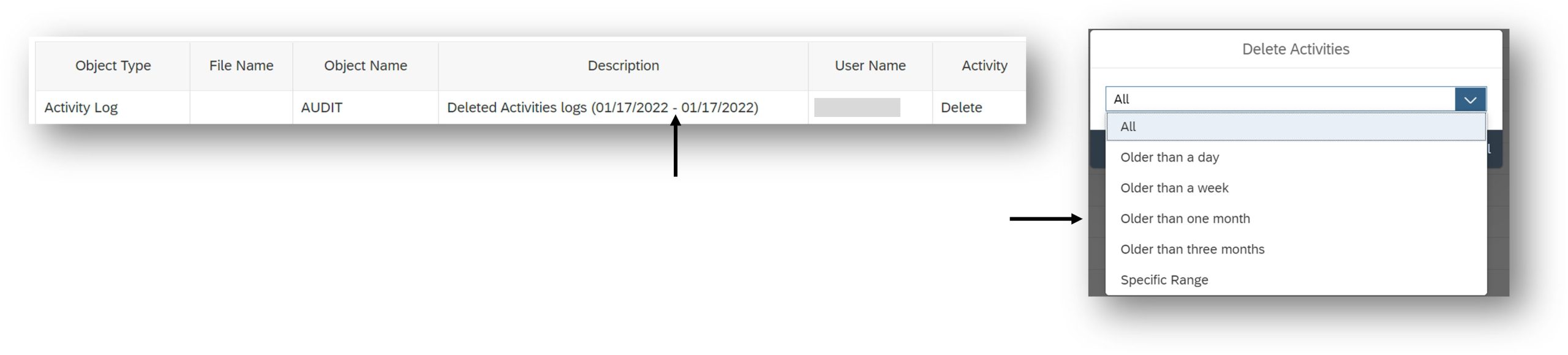

- Workaround:

- Continue to manually download these older logs from 2022 or earlier

- Use this sample script (that uses the API) for logs from 2023 onwards only. For example, use ‘lastfullmonth’ rather than ‘eachfullmonth’

- Alternatively, delete the day(s) containing the problematic logs. Delete them via the user interface using the ‘Specific Range’ option, picking one day’s worth at a time (be really careful and double-check the start and end dates before pressing delete!). Then use this sample script without this restriction. Keep as many older logs as possible, as they will be helpful for other sample scripts currently in development!

- FAQ:

- The pageSize makes no difference, because the pageSize determines the number of log entries per page. The problem here is that a single log entry is just too long, meaning a pageSize of 1 will also have the problem

- Using the sample script with the ‘all’ option is the quickest way to determine if this issue affects you. If the ‘all’ option works, then you don’t have this issue

- Identify the problematic entry by manually downloading the logs and opening them in Microsoft Excel. Add a column with the combined length of the current and previous values, =len(..)+len(..), and filter on this value for the largest ones. Excel limits the number of characters per cell (32,767), so the number won’t be the true length, but it will help identify which logs are troublesome when you share this with SAP Support

- If you still identify this problem for logs with a timestamp in 2023, please log an incident with SAP Support and share your discovery via blog comments for others

- When using this sample script, the symptoms of the problem appear in the console output in one of two ways:

1) Graceful exit:

Unable to proceed. A failure occurred whilst running this sample script

The following errors occurred whilst trying to download activity logs from SAP Analytics Cloud:

[0] All Activities Log test: Too many continuous errors. Aborting. Response: {"status":400,"message":"HTTP 400 Bad Request"}

If the error appears to be with the API, then please either try again later, or log an incident with SAP Support

2) Crash:

#

# Fatal error in , line 0

# Fatal JavaScript invalid size error 169220804

#

#

#

#FailureMessage Object: 0000007FDDBFC210

1: 00007FF77E779E7F node_api_throw_syntax_error+175967

2: 00007FF77E69036F v8::CTypeInfoBuilder<void>::Build+11999

3: 00007FF77F4FD182 V8_Fatal+162

4: 00007FF77F03A265 v8::internal::FactoryBase<v8::internal::Factory>::NewFixedArray+101

5: 00007FF77EEDE7CE v8::Context::GetIsolate+16478

6: 00007FF77ED2AA40 v8::internal::CompilationCache::IsEnabledScriptAndEval+25952

7: 00007FF77F2378B1 v8::internal::SetupIsolateDelegate::SetupHeap+558193

8: 00007FF6FF4E6DA9

Visit the user guide for more troubleshooting advice
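As an alternative to the Excel approach described above, the combined length can be computed without Excel’s per-cell limit. A minimal sketch, assuming a manually downloaded .csv with double-quoted fields and a header row containing ‘Previous Value’ and ‘Current Value’; the small CSV parser handles quoted commas and line breaks but is not a complete CSV implementation.

```javascript
// Minimal CSV parser for double-quoted fields: handles "" escapes and commas or
// line breaks inside quotes. Not a complete RFC 4180 implementation.
function parseCsv(text) {
  const rows = [];
  let row = [];
  let field = "";
  let inQuotes = false;
  for (let i = 0; i < text.length; i++) {
    const ch = text[i];
    if (inQuotes) {
      if (ch === '"' && text[i + 1] === '"') {
        field += '"'; // escaped quote
        i++;
      } else if (ch === '"') {
        inQuotes = false;
      } else {
        field += ch;
      }
    } else if (ch === '"') {
      inQuotes = true;
    } else if (ch === ",") {
      row.push(field);
      field = "";
    } else if (ch === "\n") {
      row.push(field);
      rows.push(row);
      row = [];
      field = "";
    } else if (ch !== "\r") {
      field += ch;
    }
  }
  if (field !== "" || row.length > 0) {
    row.push(field);
    rows.push(row);
  }
  return rows;
}

// Returns the `top` rows with the largest combined Previous/Current Value length.
function largestEntries(csvText, top = 10) {
  const rows = parseCsv(csvText);
  // The manual download has a multi-row header, so search for the row naming both columns.
  const headerIndex = rows.findIndex((r) => r.includes("Previous Value") && r.includes("Current Value"));
  if (headerIndex < 0) throw new Error("No header row with 'Previous Value' and 'Current Value'");
  const prev = rows[headerIndex].indexOf("Previous Value");
  const curr = rows[headerIndex].indexOf("Current Value");
  return rows
    .slice(headerIndex + 1)
    .filter((fields) => fields.length > Math.max(prev, curr)) // skip blank or short rows
    .map((fields) => ({ length: fields[prev].length + fields[curr].length, fields }))
    .sort((a, b) => b.length - a.length)
    .slice(0, top);
}
```

Usage would be along the lines of `largestEntries(require("fs").readFileSync("manual_download.csv", "utf8"), 5)`, with a placeholder file name. JavaScript string lengths count UTF-16 code units, which is close enough to rank the entries.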

Frequently asked questions

Q: Can I use SAP Analytics Cloud to query the activities log?

A:

- Yes. You can use the ‘Administration Cockpit’ to query these logs along with many other metadata sources. There is no need to download the logs to do this

- Refer to the official documentation or blog post for more details

Q: Is there an OData API to the activities log?

A:

- No. The sample script uses a REST API to download the logs; there is no OData API that SAP Analytics Cloud or Data Warehouse Cloud can consume

- You could, though, upload/acquire the data via the SAP Analytics Cloud Agent, for example. If so, edit the .js file and set use_fixed_filename=true so the Cloud Agent can pick up the same filename each time. Loading the data back into SAP Analytics Cloud would typically only be necessary to keep the logs for longer than SAP retains them before deleting them automatically

Q: Where can I find more details about the activities log?

A:

- The official documentation provides more information about the rights/roles required to access or delete these logs, how to delete or manually download the logs and much more

- The API documentation also provides more details about the API including how to use the request body to apply filters for example

Q: Is the Activities Log API available when SAP Analytics Cloud is hosted on the SAP NEO and Cloud Foundry platforms?

A:

- Yes, and this sample script works with both

Q: Is the Activities Log API available for the Enterprise and the BTP Embedded Editions of SAP Analytics Cloud?

A:

- No. The API, and this sample script, work only with the Enterprise Edition of SAP Analytics Cloud

- The ‘full’ OEM embedded edition of SAP Analytics Cloud doesn’t expose any APIs, because those instances of SAP Analytics Cloud are fully managed by SAP. This means you cannot access this API with the ‘full’ OEM embedded edition, such as the one with SuccessFactors, etc.

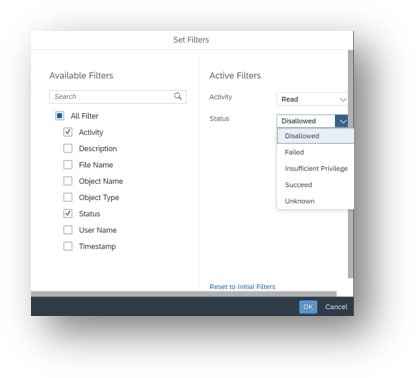

Q: For the logs written to the .csv files, are they filtered in any way?

A:

- No. The activity logs are not filtered in any way, except by time

- This is unlike the user interface, where the logs can be optionally filtered before being downloaded (see screenshot)

- Additionally, the logs have the same information as the manually downloaded ones; there are no additional properties or information just because they are downloaded via the API

Q: Is there a way to specify which activities should be logged?

A:

- No. SAP decides which activities will be captured in the activity log

- The easiest way to determine whether an activity is logged is to perform the activity and inspect the log

Q: Can I use this to download the Data Changes log?

A:

- No. The Data Changes log is not available through the API

- Unlike the Activities log, the Data Changes log is only available via a manual download

Q: Does the activities log API enable additional functionality compared to the manual options?

A:

- No. For example, the filter options are available through both the manual and API methods, and both have the same restrictions. If you’d like to define a complex filter, say ‘Status’ is ‘Disallowed’ or ‘Failed’, then you can’t! You must filter on only one option at a time. It often makes sense to download the logs and filter them afterwards

- The API always returns the timestamp in the UTC (or GMT) time zone, whereas the browser interface always shows the timestamp in the same time zone as the browser setting. This sample script uses the API and changes the timestamp for each entry to the time zone of your choice. The setting for the time zone is within the Postman Environment. See the user guide for more details
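Downloading first and filtering afterwards might look like the sketch below, which keeps rows whose ‘Status’ is any of several values. It assumes the script’s output format described in the next answer (every field quoted, commas already replaced by semicolons, carriage returns removed) and a column literally named ‘Status’; both function names are hypothetical.

```javascript
// Splits one line of the script's output, assuming every field is wrapped in
// double quotes and no field contains a comma or line break.
function splitQuotedLine(line) {
  return line.slice(1, -1).split('","').map((field) => field.replace(/""/g, '"'));
}

// Keeps the header plus every row whose Status is one of `statuses` (an OR filter).
function filterByStatus(csvText, statuses) {
  const lines = csvText.split(/\r?\n/).filter((line) => line.length > 0);
  const column = splitQuotedLine(lines[0]).indexOf("Status");
  if (column < 0) throw new Error("No 'Status' column found in the header row");
  const wanted = new Set(statuses);
  return [lines[0], ...lines.slice(1).filter((line) => wanted.has(splitQuotedLine(line)[column]))];
}
```

For example, `filterByStatus(text, ["Disallowed", "Failed"])` returns the header line plus the matching rows, ready to be joined with "\n" and written to a new file.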

Q: How do the contents of the files from the manual download compare with this command-line download?

A:

- The contents of the file are almost identical

- For example, both have the ‘Previous Value’ and ‘Current Value’ columns (same as the user interface)

- Both surround each column with (") quotes and separate them with a comma (,)

- However, the .csv files created by this sample script are different when compared to the manually downloaded option:

- They have a single header row (without any empty rows as with the manual option)

- They are split into multiple files based on time (rather than by volume as with the manual option)

- However, the ‘all’ option doesn’t split the file by time either: it produces a single file, regardless of its volume

- Any commas (,), sometimes found in filename or description columns, are replaced with a semicolon (;)

- The API performs this change

- Any carriage returns, sometimes found in description column, are replaced with a space character

- Removing carriage returns from the file may make it easier for 3rd party tools to read the contents; this is performed by the sample script

- The activities are always sorted by ascending time, with the oldest entry listed first and the latest entry listed last

- The API actually allows you to change the order, but the logic of the script demands ascending order, so the script enforces it

- The timestamp used is a fixed time zone of your choice

- By contrast, the manually downloaded option:

- Has a ‘header’ that spans multiple rows in each file, some of which are blank

- Splits the files by volume of entries (100,000 per file)

- May contain carriage returns and commas within the data fields

- Lists the activities in the order defined in the user interface

- Uses a dynamic time zone, based on your browser setting, that may change over time due to daylight saving
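The output rules listed above (a single header row, quoted fields, line breaks replaced by a space, ascending time order) could be sketched as follows. This is an illustration under assumptions, not the sample’s code: entries are plain objects keyed by column name with ISO 8601 timestamps, and comma replacement is left to the API as described.

```javascript
// Hypothetical sketch of the output rules: one header row, every field quoted,
// line breaks replaced by a space, entries sorted by ascending timestamp.
function toCsv(columns, entries, timestampColumn) {
  const clean = (value) => String(value ?? "").replace(/\r\n|\r|\n/g, " ");
  const quote = (value) => `"${clean(value).replace(/"/g, '""')}"`;
  const sorted = [...entries].sort(
    (a, b) => Date.parse(a[timestampColumn]) - Date.parse(b[timestampColumn])
  );
  const lines = [columns.map(quote).join(",")];
  for (const entry of sorted) {
    lines.push(columns.map((column) => quote(entry[column])).join(","));
  }
  return lines.join("\n") + "\n";
}
```

Sorting a copy rather than the input keeps the caller’s array untouched, and the ascending order is what allows each period to be cut out as a contiguous block.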

Q: How do security and access rights work with the activity logs?

A:

- The activities log can be accessed in 2 ways: using the User Interface, or via the API

- Each way requires different access rights to be granted

- When using the User Interface, the user needs to inherit, from their role, the ‘Read’ right for ‘Activity Log’ and optionally the ‘Delete’ right

- When using the API, the user is an ‘OAuth client’ user, like a system user, and requires the ‘Activities’ access

- The API is read-only, unlike the User Interface that also provides the option to delete logs

- It means the API has no means to delete the activity logs

Q: Is the sample solution secure?

A:

- The activities are downloaded over a secure HTTPS connection

- Once the .csv files are generated, it is for your organisation to secure them. The files contain personal data and this requires extra care to ensure compliance with local data privacy laws

- The OAuth client id and secret (username/password) are stored as clear text in the environment .json file and so access to this file should be restricted

- The access granted to the OAuth client should be limited to ‘activities’ to ensure any compromised details limit the impact to access only to the activities log and no other administration tasks
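On Linux or macOS, one way to restrict access to the environment .json file is to make it readable and writable by its owner only. A minimal sketch using Node’s `fs` module; it relies on POSIX permission bits, so on Windows use the file’s access control settings instead.

```javascript
const fs = require("fs");

// True if neither the group nor others have any permission on the file (POSIX only).
function isOwnerOnly(file) {
  return (fs.statSync(file).mode & 0o077) === 0;
}

// Restricts the file to read/write for its owner (equivalent to chmod 600).
function restrictToOwner(file) {
  fs.chmodSync(file, 0o600);
}
```

A scheduled job could refuse to run while `isOwnerOnly("environment.json")` returns false, so a permissions slip is noticed rather than silently tolerated.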

Q: Is the ‘event’ of downloading the logs via the API recorded in the activities log?

A:

- Yes. Just as with the manual download, an entry is made in the activities log for each API request that downloads the logs

- This means that running the sample script will always add at least one entry to the activities log

Q: The activities log shows that logs were manually deleted; what time zone does the description refer to?

A:

- When deleting the logs, the time zone used is inherited from the web browser that is performing the action

- This means the time zone of the deleted logs isn’t recorded; it will be the time zone of the browser that was used to delete them

- Remember that best practice is to disable the ability to delete these logs, so that the logs are always complete

Q: When using the sample script to download the logs, why does the content of the files change after I change the time zone setting?

A:

- Because entries in the logs fall into different periods (days, weeks, months) depending on the time zone you choose. When you change the time zone setting, some log entries move into a different period, and this changes the contents of the files created

- It makes no difference where you run the script: the local time zone is ignored, and only the time zone defined in the Postman Environment is used (see the user guide for more details)
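The effect can be reproduced with a small sketch that assigns a log entry to a day, week or month as seen in a chosen time zone. The function names are hypothetical, and the use of ISO 8601 weeks (starting on Monday) is an assumption; the sample script may define a week differently.

```javascript
// Hypothetical helper: the calendar date of a UTC instant in a given IANA time zone.
function zonedDate(isoUtc, timeZone) {
  const formatter = new Intl.DateTimeFormat("en-GB", {
    timeZone, year: "numeric", month: "2-digit", day: "2-digit",
  });
  const parts = {};
  for (const { type, value } of formatter.formatToParts(new Date(isoUtc))) {
    parts[type] = value;
  }
  return { year: Number(parts.year), month: Number(parts.month), day: Number(parts.day) };
}

// ISO 8601 week key such as "2023-W03": weeks start on Monday, and week 1
// is the week containing the year's first Thursday.
function isoWeekKey({ year, month, day }) {
  const date = new Date(Date.UTC(year, month - 1, day));
  const dayOfWeek = date.getUTCDay() || 7; // Monday = 1 ... Sunday = 7
  date.setUTCDate(date.getUTCDate() + 4 - dayOfWeek); // move to that week's Thursday
  const isoYear = date.getUTCFullYear();
  const week = Math.ceil(((date - Date.UTC(isoYear, 0, 1)) / 86400000 + 1) / 7);
  return `${isoYear}-W${String(week).padStart(2, "0")}`;
}

// Key of the output file an entry belongs to, for period "day" | "week" | "month".
function periodKey(isoUtc, timeZone, period) {
  const d = zonedDate(isoUtc, timeZone);
  const mm = String(d.month).padStart(2, "0");
  const dd = String(d.day).padStart(2, "0");
  if (period === "day") return `${d.year}-${mm}-${dd}`;
  if (period === "month") return `${d.year}-${mm}`;
  if (period === "week") return isoWeekKey(d);
  throw new Error(`Unknown period: ${period}`);
}

// 23:30 UTC on 31 January is already 1 February in Tokyo (UTC+9).
console.log(periodKey("2023-01-31T23:30:00Z", "UTC", "month"));        // 2023-01
console.log(periodKey("2023-01-31T23:30:00Z", "Asia/Tokyo", "month")); // 2023-02
```

The entry itself never changes; only the file it is written to does, which is why switching the time zone setting changes the file contents.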

Q: Is there a way to disable the daily email notifications when the activities log becomes full?

A:

- No. Please vote on the influence portal idea to help SAP prioritise this feature

https://influence.sap.com/sap/ino/#/idea/285466

- The internal SAP reference is FPA00-51933

- A possible workaround is to use your own email server, which would then enable you to prevent these emails from being forwarded. Customers that use their own email server have found that their users prefer it, as it makes the origin of the emails more obvious. See the related documentation.

For the developer

How the sample script works

Overview

Step 1

- The Postman environment is loaded into the JavaScript node.js container

- The Postman collection is then called as a library function, passing in an optional ‘from’ time filter to limit the volume of logs. The filter is passed within the request body to the SAP API, which reduces the number of logs and pages that need to be returned

Step 2

- The Postman collection makes requests to get an access token and the x-csrf-token (needed for the subsequent POST request)

- The logs are then downloaded, page by page, until all of them have been downloaded

Step 3

- After each page request, the response data stream is processed to remove carriage returns and to convert the timestamps to the desired time zone

Step 4

- Once all the data has been downloaded successfully, the .csv file(s) are created by splitting the logs by the desired period (day, week, month)

- Success or failure is written to the console (errors can be piped) and the exit code is set

- The Postman Collection manages all sessions, timeouts and most errors, recovering from occasional ‘wobbles’ when using the API. This improves overall stability and reliability. Unrecoverable errors are returned to the JavaScript via the ‘console’ event and then passed back to the main console, allowing you to see any underlying unhandled API errors
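The carriage-return handling in step 3 can be illustrated with a minimal sketch that replaces line breaks found inside quoted CSV fields with a single space, so that every log entry occupies exactly one line. It assumes the page data is standard CSV, with double-quoted fields and `""` as an escaped quote; the function name `cleanCsvText` is hypothetical and this is not the sample script's own code.

```javascript
// Replace CR/LF inside quoted fields with a space and normalise the
// row separators outside quotes to "\n".
function cleanCsvText(text) {
  let out = "";
  let inQuotes = false;
  for (let i = 0; i < text.length; i++) {
    const ch = text[i];
    if (ch === '"') {
      inQuotes = !inQuotes; // an escaped "" toggles twice, leaving the state unchanged
      out += ch;
    } else if (ch === "\r" || ch === "\n") {
      if (ch === "\r" && text[i + 1] === "\n") i++; // treat CRLF as one line break
      out += inQuotes ? " " : "\n";
    } else {
      out += ch;
    }
  }
  return out;
}

console.log(JSON.stringify(cleanCsvText('a,"x\r\ny"\r\nb,c\r\n'))); // "a,\"x y\"\nb,c\n"
```

Commas inside quoted fields are left alone, because any CSV parser already treats them as data. If the response is processed as a stream of chunks, the `inQuotes` state would need to be carried over from one chunk to the next.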

Best practices to avoid duplicates

Request Parameters

- pageSize: optional, and can be set from 1 to 100000. If not specified, 100000 is used. Pages of logs are returned, and the response header epm-page-count holds the total number of pages

- pageIndex: to obtain a particular page, use the request header pageIndex. Requesting a page is an activity in itself, so it adds an entry to the very log it’s downloading

- sortKey and sortDecending: the order of the logs

- The sample script uses the default pageSize

- Reducing the pageSize just increases the number of requests needed and reduces overall throughput, so there’s really no point in changing it

- pageSize=1 is helpful, though, to get just one record back; for example, when you’d like to know the timestamp of the oldest log entry and you’re not interested in any other logs
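The paging logic can be sketched as a loop that reads the total page count from the `epm-page-count` response header of the first request and then requests the remaining pages. This is a sketch under several assumptions: `fetchPage` is a hypothetical function you would implement with your own HTTP client (the sample solution uses Postman/newman instead), the request-body shape is assumed, and page indexes are assumed to start at 1.

```javascript
// Hypothetical request body. sortKey, sortDecending, pageSize and pageIndex are
// the parameters described above; "from" is the optional time filter.
// The exact shape the API expects is an assumption.
function buildRequestBody({ from, pageIndex, pageSize = 100000 }) {
  const body = { sortKey: "Timestamp", sortDecending: false, pageSize, pageIndex };
  if (from) body.from = from;
  return body;
}

// Download every page. fetchPage(body) must resolve to
// { headers: { "epm-page-count": "<n>" }, rows: [...] }.
async function downloadAllPages(fetchPage, { from, pageSize } = {}) {
  const rows = [];
  let pageCount = 1; // replaced by the header value from the first response
  for (let pageIndex = 1; pageIndex <= pageCount; pageIndex++) {
    const response = await fetchPage(buildRequestBody({ from, pageIndex, pageSize }));
    if (pageIndex === 1) {
      pageCount = Number(response.headers["epm-page-count"]) || 0;
    }
    // Append row by row: spreading 100,000 rows into push() can overflow the stack.
    for (const row of response.rows) rows.push(row);
  }
  return rows;
}
```

Because the page count is taken once, from the first response, the loop is bounded: entries added while the download is running (including the download requests themselves) sort after the last page requested, and can be picked up by a later run.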

Best Practice to avoid duplicate entries appearing on different pages

- If sortDecending is true on Timestamp, there’s a problem!

- In the example, we see Page1 is ok, but the act of requesting it adds a new log entry at the top of Page1 by the time the request for Page2 is made, so every entry shifts down by one position

- This means Page2 now starts with the entry that was previously the last one on Page1, resulting in a duplicate that we don’t want

- So, always set sortKey=Timestamp and sortDecending=false if you could get back more than one page, to avoid duplicated log entries

- A typical SAP Analytics Cloud Service could have in excess of 500,000 logs over a year
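The duplicate problem can be reproduced with a small, self-contained simulation. It is a model, not the real API: log entries are numbered in time order, each page request appends one new entry after its response is returned, and the page count is fixed when paging starts. The names `simulatePaging` and `hasDuplicates` are hypothetical.

```javascript
// Simulated paging over an activity log whose entries are numbered 1..n in
// time order. Each page request is itself logged, so one new (newest) entry
// is appended after every page is returned.
function simulatePaging(initialCount, pageSize, descending) {
  const log = Array.from({ length: initialCount }, (_, i) => i + 1);
  const pageCount = Math.ceil(initialCount / pageSize); // fixed when paging starts
  const received = [];
  for (let page = 0; page < pageCount; page++) {
    const ordered = descending ? [...log].reverse() : log;
    received.push(...ordered.slice(page * pageSize, (page + 1) * pageSize));
    log.push(log.length + 1); // the request just made is now in the log
  }
  return received;
}

const hasDuplicates = (ids) => new Set(ids).size !== ids.length;

console.log(simulatePaging(4, 2, true));  // [ 4, 3, 3, 2 ]  entry 3 twice, entry 1 missed
console.log(simulatePaging(4, 2, false)); // [ 1, 2, 3, 4 ]
```

With ascending order the new entries land after everything already counted, so earlier pages never shift; with descending order each new entry pushes every existing entry one position further down.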
