Scaling WebSockets Challenges

What the article is about

Scaling WebSockets for high concurrency poses unique challenges. This analysis explores these challenges, discusses vertical and horizontal scaling limitations, and introduces an optimization strategy by:

- Removing buffers

- Zero copy upgrade

- Netpoll

These optimizations reduce memory usage by more than 10x, making large numbers of WebSocket connections far more cost-effective to serve.

Scaling WebSockets The Right Way

Real-time interactions are at the heart of the modern web, and delivering them to small audiences is relatively easy thanks to protocols such as WebSockets. There are two main types of communication:

Unidirectional Communication:

- Live sports updates

- Real-time dashboards, streams

- Location tracking...

Bidirectional Communication:

- Chat

- Virtual events, and virtual classrooms

- Polls and quizzes...

But there is a challenge...

...and it is scaling your solution to handle tens of thousands of users/connections and more.

Challenges in Scaling WebSockets vs. HTTP

Scaling WebSockets is notably more complex than scaling HTTP due to their fundamentally different natures:

HTTP is stateless and straightforward. Each request is self-contained, making it easy to distribute across multiple servers using load balancers. The server answers a request and promptly forgets about the client.

WebSockets, on the other hand, are stateful and persistent. Scaling them involves two main challenges:

Maintaining Persistent Connections: WebSockets rely on persistent connections between servers and potentially a vast number of clients. This requires careful management and resource allocation.

Data Synchronization: State often needs to be shared between clients/servers, creating a complex synchronization challenge. For instance, in a chat application, messages must be shared among all participants.

Horizontal vs Vertical Scaling

Vertical Scaling: Increasing the capacity of a single server by upgrading its hardware components like CPU, RAM, or storage.

Horizontal Scaling: Expanding a system's capacity by adding more servers or nodes to distribute the workload, commonly used in cloud computing for scalability.

But why are WebSockets hard to scale?

The main challenge is that connections to your WebSocket server need to be persistent. And even once you’ve scaled out your server nodes both vertically and horizontally, you also need to provide a solution for sharing data between the nodes.

Vertical Scaling Problems

Vertical scaling has its pros and cons. But is there anything specific to WebSockets that makes vertical scaling a poor choice?

OS Limitations - File descriptors - Every TCP connection uses a file descriptor. Operating systems limit the number of file descriptors that can be open at once, and each running process may also have its own limit. With a large number of open TCP connections you may run into these limits, which can cause new connections to be rejected, among other issues.

Higher possibility for downtime - Unless you have a backup server that can handle operations and requests, you will need considerable downtime to upgrade your machine.

Single point of failure - Having all your operations on a single server increases the risk of losing all your data if a hardware or software failure were to occur.

Resource limitations - There is a limit to how much you can upgrade a machine. Every machine has its threshold for RAM, storage, and processing power. If you have just one WebSocket server, there's a finite amount of resources you can add to it, which limits the number of concurrent connections your system can handle. Your tech stack might also be unable to make use of ever more threads and RAM. Take Node.js, for example. A single instance of the V8 JavaScript engine eventually hits an upper bound on how much RAM it can use. On top of that, Node.js runs JavaScript on a single thread, so a single Node.js process won't take advantage of additional CPU cores.

Scalability Costs - Vertical scaling can become expensive as you invest in high-end hardware. The costs may not be justified, especially when horizontal scaling provides a more cost-effective and fault-tolerant solution.

Resource Underutilization - Scaling vertically often results in underutilized resources during periods of low traffic. This inefficiency can be mitigated with horizontal scaling, which dynamically allocates resources as needed.

Horizontal Scaling Problems

Most of the time horizontal scaling is a very good way to manage resources efficiently: start more instances when load goes up, and remove instances in quieter periods to save money. With WebSockets, however, it brings more fundamental problems.

Data Synchronization - When WebSocket connections rely on shared data or state (e.g., chat messages), ensuring data consistency across multiple servers can be complicated, and proper synchronization mechanisms are necessary. One option is a Pub/Sub service such as GCP Pub/Sub, although that of course costs money.
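To make the Pub/Sub idea concrete, here is a minimal in-memory sketch. The `Broker` type and its methods are hypothetical stand-ins for an external service; a real deployment would talk to a networked broker instead of Go channels.

```go
package main

import (
	"fmt"
	"sync"
)

// Broker is a hypothetical in-memory stand-in for an external pub/sub service.
type Broker struct {
	mu   sync.RWMutex
	subs map[string][]chan string // topic -> one channel per subscribed node
}

func NewBroker() *Broker {
	return &Broker{subs: make(map[string][]chan string)}
}

// Subscribe registers a server node for a topic and returns its delivery channel.
func (b *Broker) Subscribe(topic string) <-chan string {
	ch := make(chan string, 16)
	b.mu.Lock()
	b.subs[topic] = append(b.subs[topic], ch)
	b.mu.Unlock()
	return ch
}

// Publish fans a message out to every node subscribed to the topic.
// It blocks if a subscriber's buffer is full; a real broker would need a policy for that.
func (b *Broker) Publish(topic, msg string) {
	b.mu.RLock()
	defer b.mu.RUnlock()
	for _, ch := range b.subs[topic] {
		ch <- msg
	}
}

func main() {
	b := NewBroker()
	nodeA := b.Subscribe("room-1")
	nodeB := b.Subscribe("room-1")

	// A client connected to any node sends a chat message; every node receives it
	// and can forward it to its own WebSocket clients.
	b.Publish("room-1", "hello")
	fmt.Println("node A got:", <-nodeA)
	fmt.Println("node B got:", <-nodeB)
}
```

Each server node only needs to know the broker, not the other nodes, which is what keeps adding and removing instances cheap.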

Session Management - Managing user sessions across multiple servers can be complex. Users may connect to different servers at different times, requiring a centralized session management solution.

Data integrity - Guaranteed ordering and exactly-once delivery are crucial for some use cases. Once a WebSocket connection is re-established, the data stream must resume precisely where it left off.
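One common way to resume a stream is to number every message and let the client report the last number it saw when it reconnects. The sketch below assumes that approach; `Message`, `Log`, `Append` and `Since` are hypothetical names, and the unbounded slice is only for illustration.

```go
package main

import "fmt"

// Message carries a monotonically increasing sequence number.
type Message struct {
	Seq  uint64
	Body string
}

// Log keeps messages in order. A real system would bound retention.
type Log struct {
	msgs []Message
}

// Append stores a message and assigns it the next sequence number (starting at 1).
func (l *Log) Append(body string) Message {
	m := Message{Seq: uint64(len(l.msgs)) + 1, Body: body}
	l.msgs = append(l.msgs, m)
	return m
}

// Since returns, in order, every message with Seq greater than lastSeen.
func (l *Log) Since(lastSeen uint64) []Message {
	if lastSeen >= uint64(len(l.msgs)) {
		return nil
	}
	return l.msgs[lastSeen:]
}

func main() {
	var l Log
	l.Append("a")
	l.Append("b")
	l.Append("c")

	// The client saw message 1 before the connection dropped.
	for _, m := range l.Since(1) {
		fmt.Printf("replay seq=%d body=%s\n", m.Seq, m.Body)
	}
}
```

The client can also use the sequence numbers to drop duplicates, which covers the exactly-once side from its point of view.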

Reconnection - You could implement a script to reconnect clients automatically. However, if reconnection attempts occur immediately after the connection closes and the server does not have enough capacity, they put your system under even more pressure and can lead to cascading failures.

Load balancing strategy decisions:

- Sticky sessions - A sticky session is a load-balancing strategy where each user is “sticky” to a specific server. For example, if a user connects to server A, they will always connect to server A, even if another server has less load.

- Unbalanced servers - If a lot of users disconnect from some of the servers, a single server can be left busy while the others sit idle.
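The trade-off between the two strategies can be sketched in a few lines. Both functions below are hypothetical illustrations: hashing the client ID is only one possible way to implement stickiness, and the connection counts are made up.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// stickyServer maps a client ID to one of n servers (n > 0) by hashing,
// so the same client always lands on the same server regardless of load.
func stickyServer(clientID string, n int) int {
	h := fnv.New32a()
	h.Write([]byte(clientID))
	return int(h.Sum32() % uint32(n))
}

// leastLoaded returns the index of the server with the fewest open connections.
func leastLoaded(conns []int) int {
	best := 0
	for i, c := range conns {
		if c < conns[best] {
			best = i
		}
	}
	return best
}

func main() {
	conns := []int{9000, 120, 4000} // hypothetical open connections per server
	fmt.Println("sticky choice for user-42:", stickyServer("user-42", len(conns)))
	fmt.Println("least loaded server:", leastLoaded(conns))
}
```

The sticky choice ignores `conns` entirely, which is exactly how servers end up unbalanced after a wave of disconnects.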

Let's try to analyse and improve

Scaling WebSocket applications is complex, but instead of solely relying on vertical or horizontal scaling, let's explore coding techniques to enhance performance and efficiency within the existing infrastructure.

You can test with whatever number of client connections you want; the relative improvement will be the same. I will test with 16 000 connections and also extrapolate the numbers to 1 000 000.

How can we improve the performance with our own analysis skills?

We are going to use go tool pprof.

Idiomatic Way

- Using the net/http package together with gorilla/websocket, the most used and renowned library, although lately deprecated

- Using a goroutine per WebSocket connection

    package main

    import (
        "log"
        "net/http"
        _ "net/http/pprof" // registers the /debug/pprof handlers on the default mux
        "sync/atomic"
        "syscall"

        "github.com/gorilla/websocket"
    )

    // count tracks the number of currently open WebSocket connections.
    var count int64

    func ws(w http.ResponseWriter, r *http.Request) {
        // Upgrade connection
        upgrader := websocket.Upgrader{}
        conn, err := upgrader.Upgrade(w, r, nil)
        if err != nil {
            return
        }

        n := atomic.AddInt64(&count, 1)
        if n%100 == 0 {
            log.Printf("Total number of connections: %v", n)
        }
        defer func() {
            n := atomic.AddInt64(&count, -1)
            if n%100 == 0 {
                log.Printf("Total number of connections: %v", n)
            }
            conn.Close()
        }()

        // Read messages from socket
        for {
            _, msg, err := conn.ReadMessage()
            if err != nil {
                return
            }
            log.Printf("msg: %s", string(msg))
        }
    }

    func main() {
        // Increase resources limitations: raise the open-file limit to its maximum
        var rLimit syscall.Rlimit
        if err := syscall.Getrlimit(syscall.RLIMIT_NOFILE, &rLimit); err != nil {
            panic(err)
        }
        rLimit.Cur = rLimit.Max
        if err := syscall.Setrlimit(syscall.RLIMIT_NOFILE, &rLimit); err != nil {
            panic(err)
        }

        // Enable pprof hooks
        go func() {
            if err := http.ListenAndServe("localhost:6060", nil); err != nil {
                log.Fatalf("Pprof failed: %v", err)
            }
        }()

        // Every connection is served on its own goroutine, as that is how net/http works
        http.HandleFunc("/", ws)
        if err := http.ListenAndServe("localhost:9080", nil); err != nil {
            log.Fatal(err)
        }
    }

Results

Taking up to 300MB RAM for 16 000 connections

Gorilla using net/http

Goroutines for every connection. Each goroutine requires its own stack, which may have an initial size of 2 to 8 KB depending on the operating system and Go version. At 8 KB each, one million connections would need up to 8 GB for goroutine stacks alone, even though the goroutines have barely started working and most of them are just waiting for a signal or message.
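If you want to check the per-goroutine cost on your own machine, a rough measurement is possible with `runtime.MemStats`. This is only a sketch: `stackBytesPerGoroutine` is a hypothetical helper, and the number it reports depends on the Go version and on what each goroutine actually does.

```go
package main

import (
	"fmt"
	"runtime"
)

// stackBytesPerGoroutine parks n goroutines on a channel, the way idle
// connection handlers wait for messages, and reports the average growth
// of in-use stack memory per goroutine.
func stackBytesPerGoroutine(n int) uint64 {
	var before, after runtime.MemStats
	runtime.GC()
	runtime.ReadMemStats(&before)

	block := make(chan struct{})
	started := make(chan struct{}, n)
	for i := 0; i < n; i++ {
		go func() {
			started <- struct{}{}
			<-block // stay parked, like a handler waiting on a socket
		}()
	}
	for i := 0; i < n; i++ {
		<-started
	}

	runtime.ReadMemStats(&after)
	close(block) // release the goroutines
	if after.StackInuse <= before.StackInuse {
		return 0
	}
	return (after.StackInuse - before.StackInuse) / uint64(n)
}

func main() {
	fmt.Printf("about %d bytes of stack per idle goroutine\n", stackBytesPerGoroutine(16000))
}
```

Handlers that call into deeper code paths grow their stacks beyond the initial size, so treat the result as a lower bound for a real server.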

Useless buffers from net/http and from hijacking the request, taking up to 240 MB

- There is a dedicated NewReader and NewWriter for every connection. Each of them takes around 4KB, which adds up to 8KB per connection just for an abstraction.

- There is Hijack, which is responsible for upgrading the connection from HTTP to WebSocket and allocates another NewWriter, which results in another 4KB.

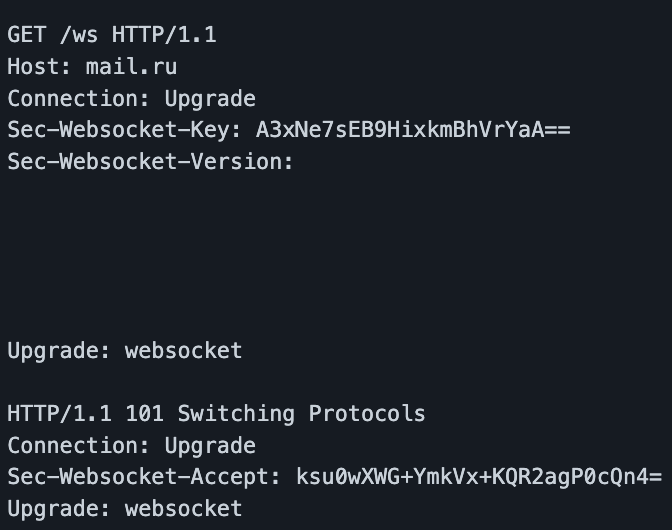

If you don’t know how WebSocket works, it should be mentioned that the client switches to the WebSocket protocol by means of a special HTTP mechanism called Upgrade. After the successful processing of an Upgrade request, the server and the client use the TCP connection to exchange binary WebSocket frames.
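As a small illustration of that handshake, the server proves it understood the Upgrade request by hashing the client's `Sec-WebSocket-Key` header together with a fixed GUID from RFC 6455 and returning the result in `Sec-WebSocket-Accept`. The libraries used in this post do this for you; the `acceptKey` helper below is only a sketch of the rule.

```go
package main

import (
	"crypto/sha1"
	"encoding/base64"
	"fmt"
)

// websocketGUID is the fixed GUID that RFC 6455 defines for the handshake.
const websocketGUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"

// acceptKey derives the Sec-WebSocket-Accept value from the client's
// Sec-WebSocket-Key: base64(SHA-1(key + GUID)).
func acceptKey(clientKey string) string {
	sum := sha1.Sum([]byte(clientKey + websocketGUID))
	return base64.StdEncoding.EncodeToString(sum[:])
}

func main() {
	// Sample key taken from RFC 6455.
	fmt.Println(acceptKey("dGhlIHNhbXBsZSBub25jZQ=="))
	// prints "s3pPLMBiTxaQ9kYGzzhZRbK+xOo="
}
```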

Equation

Can we make an equation about the analyses

Memory = conn * (goroutinesMem + net/http buffers + gorilla/websocket buffers)

Memory = conn * (goroutinesMem + 2*NewReader + NewWriter)

Memory = conn * (8KB + 8KB + 4KB)

Memory = 16000 * (8KB + 8KB + 4KB) = 320000KB = 320MB

What we have almost the same value as the test shown - 320MB. There will be always some difference due to other structs which are fixed or not important

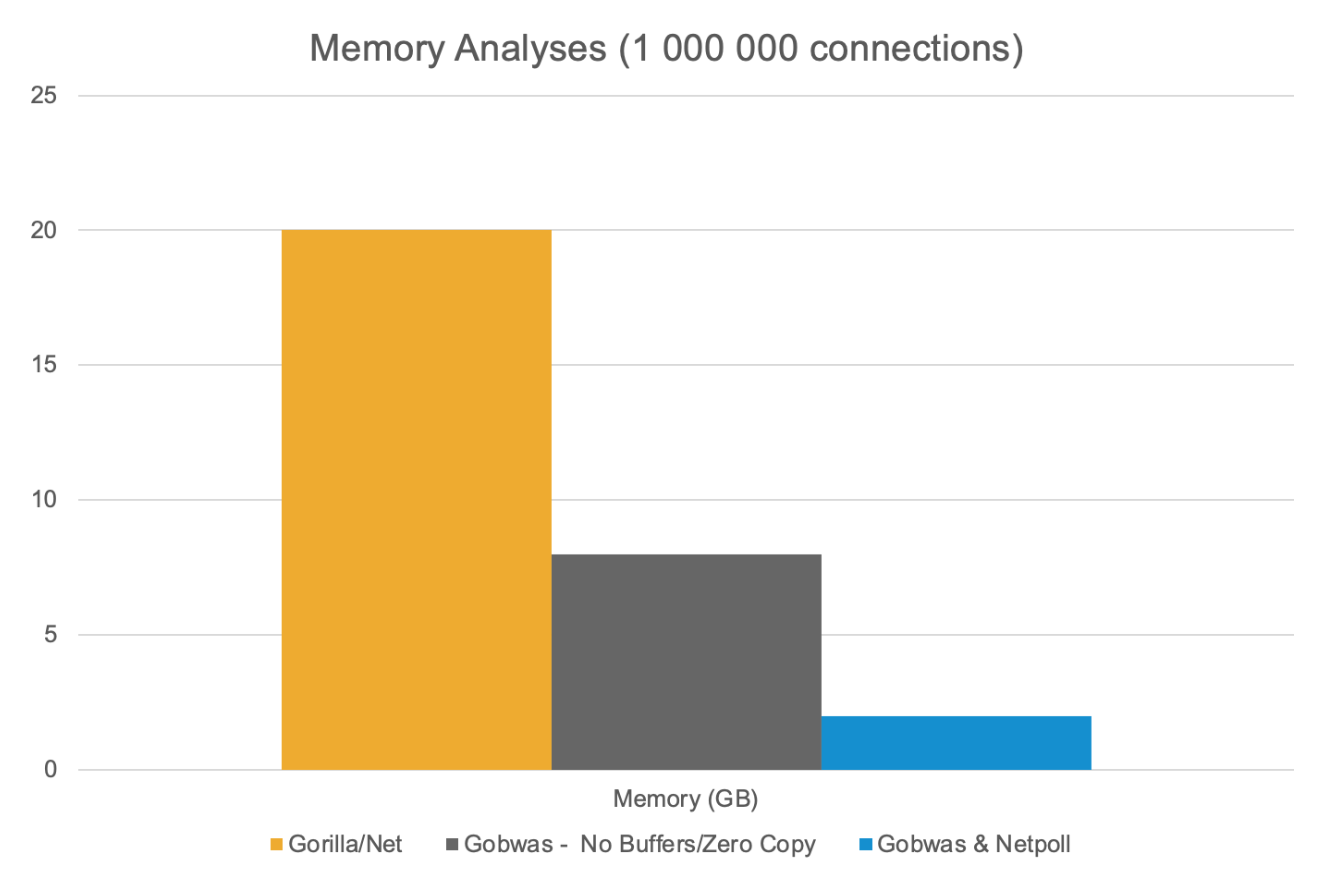

What do you think will happen if this is shipped to the real world with 1 000 000 million of users

Memory = 1000000 * (8KB + 8KB + 4KB) = 20GB

It does not look good as the if we want to scale that would cost us a lot of money for sure
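The same estimate written as code makes it easy to plug in other connection counts. The constants are the per-connection figures used in the equation above; `baselineKB` is a hypothetical helper name, and decimal units (1MB = 1000KB) are used to match the text.

```go
package main

import "fmt"

// Per-connection costs in KB, as assumed in the equation above.
const (
	goroutineStackKB = 8 // upper bound for one goroutine stack
	netHTTPBuffersKB = 8 // bufio reader (4KB) + bufio writer (4KB)
	hijackWriterKB   = 4 // extra writer allocated when the connection is hijacked
)

// baselineKB estimates the memory, in KB, of the goroutine-per-connection
// setup for the given number of connections.
func baselineKB(conns int) int {
	return conns * (goroutineStackKB + netHTTPBuffersKB + hijackWriterKB)
}

func main() {
	for _, n := range []int{16000, 1000000} {
		kb := baselineKB(n)
		fmt.Printf("%d connections: %d KB (~%d MB)\n", n, kb, kb/1000)
	}
}
```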

Improvements

- Eliminating the buffers, because they are not required. We can just read the data from the stream, without a useless abstraction that does not bring anything (zero-cost abstractions).

- Eliminating the goroutines, because most of them could be doing nothing, just blocked waiting for a message. We want to utilize the resources we have.

- Zero-copy upgrade: no copying of buffers while upgrading the HTTP request to WebSocket, since copying requires additional memory and has a performance cost.

Upgrade Request

If we use the standard net/http, a lot of structures are copied on the upgrade request, which is overkill. Take the headers, for example: http.Request contains a field of the same-named Header type that is unconditionally filled with all request headers by copying data from the connection into string values. Imagine how much extra data could be kept inside this field, for example for a large Cookie header.

Zero copy upgrade

A zero-copy upgrade saves CPU cycles and memory bandwidth.

Initial Protocol: Let's say you have a web server that initially serves content using plain HTTP.

Upgrade Request: When a client wants to transition to a different protocol, such as WebSocket, it sends an "upgrade" request to the server. This request typically includes specific headers like Upgrade and Connection.

Zero-Copy: In a zero-copy upgrade, the server doesn't copy the data from the initial connection buffer to a new buffer for the upgraded protocol. Instead, it reuses the existing connection buffer for the new protocol.

For example, if the server is transitioning from HTTP to WebSocket, it can use the same underlying socket connection and buffer to handle WebSocket frames without copying the data to a new buffer.

Efficiency: This technique is highly efficient because it avoids the need to duplicate data, which is especially important when dealing with a large number of connections, such as a million WebSocket connections.

Eliminating Buffers and switching to TCP layer, Zero Copy upgrade

That is essential, and happily there is a library that can do it for us: gobwas/ws.

package main

import (

"github.com/gobwas/ws"

"github.com/gobwas/ws/wsutil"

"log"

"net/http"

_ "net/http/pprof"

"syscall"

)

func main() {

// Increase resources limitations

var rLimit syscall.Rlimit

if err := syscall.Getrlimit(syscall.RLIMIT_NOFILE, &rLimit); err != nil {

panic(err)

}

rLimit.Cur = rLimit.Max

if err := syscall.Setrlimit(syscall.RLIMIT_NOFILE, &rLimit); err != nil {

panic(err)

}

// Enable pprof hooks

go func() {

if err := http.ListenAndServe("localhost:6060", nil); err != nil {

log.Fatalf("pprof failed: %v", err)

}

}()

http.ListenAndServe(":9080", http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {

conn, _, _, err := ws.UpgradeHTTP(r, w)

if err != nil {

// handle error

}

go func() {

defer conn.Close()

for {

msg, op, err := wsutil.ReadClientData(conn)

if err != nil {

// handle error

}

log.Println(msg)

err = wsutil.WriteServerMessage(conn, op, msg)

if err != nil {

// handle error

}

}

}()

}))

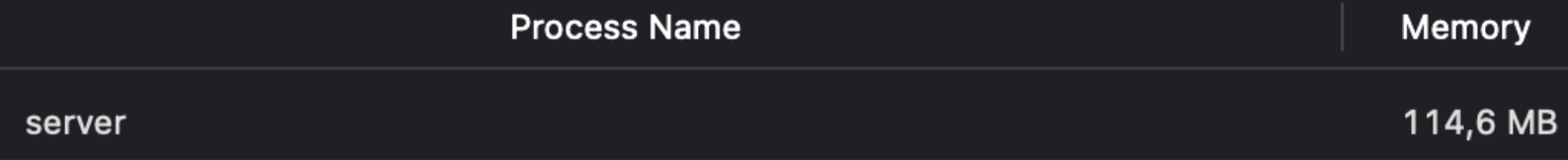

}Results

Memory usage decreased to 114MB just by analysing the buffers, eliminating them, and making a zero-copy upgrade. The app now consumes about 3x less memory.

We still have a goroutine per connection.

We have eliminated all the useless buffers. If you look carefully, you will notice that we now work directly with the file descriptors at the TCP layer.

Relying on the stream and file descriptors directly is just fine. You lose a little abstraction, but it is not a big deal: as you can see in the code, the library already provides helpers for that.

Equation Improvement

We can remove the buffers from it

Memory = conn * (goroutinesMem)

Memory = 16000 * (8KB) = 128000KB = 128MB

// 1 million connections

Memory = 1000000 * (8KB) = 8GB

8GB... we can do more.

Netpolling

Netpolling is a technique used in network programming and event-driven systems to efficiently manage and monitor many network connections, such as sockets. Instead of blocking a thread on every connection or checking each connection in a busy loop, the program asks the kernel which connections have events or data ready and handles only those.

- Monitor multiple file descriptors to see whether I/O is possible on any of them.

- Check for events or data readiness on connections.

You can find details here

We are going to use gnet for this, together with gobwas, which has already improved our memory usage. Other frameworks can also be appropriate if you want to use netpoll, each with its own library specifics.

    package main

    import (
        "bytes"
        "flag"
        "fmt"
        "io"
        "log"
        "net/http"
        _ "net/http/pprof" // registers the /debug/pprof handlers on the default mux
        "sync/atomic"
        "syscall"
        "time"

        "github.com/gobwas/ws"
        "github.com/gobwas/ws/wsutil"
        "github.com/panjf2000/gnet/v2"
        "github.com/panjf2000/gnet/v2/pkg/logging"
    )

    type wsServer struct {
        gnet.BuiltinEventEngine

        addr      string
        multicore bool
        eng       gnet.Engine
        connected int64 // number of currently open connections
    }

    func (wss *wsServer) OnBoot(eng gnet.Engine) gnet.Action {
        wss.eng = eng
        logging.Infof("echo server with multi-core=%t is listening on %s", wss.multicore, wss.addr)
        return gnet.None
    }

    // OnOpen attaches a fresh codec to every new connection.
    func (wss *wsServer) OnOpen(c gnet.Conn) ([]byte, gnet.Action) {
        c.SetContext(new(wsCodec))
        atomic.AddInt64(&wss.connected, 1)
        return nil, gnet.None
    }

    func (wss *wsServer) OnClose(c gnet.Conn, err error) (action gnet.Action) {
        if err != nil {
            logging.Warnf("error occurred on connection=%s, %v\n", c.RemoteAddr().String(), err)
        }
        atomic.AddInt64(&wss.connected, -1)
        //logging.Infof("conn[%v] disconnected", c.RemoteAddr().String())
        return gnet.None
    }

    // OnTraffic is called by the event loop when data arrives on a connection.
    func (wss *wsServer) OnTraffic(c gnet.Conn) (action gnet.Action) {
        // Named codec rather than ws so that it does not shadow the ws package
        codec := c.Context().(*wsCodec)
        if codec.readBufferBytes(c) == gnet.Close {
            return gnet.Close
        }

        // Complete the HTTP upgrade first if it has not happened yet
        ok, action := codec.upgrade(c)
        if !ok {
            return
        }
        if codec.buf.Len() <= 0 {
            return gnet.None
        }

        messages, err := codec.Decode(c)
        if err != nil {
            log.Println(err)
            return gnet.Close
        }
        if messages == nil {
            return
        }

        // Echo every message back to the client
        for _, message := range messages {
            err = wsutil.WriteServerMessage(c, message.OpCode, message.Payload)
            if err != nil {
                log.Println(err)
                return gnet.Close
            }
        }
        return gnet.None
    }

    func (wss *wsServer) OnTick() (delay time.Duration, action gnet.Action) {
        //logging.Infof("[connected-count=%v]", atomic.LoadInt64(&wss.connected))
        return 3 * time.Second, gnet.None
    }

    // wsCodec holds the per-connection state: whether the upgrade is done,
    // the bytes read so far, and the frames of a message being assembled.
    type wsCodec struct {
        upgraded bool
        buf      bytes.Buffer
        wsMsgBuf wsMessageBuf
    }

    type wsMessageBuf struct {
        firstHeader *ws.Header
        curHeader   *ws.Header
        cachedBuf   bytes.Buffer
    }

    // readWrite combines a reader and a writer into an io.ReadWriter for ws.Upgrade.
    type readWrite struct {
        io.Reader
        io.Writer
    }

    func (w *wsCodec) upgrade(c gnet.Conn) (ok bool, action gnet.Action) {
        if w.upgraded {
            ok = true
            return
        }

        // Parse from a temporary reader so that an incomplete handshake
        // leaves the buffered bytes untouched.
        buf := &w.buf
        tmpReader := bytes.NewReader(buf.Bytes())
        oldLen := tmpReader.Len()
        hs, err := ws.Upgrade(readWrite{tmpReader, c})
        skipN := oldLen - tmpReader.Len()
        if err != nil {
            log.Println(err)
            if err == io.EOF || err == io.ErrUnexpectedEOF {
                // The request is not complete yet; wait for more data
                return
            }
            buf.Next(skipN)
            logging.Infof("conn[%v] [err=%v]", c.RemoteAddr().String(), err.Error())
            action = gnet.Close
            return
        }

        // Drop the handshake bytes that were consumed
        buf.Next(skipN)
        logging.Infof("conn[%v] upgrade websocket protocol! Handshake: %v", c.RemoteAddr().String(), hs)
        ok = true
        w.upgraded = true
        return
    }

    // readBufferBytes moves everything gnet has buffered for the connection into w.buf.
    func (w *wsCodec) readBufferBytes(c gnet.Conn) gnet.Action {
        size := c.InboundBuffered()
        buf := make([]byte, size)
        read, err := c.Read(buf)
        if err != nil {
            logging.Infof("read err! %v", err)
            return gnet.Close
        }
        if read < size {
            //logging.Infof("read bytes len err! size: %d read: %d", size, read)
            return gnet.Close
        }
        w.buf.Write(buf)
        return gnet.None
    }

    // Decode returns the complete text and binary messages read so far and
    // answers control frames (ping, close) directly.
    func (w *wsCodec) Decode(c gnet.Conn) (outs []wsutil.Message, err error) {
        messages, err := w.readWsMessages()
        if err != nil {
            logging.Infof("Error reading message! %v", err)
            return nil, err
        }
        if len(messages) == 0 {
            return
        }
        for _, message := range messages {
            if message.OpCode.IsControl() {
                err = wsutil.HandleClientControlMessage(c, message)
                if err != nil {
                    log.Println(err)
                    return
                }
                continue
            }
            if message.OpCode == ws.OpText || message.OpCode == ws.OpBinary {
                outs = append(outs, message)
            }
        }
        return
    }

    func (w *wsCodec) readWsMessages() (messages []wsutil.Message, err error) {
        msgBuf := &w.wsMsgBuf
        in := &w.buf
        for {
            // Read the next frame header unless one is already pending
            if msgBuf.curHeader == nil {
                if in.Len() < ws.MinHeaderSize {
                    // Not even a minimal header yet; wait for more data
                    return
                }
                var head ws.Header
                if in.Len() >= ws.MaxHeaderSize {
                    head, err = ws.ReadHeader(in)
                    if err != nil {
                        log.Println(err)
                        return messages, err
                    }
                } else {
                    // The header may be incomplete: parse from a temporary reader
                    // so that a short read leaves the buffer untouched
                    tmpReader := bytes.NewReader(in.Bytes())
                    oldLen := tmpReader.Len()
                    head, err = ws.ReadHeader(tmpReader)
                    skipN := oldLen - tmpReader.Len()
                    if err != nil {
                        log.Println(err)
                        if err == io.EOF || err == io.ErrUnexpectedEOF {
                            return messages, nil
                        }
                        in.Next(skipN)
                        return nil, err
                    }
                    in.Next(skipN)
                }
                msgBuf.curHeader = &head
                err = ws.WriteHeader(&msgBuf.cachedBuf, head)
                if err != nil {
                    log.Println(err)
                    return nil, err
                }
            }

            // Copy the frame payload once all of it has arrived
            dataLen := int(msgBuf.curHeader.Length)
            if dataLen > 0 {
                if in.Len() >= dataLen {
                    _, err = io.CopyN(&msgBuf.cachedBuf, in, int64(dataLen))
                    if err != nil {
                        log.Println(err)
                        return
                    }
                } else {
                    fmt.Println(in.Len(), dataLen)
                    logging.Infof("incomplete data")
                    return
                }
            }

            // The final frame completes the message
            if msgBuf.curHeader.Fin {
                messages, err = wsutil.ReadClientMessage(&msgBuf.cachedBuf, messages)
                if err != nil {
                    log.Println(err)
                    return nil, err
                }
                msgBuf.cachedBuf.Reset()
            } else {
                logging.Infof("The data is split into multiple frames")
            }
            msgBuf.curHeader = nil
        }
    }

    func main() {
        // Increase resources limitations: raise the open-file limit to its maximum
        var rLimit syscall.Rlimit
        if err := syscall.Getrlimit(syscall.RLIMIT_NOFILE, &rLimit); err != nil {
            panic(err)
        }
        rLimit.Cur = rLimit.Max
        if err := syscall.Setrlimit(syscall.RLIMIT_NOFILE, &rLimit); err != nil {
            panic(err)
        }

        // Enable pprof hooks
        go func() {
            if err := http.ListenAndServe("localhost:6060", nil); err != nil {
                log.Fatalf("pprof failed: %v", err)
            }
        }()

        var port int
        var multicore bool

        // Example command: go run main.go --port 8080 --multicore=true
        flag.IntVar(&port, "port", 9080, "server port")
        flag.BoolVar(&multicore, "multicore", true, "multicore")
        flag.Parse()

        wss := &wsServer{addr: fmt.Sprintf("tcp://localhost:%d", port), multicore: multicore}
        fmt.Println("start serving")

        // Start serving!
        log.Println("server exits:", gnet.Run(wss, wss.addr, gnet.WithMulticore(multicore), gnet.WithReusePort(true), gnet.WithTicker(true)))
    }

The code is a little more complex, but most of it can be improved, removed, or split into other packages.

Results

It looks like we have done a great job: by eliminating the per-connection goroutines we lowered memory usage to 24MB, which is more than 10x less than the initial setup.

Now a fixed number of goroutines, around 20 by default, is responsible for all 16 000 connections. In dark red you can observe the polling stack and the number of goroutines. We are using netpoll, communicating directly with the kernel.

As in the previous step, no extra buffers are used, thanks to gobwas.

Equation Improvement

The goroutines are now a fixed cost, so they are no longer part of the per-connection term. What remains per connection is counted here as roughly 1KB:

Memory = conn * 1KB + (goroutinesMem * 20)

Memory = 16000 * 1KB + (20 * 8KB) = 16160KB = ~16MB

That is in line with the 24MB measured. We started from 320MB for 16 000 connections, and now we are at around 20MB. That will definitely save money at scale:

Memory = 1000000 * 1KB + (20 * 8KB) = ~1GB

We started with 20GB for 1 000 000 connections, and with the help of open source analysis tools and our own skills we have improved our WebSocket app to use only ~1GB. This value can vary depending on the goroutine pool responsible for handling epoll events.

Conclusion

The main focus of the formulas is what we have improved (more than 10x by eliminating buffers and goroutines). We do not model precisely how many KB a single socket connection takes, because that depends on various factors, which could of course be added to the formula. In our case we do not care about it because we are not optimizing it.

WebSockets are crucial for real-time web applications, but scaling them is challenging due to their stateful and persistent nature.

Challenges in scaling WebSockets include maintaining persistent connections and synchronizing data between clients.

Vertical scaling has limitations like file descriptor constraints, downtime during upgrades, and single points of failure.

Horizontal scaling introduces complexities in data synchronization, session management, and data integrity.

Shared challenges include managing heartbeats for many concurrent connections.

To address these issues, efficient code using the gobwas/ws library optimizes memory usage and minimizes goroutines per connection, resulting in significant memory savings.
